Connectionism

Introduction

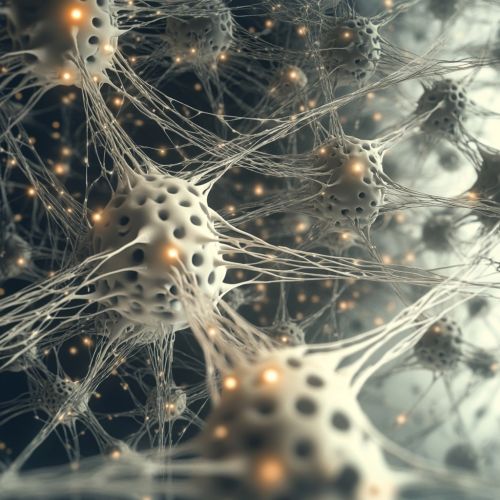

Connectionism is a theoretical framework for understanding the mind and brain that relies on artificial neural networks (ANNs) to model mental and behavioral phenomena. This approach is rooted in the idea that cognitive processes can be understood in terms of networks of simple units (neurons) and their connections. Connectionist models are used extensively in cognitive science, psychology, neuroscience, and artificial intelligence (AI) to simulate and understand various aspects of human cognition, including learning, memory, perception, and language.

Historical Background

The origins of connectionism can be traced back to the early 20th century and the work of psychologists such as Edward Thorndike, whose Law of Effect posited that behaviors followed by satisfying outcomes are more likely to be repeated. A significant milestone came in the late 1950s, when Frank Rosenblatt developed the perceptron, an early type of artificial neural network. The perceptron was conceived as a simplified model of how networks of neurons might learn to classify patterns, with visual pattern recognition as its motivating task.

In the 1980s, connectionism attracted renewed interest with the advent of parallel distributed processing (PDP) models, popularized by David Rumelhart, James McClelland, and the PDP Research Group. These models emphasized distributed representations and learning algorithms such as backpropagation for training neural networks.

Core Concepts

Neural Networks

At the heart of connectionism are artificial neural networks, which consist of interconnected units (neurons) organized into layers. Each neuron receives input from other neurons, processes this input (typically by summing its weighted inputs and applying an activation function), and transmits the result to other neurons. The strengths of the connections between neurons, known as weights, determine the network's behavior and are the quantities that learning adjusts.
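The description above can be made concrete with a minimal sketch of a forward pass. The sigmoid activation, the bias terms, the layer sizes, and the hand-picked weight values are all illustrative assumptions, not part of any particular connectionist model.

```python
import math


def sigmoid(x: float) -> float:
    """Squash a net input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def unit_output(inputs, weights, bias):
    """Activation of one unit: weighted sum of its inputs, then a nonlinearity."""
    net = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(net)


def layer_output(inputs, weight_rows, biases):
    """Activations of a whole layer; each row of weights belongs to one unit."""
    return [unit_output(inputs, row, b) for row, b in zip(weight_rows, biases)]


# Hypothetical network: 2 inputs -> 2 hidden units -> 1 output unit.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

# Activation flows layer by layer: the hidden layer's output feeds the output layer.
hidden = layer_output([1.0, 1.0], hidden_weights, hidden_biases)
output = layer_output(hidden, output_weights, output_biases)
```

Changing any entry in `hidden_weights` or `output_weights` changes `output` for the same input, which is the sense in which the weights determine the network's behavior.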

Learning Algorithms

Connectionist models rely on learning algorithms to adjust the weights of the connections between neurons. One of the most widely used is backpropagation, which propagates an error signal backward through the network, from the output layer toward the input layer, and updates each weight in proportion to its contribution to the error. Other learning algorithms include Hebbian learning, which is based on the principle that "neurons that fire together wire together," and reinforcement learning, which involves learning from the consequences of actions.
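As a rough illustration of two of these rules, the sketch below applies backpropagation to a tiny network with two hidden units and one output unit, and shows a plain Hebbian update for a single weight. The squared-error loss, sigmoid units, absence of bias terms, learning rates, and starting weights are assumptions chosen for brevity. Reinforcement learning is not shown.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_out):
    """Return the hidden activations and the scalar output (no bias terms)."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def backprop_step(x, target, w_hidden, w_out, lr=0.5):
    """One gradient-descent step on the loss 0.5 * (output - target) ** 2."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error signal at the output unit (loss gradient w.r.t. its net input).
    delta_out = (output - target) * output * (1.0 - output)
    # Propagate the error signal backward through the output weights.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    # Move each weight against its gradient: error signal times incoming activation.
    new_w_out = [w - lr * delta_out * h for w, h in zip(w_out, hidden)]
    new_w_hidden = [
        [w - lr * d * xi for w, xi in zip(row, x)]
        for row, d in zip(w_hidden, delta_hidden)
    ]
    return new_w_hidden, new_w_out


def hebbian_step(weight, pre, post, lr=0.1):
    """Plain Hebbian rule: the weight grows when both units are active together."""
    return weight + lr * pre * post


# Hypothetical starting weights and a single training pair.
w_hidden = [[0.1, -0.2], [0.3, 0.4]]
w_out = [0.2, -0.1]
x, target = [1.0, 0.0], 1.0

_, before = forward(x, w_hidden, w_out)
for _ in range(200):
    w_hidden, w_out = backprop_step(x, target, w_hidden, w_out)
_, after = forward(x, w_hidden, w_out)
```

After the loop, `after` should lie closer to `target` than `before` does. Note the contrast between the rules: the backpropagation update depends on an error measured at the output, whereas the Hebbian update uses only the activity of the two units a weight connects.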

Distributed Representations

Connectionist models often use distributed representations, where information is represented by patterns of activation across multiple neurons rather than by individual units. This allows for more robust and flexible representations of information, as well as the ability to generalize from limited data.
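A toy example may help show why distributed patterns are robust. In the sketch below, three concepts are encoded as activation patterns over six shared units, and a damaged pattern is identified by its overlap with the stored ones. The concept names, the pattern values, and the use of cosine similarity are illustrative assumptions.

```python
import math

# Hypothetical activation patterns over six units; the values are illustrative only.
patterns = {
    "cat": [1, 1, 0, 1, 0, 0],
    "dog": [1, 1, 0, 0, 1, 0],
    "car": [0, 0, 1, 0, 0, 1],
}


def cosine(a, b):
    """Cosine similarity between two activation patterns."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(pattern):
    """Name of the stored pattern most similar to the given one."""
    return max(patterns, key=lambda name: cosine(pattern, patterns[name]))


# Silence the first unit of the "cat" pattern to mimic damage or noise.
damaged_cat = [0, 1, 0, 1, 0, 0]
best_match = nearest(damaged_cat)
```

Because no single unit stands for "cat", losing one unit degrades the pattern without erasing it, and `nearest` still recovers the intended concept. The shared units between "cat" and "dog" also make those two patterns more similar to each other than either is to "car", which is one simple way overlap can support generalization.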

Parallel Processing

Connectionist models operate on the principle of parallel processing: many units compute their activations at the same time, each using only the signals it receives over its own connections. This contrasts with traditional symbolic models, which are typically described as applying rules one step at a time. Because the work is spread across many simple units, connectionist models are well suited to tasks that require many constraints to be satisfied at once.
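One way to picture parallel processing in software is a synchronous update, in which every unit computes its next state from the same snapshot of the network. The sketch below assumes a small recurrent network of binary (+1/-1) threshold units whose weights store a single pattern. This particular network, the stored pattern, and the weight rule are illustrative assumptions rather than a model described in this article.

```python
def synchronous_update(state, weights):
    """Compute every unit's next state from the same snapshot of the current state."""
    return [
        1 if sum(w * s for w, s in zip(row, state)) >= 0 else -1
        for row in weights
    ]


# Hypothetical stored pattern; each weight is the product of the two units' stored
# values, with no self-connections (zeros on the diagonal).
stored = [1, -1, 1, -1]
weights = [
    [0 if i == j else stored[i] * stored[j] for j in range(len(stored))]
    for i in range(len(stored))
]

# Start from a corrupted copy of the stored pattern (last unit flipped).
noisy = [1, -1, 1, 1]
recovered = synchronous_update(noisy, weights)
```

On a conventional computer the list comprehension still runs one unit at a time, but no unit's result depends on another unit's new state, so the same computation could be carried out by all units simultaneously.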

Applications

Cognitive Science

In cognitive science, connectionist models have been used to simulate a wide range of cognitive processes, including memory, perception, and language acquisition. For example, they have been used to explain how children learn to recognize and produce speech sounds, how they acquire vocabulary, and how they develop grammatical knowledge.

Neuroscience

Connectionist models have also been applied to the study of the brain and its functions. These models provide insights into how neural circuits give rise to cognitive processes and behaviors. For example, they have been used to study the neural basis of learning and memory, as well as the mechanisms underlying neuroplasticity.

Artificial Intelligence

In artificial intelligence, connectionist models underlie a wide range of applications, including image recognition, natural language processing, and robotics. Deep learning, a subfield of machine learning that trains large neural networks with many layers, has achieved remarkable success in recent years and has driven significant advances in AI capabilities.

Criticisms and Limitations

Despite their successes, connectionist models have faced several criticisms and limitations. One major criticism is that these models often lack interpretability, making it difficult to understand how they arrive at their decisions. Additionally, connectionist models can require large amounts of data and computational resources to train effectively.

Another criticism is that connectionist models may struggle with tasks that require symbolic reasoning and manipulation, such as solving mathematical problems or understanding complex logical relationships. Some researchers argue that a hybrid approach, combining connectionist and symbolic models, may be necessary to achieve a more comprehensive understanding of cognition.

Future Directions

The field of connectionism continues to evolve, with ongoing research aimed at addressing its limitations and expanding its applications. One promising direction is the development of more interpretable neural network models, which can provide insights into the underlying mechanisms of cognition. Additionally, researchers are exploring ways to integrate connectionist models with symbolic reasoning to create more powerful and flexible cognitive architectures.

Another area of interest is the application of connectionist models to understanding and treating neurological and psychiatric disorders. By modeling the neural circuits involved in these conditions, researchers hope to develop more effective interventions and therapies.

See Also

- Artificial Neural Network

- Deep Learning

- Parallel Distributed Processing

- Hebbian Learning

- Reinforcement Learning

- Neuroplasticity

- Image Recognition

- Natural Language Processing

- Robotics