Artificial Neural Network

Introduction

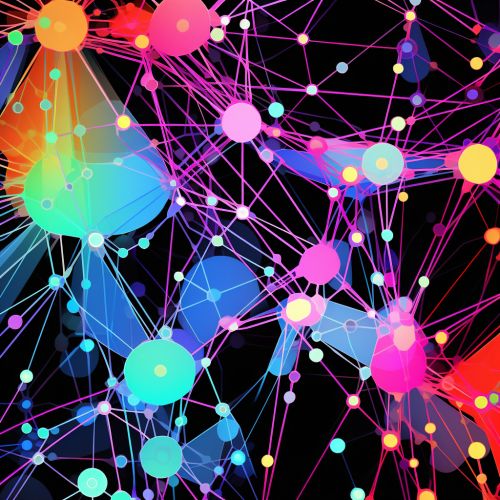

An artificial neural network (ANN) is a computational model inspired by the structure of biological neural networks. An ANN is composed of nodes, or artificial "neurons", arranged in layers: an input layer, one or more hidden layers, and an output layer. In a fully connected network, each node in one layer is connected to every node in the next layer. Each connection, or edge, carries a weight that is adjusted during learning.

Structure and Functioning

An ANN is organized in layers, starting with the input layer, through the hidden layer(s), and ending with the output layer. The input layer receives raw input, similar to the sensory data in biological organisms. The hidden layer(s) perform computations on the inputs, and the output layer produces the final result.

Each node, or artificial neuron, in the network receives input from either the raw data or the preceding layer of nodes. It combines these inputs into a weighted sum, which is passed through an activation function to determine the node's output. This output is then passed to the next layer of nodes.
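The computation described above can be sketched in a few lines of Python. This is only an illustration, not a reference implementation: the function names, the sigmoid activation, the bias term, and the hand-picked weights are all assumptions made for the example.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias, activation=sigmoid):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

def layer_output(inputs, weight_rows, biases, activation=sigmoid):
    """One fully connected layer: each row of weights defines one neuron."""
    return [neuron_output(inputs, w, b, activation)
            for w, b in zip(weight_rows, biases)]

def network_output(inputs, layers):
    """Feed the inputs through each (weight_rows, biases) pair in turn."""
    values = inputs
    for weight_rows, biases in layers:
        values = layer_output(values, weight_rows, biases)
    return values

# Hypothetical network: 2 inputs, 2 hidden neurons, 1 output neuron.
hidden = ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0])
output = ([[1.0, -1.0]], [0.0])
result = network_output([1.0, 1.0], [hidden, output])
print(result)
```

Each layer's output list becomes the next layer's input list, which is all that "passing the output to the next layer" amounts to in this sketch.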

Learning Process

The learning process in an ANN involves adjusting the weights of the connections in the network. This is typically done using a method called backpropagation, which involves propagating the error of the network's output back through the network to adjust the weights.

The backpropagation algorithm calculates the gradient of the error function with respect to the weights of the network. Each weight is then moved a small step in the direction opposite to its gradient, which reduces the error. This process is repeated for many iterations, usually over several full passes through the training data (each pass is called an epoch), until the network's performance on the training data is satisfactory.
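As a rough illustration of this loop, the sketch below trains a network with one hidden layer using backpropagation and full-batch gradient descent. Several things here are assumptions chosen for the example rather than taken from the text: the squared-error function, the sigmoid activations, the bias terms, the learning rate `lr`, the hidden layer size, and the XOR training set.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Return (hidden activations, output) for one input vector."""
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, y

def mean_error(data, params):
    """Average of 0.5 * (output - target)^2 over the data set."""
    return sum(0.5 * (forward(x, params)[1] - t) ** 2
               for x, t in data) / len(data)

def train(data, n_hidden=3, lr=0.5, epochs=2000, seed=0):
    rng = random.Random(seed)
    n_in, n = len(data[0][0]), len(data)
    W1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [0.0] * n_hidden
    W2 = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    b2 = 0.0
    history = [mean_error(data, (W1, b1, W2, b2))]
    for _ in range(epochs):
        # Accumulate gradients over the whole training set (one epoch).
        gW1 = [[0.0] * n_in for _ in range(n_hidden)]
        gb1 = [0.0] * n_hidden
        gW2 = [0.0] * n_hidden
        gb2 = 0.0
        for x, t in data:
            h, y = forward(x, (W1, b1, W2, b2))
            # Error signal at the output node (chain rule through the sigmoid).
            delta_out = (y - t) * y * (1.0 - y)
            gb2 += delta_out
            for j in range(n_hidden):
                # Propagate the error signal back to hidden node j.
                delta_h = delta_out * W2[j] * h[j] * (1.0 - h[j])
                gW2[j] += delta_out * h[j]
                gb1[j] += delta_h
                for i in range(n_in):
                    gW1[j][i] += delta_h * x[i]
        # Step against the averaged gradient.
        for j in range(n_hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            for i in range(n_in):
                W1[j][i] -= lr * gW1[j][i] / n
        b2 -= lr * gb2 / n
        history.append(mean_error(data, (W1, b1, W2, b2)))
    return (W1, b1, W2, b2), history

xor_data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
            ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]
params, history = train(xor_data)
print("error before:", history[0], "after:", history[-1])
```

The recorded `history` should show the error falling as training proceeds. How far it falls depends on the initial weights and the learning rate, so no particular final value is implied here.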

Types of Artificial Neural Networks

There are many different types of ANNs, each with its own specific use case. Some of the most common types include:

- Feedforward Neural Networks: These are the simplest type of ANN, where information only travels forward in the network (from input to output).

- Radial Basis Function (RBF) Neural Networks: These networks use radial basis functions as activation functions. Their applications include power restoration systems, real-time face recognition, and many other pattern recognition tasks.

- Recurrent Neural Networks (RNNs): These networks have connections that form directed cycles. This creates a form of internal memory which allows them to exhibit dynamic temporal behavior.

- Convolutional Neural Networks (CNNs): These are a type of feedforward network that is especially useful in image recognition tasks because it takes advantage of the spatial structure of the data.
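Two of the ideas in the list above lend themselves to a small sketch: the internal memory of a recurrent network and the use of spatial structure in a convolutional one. The function names, the tanh activation, the scalar weights, and the toy inputs are assumptions made for illustration. The "convolution" is the sliding-window sum without kernel flipping (strictly, cross-correlation), which is what many deep learning libraries compute.

```python
import math

def rnn_states(inputs, w_x, w_h, bias=0.0, h0=0.0):
    """Single-unit recurrent cell: each state depends on the current
    input and on the previous state, which acts as memory."""
    states = []
    h = h0
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + bias)
        states.append(h)
    return states

def conv2d_valid(image, kernel):
    """Slide the kernel over the image (no padding) and take the
    weighted sum at each position; the same weights are reused everywhere."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# A pulse followed by silence: with w_h != 0 the pulse still echoes later.
with_memory = rnn_states([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5)
no_memory = rnn_states([1.0, 0.0, 0.0], w_x=1.0, w_h=0.0)

# A tiny image with a vertical edge, and a kernel that responds to it.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edges = conv2d_valid(image, [[-1, 1]])
print(with_memory, no_memory, edges)
```

With `w_h` set to zero the recurrent cell forgets the pulse immediately, while a nonzero `w_h` carries a decaying trace of it forward. The convolution output is nonzero only where the edge sits, regardless of which row it is in.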

Applications of Artificial Neural Networks

ANNs have a wide range of applications and are used throughout machine learning, pattern recognition, and artificial intelligence more broadly. Some specific applications include:

- Speech recognition: ANNs are used to transcribe spoken words into written text.

- Image recognition: ANNs, especially CNNs, are used to identify objects, places, people, writing, and actions in images.

- Natural language processing: ANNs are used to understand and respond to text in a human-like manner.

- Medical diagnosis: ANNs are used to identify patterns in medical data to help diagnose diseases.

Limitations and Criticisms

While ANNs have proven to be powerful tools, they are not without their limitations and criticisms. Some of these include:

- Overfitting: This occurs when an ANN fits the training data too closely, including its noise and quirks, to the point where it performs poorly on new, unseen data.

- Interpretability: ANNs, particularly deep networks, are often criticized for being "black boxes," as their internal workings can be difficult to interpret.

- Computational cost: Training ANNs, particularly large ones, can be expensive in terms of both time and computing resources.
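One rough way to get a feel for the cost of a network is to count its trainable parameters. The sketch below does this for a fully connected network, assuming one bias per neuron; the function name and the layer sizes are hypothetical, and parameter count is only a proxy for training cost, which also depends on the amount of data and the number of epochs.

```python
def count_parameters(layer_sizes):
    """Weights plus biases for a fully connected network.

    Each layer with n_in inputs and n_out neurons has n_in * n_out
    weights and n_out biases.
    """
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

small = count_parameters([784, 128, 10])         # 101770
wide = count_parameters([784, 1024, 1024, 10])
print(small, wide)
```

Widening the hidden layers increases the count quickly, because the number of weights between two layers grows with the product of their sizes.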

See Also

- Deep Learning

- Machine Learning

- Artificial Intelligence