Hebbian Learning

Introduction

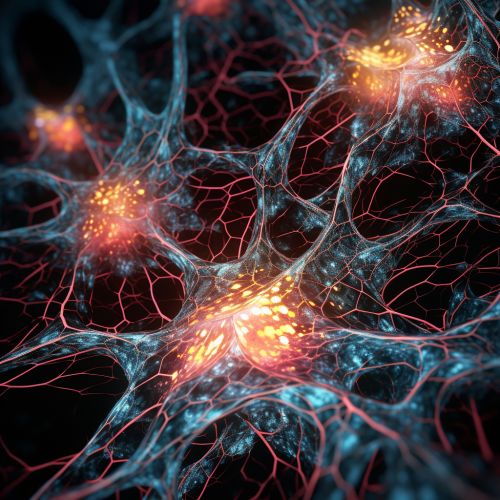

Hebbian learning is a theory proposed by Donald O. Hebb in his 1949 book The Organization of Behavior. It describes a mechanism for synaptic plasticity in which synaptic efficacy increases when a presynaptic cell repeatedly and persistently stimulates a postsynaptic cell. The theory is often summarized by the phrase "Cells that fire together, wire together." It is foundational in neuroscience, particularly for understanding how neurons in the brain adapt during learning.

Hebb's Postulate

Hebb's postulate, also known as Hebb's rule, states that when two cells are active at the same time, the synaptic connection between them is strengthened. It offers a biological basis for learning and memory in the brain. The same principle is used in artificial intelligence and machine learning to adjust the connection weights between artificial neurons.
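In its simplest mathematical form, the rule is commonly written as a weight change proportional to the product of presynaptic and postsynaptic activity. The following is a minimal sketch of that form; the function name `hebbian_update`, the learning rate of 0.1, and the use of 0.0 and 1.0 for "silent" and "active" are illustrative assumptions, not part of Hebb's original formulation.

```python
def hebbian_update(weight, pre, post, learning_rate=0.1):
    """Return the weight after one Hebbian step: w + eta * pre * post.

    `pre` and `post` are activity levels of the two cells; the names and
    the default learning rate are assumptions made for this sketch.
    """
    return weight + learning_rate * pre * post


w_start = 0.0

# Both cells active together: the connection strengthens.
w_coactive = hebbian_update(w_start, pre=1.0, post=1.0)

# Presynaptic cell silent: the product is zero, so the weight is unchanged.
w_silent = hebbian_update(w_coactive, pre=0.0, post=1.0)

print(w_coactive, w_silent)
```

Note that this plain form can only leave a weight unchanged or move it in the direction of the activity product; it has no built-in mechanism for decay.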

Biological Basis of Hebbian Learning

The biological basis of Hebbian learning involves long-term potentiation (LTP) and long-term depression (LTD). LTP is a long-lasting strengthening of the synapse between two neurons that results from stimulating them synchronously. It is one of several phenomena underlying synaptic plasticity, the ability of synapses to change their strength. LTD, by contrast, is a long-lasting decrease in synaptic strength that occurs when the neurons are activated asynchronously.

Hebbian Learning in Artificial Intelligence

Hebbian learning has also been applied in artificial intelligence, where it serves as an unsupervised learning rule for neural networks: connection weights are adjusted from the correlations in the input activity alone, without labeled targets or an error signal. Networks trained this way can be used for tasks such as pattern recognition and data classification.
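As one illustration of Hebbian weight adjustment applied to pattern recognition, the sketch below stores two patterns in a small auto-associative network and then recovers one of them from a corrupted copy. The bipolar (+1/-1) encoding, the zeroed self-connections, the single synchronous recall step, and the names `train_hebbian` and `recall` are all assumptions chosen for this example rather than a canonical implementation.

```python
def train_hebbian(patterns):
    """Build a weight matrix by summing Hebbian co-activity terms.

    Each weight w[i][j] accumulates p[i] * p[j] over the stored patterns,
    so units that are active together end up positively connected.
    """
    n = len(patterns[0])
    weights = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:  # no self-connections (a common convention)
                    weights[i][j] += p[i] * p[j] / len(patterns)
    return weights


def recall(weights, probe):
    """Run one synchronous update: each unit takes the sign of its input."""
    output = []
    for row in weights:
        total = sum(w * x for w, x in zip(row, probe))
        output.append(1 if total >= 0 else -1)
    return output


stored = [
    [1, -1, 1, -1, 1, -1],
    [1, 1, 1, -1, -1, -1],
]
weights = train_hebbian(stored)

# The first stored pattern with its last element flipped.
noisy = [1, -1, 1, -1, 1, 1]
recalled = recall(weights, noisy)

print(recalled == stored[0])
```

No labels or error signals are used during training; the weights depend only on which units were active together, which is what makes the rule unsupervised.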

Hebbian Learning and Memory

Hebbian learning plays a crucial role in memory formation and consolidation. The strengthening of synapses, as postulated by Hebb, is the basis for the creation of memory traces in the brain. The gradual stabilization of these strengthened connections over time, a process known as memory consolidation, is what allows memories to be stored in the long term.

Criticisms and Limitations of Hebbian Learning

While Hebbian learning provides a robust model for understanding synaptic plasticity and memory formation, it has criticisms and limitations. One of the main criticisms is that it does not account for the role of inhibitory synapses in learning and memory. Furthermore, because correlated activity strengthens a synapse and a stronger synapse in turn produces more correlated activity, Hebbian learning forms a positive feedback loop. This can lead to runaway excitation in neural networks: neurons become overly active and synapses reach a state of saturation in which no further learning can occur.
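The runaway behavior can be seen in a toy simulation. The sketch below assumes a single linear neuron with one constantly active input, a starting weight of 0.1, and a learning rate of 0.5; the optional `max_weight` ceiling is a hypothetical stand-in for saturation, not a model of any specific biological limit.

```python
def run_plain_hebb(steps, learning_rate=0.5, max_weight=None):
    """Apply the plain Hebbian rule repeatedly to one synapse.

    The neuron is linear (post = weight * pre), so a larger weight yields
    a larger response, which yields a larger weight increase.
    """
    weight = 0.1
    pre = 1.0  # presynaptic input held constantly active
    history = []
    for _ in range(steps):
        post = weight * pre
        weight += learning_rate * pre * post
        if max_weight is not None:
            # Hypothetical hard ceiling standing in for saturation.
            weight = min(weight, max_weight)
        history.append(weight)
    return history


unbounded = run_plain_hebb(20)
clipped = run_plain_hebb(20, max_weight=1.0)

# Without a ceiling the weight grows by a constant factor every step.
print(unbounded[-1])
# With a ceiling the weight pins at the maximum and stops changing.
print(clipped[-1])
```

In the unbounded run the weight is multiplied by 1.5 on every step, so it grows exponentially. In the clipped run it reaches the ceiling within a few steps and stays there, after which further input no longer changes the synapse.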