Parallel Distributed Processing

Introduction

Parallel Distributed Processing (PDP) is a computational framework in which information is represented as patterns of activity across many simple processing units, and is processed by those units operating in parallel. PDP models are a family of neural network models widely used in artificial intelligence and cognitive science research.

Overview

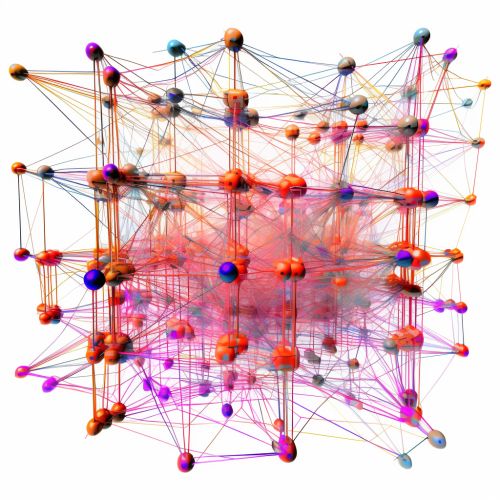

PDP models are based on the concept of distributed representation, in which each concept is represented by a pattern of activity across many units, and each unit takes part in representing many concepts. This is in contrast to local representation, where each unit in the network represents a single concept or piece of information. PDP models are also characterized by parallel processing: many units compute and update their activations at the same time rather than one step after another.

History

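The concept of PDP emerged in the 1980s as part of the connectionist movement in cognitive science, and was popularized by the two-volume work Parallel Distributed Processing: Explorations in the Microstructure of Cognition (1986) by David Rumelhart, James McClelland, and the PDP Research Group. The movement was a reaction against the symbolic models of cognition that dominated the field at the time. The PDP approach was inspired by the structure and function of the brain, and it aimed to provide a more biologically plausible model of cognition.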

Structure of PDP Models

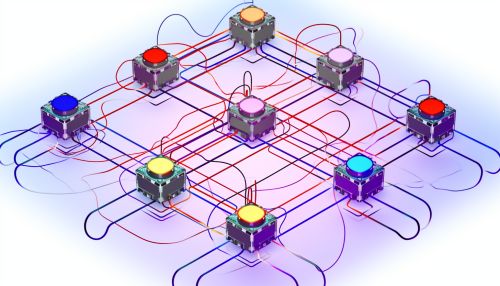

A PDP model consists of a large number of simple processing units, often referred to as nodes or neurons. These units are interconnected in a network, and each connection has a weight associated with it. The weight determines how strongly, and in which direction, one unit influences another: a positive weight is excitatory and a negative weight is inhibitory. The units in a PDP model are often organized into layers, including an input layer, one or more hidden layers, and an output layer.
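
One possible way to hold such a layered network in memory is a list of weight matrices, one per pair of adjacent layers. This is only a sketch: the function name `make_network`, the layer sizes, and the random initialization range are assumptions chosen for illustration.

```python
import random


def make_network(layer_sizes, seed=0):
    """Build one weight matrix per pair of adjacent layers.

    weights[k][j][i] is the weight on the connection from unit i in
    layer k to unit j in layer k + 1.
    """
    rng = random.Random(seed)
    weights = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        matrix = [
            [rng.uniform(-0.5, 0.5) for _ in range(n_in)]
            for _ in range(n_out)
        ]
        weights.append(matrix)
    return weights


# A network with 3 input units, 4 hidden units, and 2 output units.
network = make_network([3, 4, 2])
for k, matrix in enumerate(network):
    print(f"layer {k} -> layer {k + 1}: {len(matrix)} x {len(matrix[0])} weights")
```

Each row of a matrix collects all the incoming weights of one receiving unit, which keeps the later weighted-sum computation simple.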

Functioning of PDP Models

In a PDP model, information is processed by propagating activation through the network. Each unit computes its net input as the weighted sum of the outputs of the units connected to it, then applies its activation function to that sum to produce its own output. The output is then passed on to the units it is connected to. In a layered feedforward network, this process continues until the output layer is reached. The activation function of a unit is typically a nonlinear function, such as the sigmoid or hyperbolic tangent function.
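
The propagation of activation described above can be sketched for a small feedforward network with sigmoid units. The weights, the bias terms, and the function names are illustrative assumptions; the text does not mention biases, which are included here only because they are common in practice.

```python
import math


def sigmoid(x):
    """Logistic activation function, mapping any real input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer_output(inputs, weights, biases):
    """Compute the outputs of one layer from the outputs of the previous one."""
    outputs = []
    for weight_row, bias in zip(weights, biases):
        # Net input: weighted sum of incoming activations plus a bias.
        net_input = sum(w * x for w, x in zip(weight_row, inputs)) + bias
        outputs.append(sigmoid(net_input))
    return outputs


def forward(inputs, layers):
    """Propagate activation layer by layer until the output layer is reached."""
    activation = inputs
    for weights, biases in layers:
        activation = layer_output(activation, weights, biases)
    return activation


# Hand-picked weights for a 2-input, 2-hidden, 1-output network.
layers = [
    ([[0.5, -0.5], [1.0, 1.0]], [0.0, -1.0]),  # input layer -> hidden layer
    ([[1.0, -1.0]], [0.0]),                    # hidden layer -> output layer
]
output = forward([1.0, 0.0], layers)
print(output)
```

The units inside `layer_output` do not depend on one another, so on parallel hardware they could all be evaluated at once; the loop here is only a sequential stand-in.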

Learning in PDP Models

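Learning in PDP models consists of adjusting the connection weights, most commonly with an algorithm called backpropagation, although other rules such as Hebbian learning and the delta rule are also used. In backpropagation, the model is presented with a set of input-output pairs, and the weights of the connections are adjusted to minimize the difference between the model's output and the desired output. Each weight is changed by a small step in the direction opposite to the gradient of the error with respect to that weight. The gradient is computed using the chain rule of differentiation, working backward from the output layer toward the input layer.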
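
A minimal sketch of this procedure follows, assuming a network with one hidden layer of sigmoid units, a squared-error measure, and online (pattern-by-pattern) weight updates. The toy task (logical OR), the learning rate, the number of epochs, and all function names are assumptions made for illustration.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def init_params(n_in, n_hidden, seed=0):
    """Small random weights and zero biases for an n_in -> n_hidden -> 1 net."""
    rng = random.Random(seed)
    return {
        "w_hidden": [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                     for _ in range(n_hidden)],
        "b_hidden": [0.0] * n_hidden,
        "w_out": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "b_out": 0.0,
    }


def forward(x, p):
    """Return the hidden activations and the single output activation."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(p["w_hidden"], p["b_hidden"])]
    output = sigmoid(sum(w * h for w, h in zip(p["w_out"], hidden)) + p["b_out"])
    return hidden, output


def mean_squared_error(data, p):
    return sum((target - forward(x, p)[1]) ** 2 for x, target in data) / len(data)


def train(data, p, epochs=2000, lr=0.5):
    """Adjust the weights in place by gradient descent on the squared error."""
    for _ in range(epochs):
        for x, target in data:
            hidden, output = forward(x, p)
            # Error for one pattern: E = 0.5 * (output - target) ** 2.
            # Chain rule at the output unit: dE/dnet = dE/dout * dout/dnet.
            delta_out = (output - target) * output * (1.0 - output)
            # Chain rule one layer back, using the weights before the update.
            delta_hidden = [delta_out * p["w_out"][j] * h * (1.0 - h)
                            for j, h in enumerate(hidden)]
            # Step each weight against its gradient.
            for j, h in enumerate(hidden):
                p["w_out"][j] -= lr * delta_out * h
                p["b_hidden"][j] -= lr * delta_hidden[j]
                for i, xi in enumerate(x):
                    p["w_hidden"][j][i] -= lr * delta_hidden[j] * xi
            p["b_out"] -= lr * delta_out


# Input-output pairs for logical OR, used here as a toy training set.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0),
        ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
params = init_params(n_in=2, n_hidden=2)
initial_error = mean_squared_error(data, params)
train(data, params)
final_error = mean_squared_error(data, params)
print(f"error before training: {initial_error:.4f}")
print(f"error after training:  {final_error:.4f}")
```

The two `delta` quantities are the error gradients with respect to each unit's net input; multiplying a delta by the activation feeding a connection gives the gradient for that connection's weight.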

Applications of PDP Models

PDP models have been used in a wide range of applications, including pattern recognition, speech recognition, and natural language processing. They have also been used to model various cognitive processes, such as memory, perception, and language comprehension.

Advantages and Limitations of PDP Models

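One of the main advantages of PDP models is their ability to learn from examples and generalize to new inputs that resemble them. They are also robust to noise and can handle incomplete or ambiguous input, because a pattern spread across many units degrades gradually rather than failing outright when some units are wrong or missing. However, PDP models also have some limitations. They often require a large amount of data to learn effectively, and they can be difficult to interpret because, in a distributed representation, no single unit or weight corresponds to a single concept.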