Kernel trick

Introduction

The kernel trick is a technique that uses a kernel function to work with data as if it had been mapped into a higher-dimensional space, so that a linear separation in that space corresponds to a non-linear one in the original space. It is widely used in machine learning, particularly in support vector machines (SVMs), to handle data that is not linearly separable.

Kernel Functions

A kernel function takes two vectors from the input space and returns the dot product of their images in a feature space, which is typically higher-dimensional. Its value can be read as a measure of similarity between the two data points. Any symmetric function that satisfies Mercer's condition is a valid kernel; the condition ensures that the kernel matrix built from any finite set of points is positive semi-definite.
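The properties of the kernel matrix can be spot-checked numerically. The following is a minimal sketch using only the Python standard library; the RBF kernel, the `gamma` value, the random sample points, and all function names are illustrative assumptions. A finite number of random trials is a sanity check, not a proof of positive semi-definiteness.

```python
import math
import random


def rbf_kernel(x, y, gamma=0.5):
    """Gaussian (RBF) kernel; gamma=0.5 is an arbitrary illustrative value."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def gram_matrix(points, kernel):
    """Kernel matrix K with K[i][j] = kernel(points[i], points[j])."""
    return [[kernel(p, q) for q in points] for p in points]


def quadratic_form(K, z):
    """Compute z^T K z, which is non-negative for every z when K is PSD."""
    n = len(K)
    return sum(z[i] * K[i][j] * z[j] for i in range(n) for j in range(n))


random.seed(0)
n = 6
points = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(n)]
K = gram_matrix(points, rbf_kernel)

# Symmetry: K[i][j] should match K[j][i].
is_symmetric = all(
    abs(K[i][j] - K[j][i]) < 1e-12 for i in range(n) for j in range(n)
)

# Spot check of positive semi-definiteness on random vectors z.
min_form = min(
    quadratic_form(K, [random.gauss(0, 1) for _ in range(n)])
    for _ in range(1000)
)

print(is_symmetric, min_form >= -1e-9)
```

A small negative tolerance is used in the last comparison to allow for floating-point rounding.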

The Kernel Trick

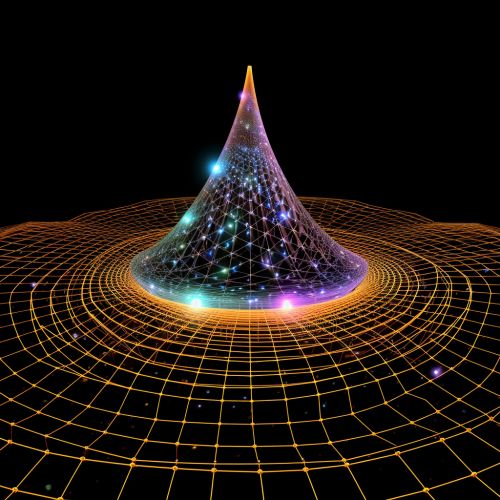

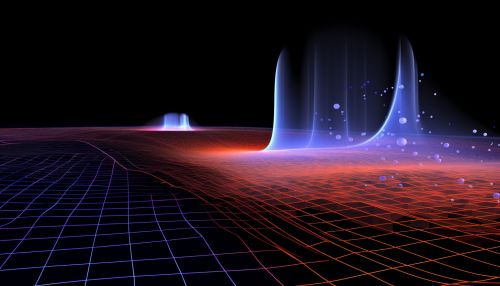

The kernel trick is a method that allows linear algorithms to be applied to non-linear problems. It does this by implicitly mapping the input data into a higher-dimensional space where the data may become linearly separable. The mapped vectors are never computed; a kernel function returns their dot product instead.

The kernel trick is particularly useful because it allows us to perform computations in the high-dimensional space without explicitly computing the mapping, even when that space has a very large or infinite number of dimensions. This is possible because the kernel function computes the dot product of the transformed vectors directly from the original vectors. It applies to any algorithm that accesses the data only through dot products, since each dot product can then be replaced by a kernel evaluation.
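A small worked case makes this concrete. The sketch below assumes 2-D inputs and the homogeneous degree-2 polynomial kernel, for which an explicit 3-D feature map is known; the function names are illustrative. It compares the kernel value, computed entirely in the input space, with the dot product taken after mapping both points.

```python
import math


def poly2_kernel(x, y):
    """Homogeneous degree-2 polynomial kernel: (x . y) ** 2."""
    return (x[0] * y[0] + x[1] * y[1]) ** 2


def explicit_map(x):
    """Explicit feature map matching poly2_kernel for 2-D inputs."""
    return (x[0] ** 2, math.sqrt(2) * x[0] * x[1], x[1] ** 2)


def dot(u, v):
    """Ordinary dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


x, y = (1.0, 2.0), (3.0, 4.0)

via_kernel = poly2_kernel(x, y)                      # works in 2-D only
via_mapping = dot(explicit_map(x), explicit_map(y))  # goes through 3-D

print(via_kernel, via_mapping)
```

Both values come to 121, up to floating-point rounding in the mapped version. For higher degrees or for the RBF kernel the explicit map grows very large or becomes infinite-dimensional, while the cost of the kernel evaluation stays tied to the input dimension.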

Applications of the Kernel Trick

The kernel trick is widely used in machine learning, particularly in support vector machines. SVMs are a type of supervised learning model that can be used for classification, regression, and outlier detection. The kernel trick allows SVMs to handle data that is not linearly separable by implicitly working in a higher-dimensional space where a separating hyperplane may exist.

The kernel trick is also used in other machine learning algorithms, such as principal component analysis (PCA), canonical correlation analysis (CCA), and ridge regression. In these algorithms, replacing dot products with kernel evaluations yields non-linear variants that can capture structure a linear model would miss.
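To illustrate how a linear algorithm is kernelized, the sketch below uses a kernel perceptron. This is a deliberately simpler algorithm than the SVM or the methods named above, chosen because it fits in a few lines of standard-library Python. The XOR data set, the polynomial kernel parameters, and all function names are assumptions made for the example.

```python
def poly_kernel(x, y, degree=2, coef0=1.0):
    """Inhomogeneous polynomial kernel: (x . y + coef0) ** degree."""
    return (sum(a * b for a, b in zip(x, y)) + coef0) ** degree


def decision(X, y, alpha, kernel, x):
    """Score of x: kernel-weighted sum over the training points."""
    return sum(a * yj * kernel(xj, x) for a, yj, xj in zip(alpha, y, X))


def train_kernel_perceptron(X, y, kernel, max_epochs=1000):
    """Return alpha, the number of mistakes made on each training point."""
    alpha = [0] * len(X)
    for _ in range(max_epochs):
        mistakes = 0
        for i, (xi, yi) in enumerate(zip(X, y)):
            # Misclassified (or on the boundary): strengthen this point.
            if yi * decision(X, y, alpha, kernel, xi) <= 0:
                alpha[i] += 1
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: converged
            break
    return alpha


def predict(X, y, alpha, kernel, x):
    """Predicted label in {-1, +1}."""
    return 1 if decision(X, y, alpha, kernel, x) > 0 else -1


# XOR: not linearly separable in the original 2-D space.
X = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
y = [-1, 1, 1, -1]

alpha = train_kernel_perceptron(X, y, poly_kernel)
predictions = [predict(X, y, alpha, poly_kernel, x) for x in X]
print(predictions)
```

The training loop never forms a weight vector in the feature space. It only stores one counter per training point and evaluates the kernel, which is the same pattern that kernel SVMs and the other kernelized methods rely on.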

Types of Kernel Functions

There are several types of kernel functions that can be used with the kernel trick. These include:

- Linear kernel: This is the simplest type of kernel, which does not perform any transformation on the data. It simply computes the dot product of the input vectors.

- Polynomial kernel: This kernel computes the dot product of the input vectors, adds a constant, and raises the result to a specified power. The corresponding feature space contains polynomial combinations of the input features.

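- Radial basis function (RBF) kernel: This kernel computes the squared Euclidean distance between the input vectors, multiplies it by a negative scale factor, and applies the exponential function. The value is 1 for identical points and decays toward 0 as the points move apart. The corresponding feature space is infinite-dimensional.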

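- Sigmoid kernel: This kernel computes the dot product of the input vectors, scales and shifts it, and applies a sigmoid function, usually the hyperbolic tangent. Unlike the kernels above, it is not positive semi-definite for every choice of parameters, so it does not always satisfy Mercer's condition.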
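The four kernels listed above can be sketched in a few lines of standard-library Python. The parameter names (`degree`, `coef0`, `gamma`, `scale`) and their default values are illustrative assumptions, not fixed conventions.

```python
import math


def dot(x, y):
    """Ordinary dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(x, y))


def linear_kernel(x, y):
    """Plain dot product; no implicit transformation."""
    return dot(x, y)


def polynomial_kernel(x, y, degree=3, coef0=1.0):
    """Add the constant first, then raise to the power."""
    return (dot(x, y) + coef0) ** degree


def rbf_kernel(x, y, gamma=1.0):
    """exp(-gamma * squared Euclidean distance), with gamma > 0."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-gamma * sq_dist)


def sigmoid_kernel(x, y, scale=0.01, coef0=0.0):
    """tanh of a scaled and shifted dot product; not always PSD."""
    return math.tanh(scale * dot(x, y) + coef0)


x, y = [1.0, 2.0], [3.0, 4.0]
print(linear_kernel(x, y))
print(polynomial_kernel(x, y, degree=2))
print(rbf_kernel(x, y, gamma=0.5))
print(sigmoid_kernel(x, y))
```

Each function takes two vectors from the input space and returns a single number, so any of them can be passed to an algorithm that expects a kernel.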

Conclusion

The kernel trick is a powerful technique in machine learning that allows linear algorithms to be applied to non-linear problems. By replacing dot products with a kernel function, it lets an algorithm fit a linear model in a higher-dimensional space without ever computing the mapping into that space. This method is widely used in support vector machines and other machine learning algorithms to handle data that is not linearly separable.