Principal Component Analysis

Introduction

Principal Component Analysis (PCA) is a statistical procedure that uses an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components. This transformation is defined in such a way that the first principal component has the largest possible variance, and each succeeding component in turn has the highest variance possible under the constraint that it is orthogonal to the preceding components. The resulting vectors are an uncorrelated orthogonal basis set. PCA is sensitive to the relative scaling of the original variables.

Mathematical Definition

PCA is defined mathematically as an orthogonal linear transformation that transforms the data to a new coordinate system such that the greatest variance by some projection of the data comes to lie on the first coordinate (called the first principal component), the second greatest variance on the second coordinate, and so on.

Consider a data matrix, X, with column-wise zero empirical mean (the sample mean of each column has been shifted to zero), where each of the n rows represents a different repetition of the experiment, and each of the p columns gives a particular kind of feature (say, the result from a particular sensor).
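The centering step described above can be sketched in a few lines of NumPy. The array `X_raw` and its values are hypothetical toy data made up for illustration; only the mean-subtraction itself reflects the text.

```python
import numpy as np

# Hypothetical toy data: n = 5 observations (rows), p = 3 features (columns).
X_raw = np.array([
    [2.0, 4.0, 1.0],
    [3.0, 6.0, 0.0],
    [4.0, 7.0, 2.0],
    [5.0, 9.0, 1.0],
    [6.0, 11.0, 3.0],
])

# Subtract each column's sample mean so that every column of X
# has zero empirical mean.
X = X_raw - X_raw.mean(axis=0)

n, p = X.shape  # number of observations, number of features
```

After this step, `X.mean(axis=0)` is zero up to floating-point error, which is the precondition assumed in the rest of this section.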

Mathematically, the transformation is defined by a set of p-dimensional vectors of weights, or loadings, that map each row vector of X to a new vector of principal component scores, given by

y_k = x_k W,

where x_k is the k-th row of X and the columns of W are the weight vectors. Each score is thus a linear combination of the variables (columns) of X.
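As a rough illustration of the mapping y_k = x_k W, the sketch below builds W for a small random matrix and computes the scores. It assumes that the columns of W are the eigenvectors of X^T X ordered by decreasing eigenvalue, which is one common way to obtain the weights; the random data and the variable names are hypothetical.

```python
import numpy as np

# Hypothetical data: 6 observations, 3 features, centered column-wise.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 3))
X = X - X.mean(axis=0)

# Assumption: the weight vectors are the eigenvectors of X^T X.
# eigh returns eigenvalues in ascending order, so reorder to descending.
eigvals, eigvecs = np.linalg.eigh(X.T @ X)
order = np.argsort(eigvals)[::-1]
W = eigvecs[:, order]  # each column is one p-dimensional weight vector

# Scores for a single observation (row k of X) ...
k = 0
y_k = X[k] @ W

# ... and for all observations at once.
Y = X @ W
```

Row `k` of `Y` equals `y_k`, and because the columns of `W` are orthonormal, the columns of `Y` are mutually uncorrelated.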

Applications of PCA

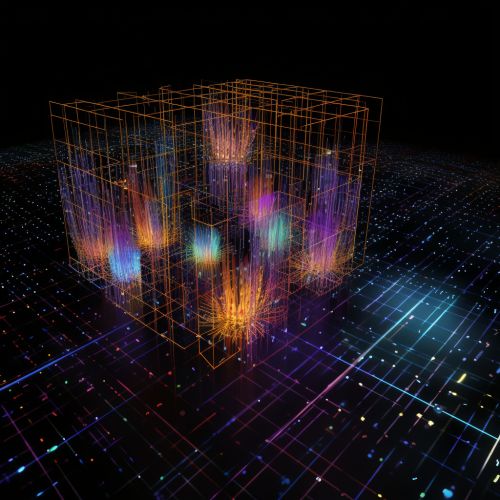

PCA is predominantly used as a dimensionality reduction technique in domains such as facial recognition, computer vision, and image compression. It is also used to find patterns in high-dimensional data in fields such as finance, data mining, bioinformatics, and psychology.

PCA Algorithm

The PCA algorithm can be summarized in the following steps:

1. Standardize the data.
2. Obtain the eigenvectors and eigenvalues from the covariance matrix or correlation matrix, or perform Singular Value Decomposition.
3. Sort the eigenvalues in descending order and choose the k eigenvectors that correspond to the k largest eigenvalues, where k is the number of dimensions of the new feature subspace (k ≤ p, the number of original features).
4. Construct the projection matrix W from the selected k eigenvectors.
5. Transform the original dataset X via W to obtain a k-dimensional feature subspace Y.
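The steps above can be sketched with NumPy as follows. This is a minimal illustration using the covariance-matrix route rather than SVD; the function name `pca`, its return values, and the random test data are assumptions made for this example, and the sketch assumes no feature has zero variance.

```python
import numpy as np

def pca(X, k):
    """Minimal PCA sketch via eigendecomposition of the covariance matrix."""
    X = np.asarray(X, dtype=float)

    # Step 1: standardize each feature to zero mean and unit sample variance.
    # Assumes no column is constant (otherwise this divides by zero).
    X_std = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)

    # Step 2: eigenvalues and eigenvectors of the covariance matrix.
    cov = np.cov(X_std, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues come back ascending

    # Step 3: indices of the k largest eigenvalues, in descending order.
    order = np.argsort(eigvals)[::-1][:k]

    # Step 4: projection matrix W, whose columns are the chosen eigenvectors.
    W = eigvecs[:, order]

    # Step 5: project the standardized data onto the k-dimensional subspace.
    Y = X_std @ W
    return Y, W, eigvals[order]

# Hypothetical usage: 100 observations of 4 features, two of them correlated.
rng = np.random.default_rng(42)
X = rng.normal(size=(100, 4))
X[:, 1] = 2.0 * X[:, 0] + 0.1 * X[:, 1]

Y, W, top_eigvals = pca(X, k=2)
```

Here `Y` has one row per observation and `k` columns, and the sample variance of each column of `Y` equals the corresponding eigenvalue. For large or ill-conditioned data, the SVD route mentioned in step 2 is often preferred for numerical stability.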

Limitations of PCA

While PCA is a powerful technique for data analysis and interpretation, it does have its limitations. These include:

- PCA assumes that the principal components are orthogonal.
- PCA captures only linear relationships, so it does not work well when the structure of the data is nonlinear.
- PCA is sensitive to the scale of the variables, so a normalization step is usually needed before applying it.
- PCA is not robust to outliers.
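The sensitivity to scale can be illustrated with a small experiment. The sketch below uses hypothetical random data in which the second feature is recorded in units 1000 times smaller than the first (so its numeric values are 1000 times larger); the helper `first_component` is an assumption made for this example.

```python
import numpy as np

def first_component(X):
    """Return the unit weight vector of the first principal component."""
    Xc = X - X.mean(axis=0)
    eigvals, eigvecs = np.linalg.eigh(np.cov(Xc, rowvar=False))
    return eigvecs[:, np.argmax(eigvals)]

# Hypothetical data: two independent features with very different scales.
rng = np.random.default_rng(1)
a = rng.normal(size=200)
b = rng.normal(size=200)
X = np.column_stack([a, 1000.0 * b])

# Without normalization, the large-scale feature dominates the first component.
w_raw = first_component(X)

# After dividing each feature by its sample standard deviation,
# neither feature dominates purely because of its units.
w_std = first_component(X / X.std(axis=0, ddof=1))
```

On the raw data, `w_raw` points almost entirely along the second feature, even though that feature carries no more structure than the first. After standardization, both features contribute with comparable weight.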

See Also

- Linear Discriminant Analysis
- Singular Value Decomposition
- Factor Analysis