The Mathematics of Machine Learning Algorithms

Introduction

Machine learning (ML) is a rapidly growing field of computer science that focuses on the development of algorithms that allow computers to learn from and make decisions or predictions based on data. The mathematics of machine learning algorithms is a crucial aspect of this field, as it provides the foundation for understanding, developing, and improving these algorithms. This article delves into the mathematical principles that underpin machine learning algorithms, including probability theory, linear algebra, calculus, and optimization theory.

Probability Theory

Probability theory is the branch of mathematics concerned with the analysis of random phenomena. It is fundamental to machine learning because it provides a framework for modeling and reasoning about uncertainty. Many machine learning algorithms, such as naive Bayes classifiers, hidden Markov models, and Gaussian mixture models, are built on probabilistic models.

Bayes' Theorem

At the heart of many machine learning algorithms is Bayes' theorem, a principle in probability theory that describes how to update the probability of a hypothesis H after observing evidence E: P(H | E) = P(E | H) P(H) / P(E). Here P(H) is the prior, P(E | H) is the likelihood, and P(H | E) is the posterior. The theorem is used extensively in machine learning for tasks such as classification, regression, and anomaly detection.
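As a small illustration, the Python sketch below applies Bayes' theorem to a hypothetical alert raised by an anomaly detector. The function name `posterior` and all of the rates are made-up assumptions for the example, not values from any real system.

```python
def posterior(prior: float, likelihood: float, false_positive_rate: float) -> float:
    """Return P(H | E) via Bayes' theorem for a binary hypothesis H.

    prior               -- P(H), belief in the hypothesis before seeing evidence
    likelihood          -- P(E | H), probability of the evidence if H is true
    false_positive_rate -- P(E | not H), probability of the evidence if H is false
    """
    # Law of total probability: P(E) = P(E|H)P(H) + P(E|not H)P(not H)
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    # Bayes' theorem: P(H|E) = P(E|H)P(H) / P(E)
    return likelihood * prior / evidence


# Hypothetical detector: 1% of events are anomalies, the detector fires on
# 95% of anomalies and on 5% of normal events.
p = posterior(prior=0.01, likelihood=0.95, false_positive_rate=0.05)
print(f"P(anomaly | alert) = {p:.3f}")
```

Even with a fairly accurate detector, the posterior comes out at only about 0.16, because anomalies are rare to begin with: the prior matters as much as the likelihood.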

Probability Distributions

Understanding different probability distributions, such as the Gaussian or normal distribution, binomial distribution, and Poisson distribution, is crucial in machine learning. These distributions are often used to model the underlying data in machine learning tasks.
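The sketch below evaluates the three distributions named above using only Python's standard `math` module. The function names are our own; in practice a library such as SciPy's `scipy.stats` would normally be used instead.

```python
import math


def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    """Density of the normal distribution N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def binomial_pmf(k: int, n: int, p: float) -> float:
    """Probability of exactly k successes in n independent trials with success probability p."""
    return math.comb(n, k) * p**k * (1.0 - p) ** (n - k)


def poisson_pmf(k: int, lam: float) -> float:
    """Probability of observing k events when events occur at an average rate lam."""
    return lam**k * math.exp(-lam) / math.factorial(k)


print(gaussian_pdf(0.0, mu=0.0, sigma=1.0))  # peak of the standard normal density
print(binomial_pmf(3, n=10, p=0.5))          # 3 heads in 10 fair coin flips
print(poisson_pmf(2, lam=3.0))               # 2 events when 3 are expected
```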

Linear Algebra

Linear algebra is another fundamental area of mathematics in machine learning. It deals with vectors, vector spaces, linear transformations, and matrices. These concepts are used extensively in machine learning algorithms to represent data and perform computations.

Vectors and Matrices

Vectors and matrices are fundamental to many machine learning algorithms. For example, in Support Vector Machines (SVMs), data points are represented as vectors in a high-dimensional feature space, and the SVM algorithm finds the hyperplane that separates the classes with the largest margin, that is, the greatest distance to the nearest training points of each class.
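To make the vector picture concrete, here is a minimal linear SVM sketch in plain Python. It assumes a linear kernel and labels in {-1, +1}, and it trains by subgradient descent on the regularized hinge loss rather than with the quadratic-programming solvers that SVM libraries typically use. The toy data and the hyperparameters `lam`, `lr`, and `epochs` are made up for illustration.

```python
from typing import List, Tuple

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def train_linear_svm(
    xs: List[Vector], ys: List[int], lam: float = 0.01, lr: float = 0.1, epochs: int = 500
) -> Tuple[Vector, float]:
    """Fit w, b by subgradient descent on the regularized hinge loss
    (lam/2)*||w||^2 + mean(max(0, 1 - y*(w.x + b))), with labels y in {-1, +1}."""
    n, d = len(xs), len(xs[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        # Start from the gradient of the regularization term.
        grad_w = [lam * wj for wj in w]
        grad_b = 0.0
        for x, y in zip(xs, ys):
            if y * (dot(w, x) + b) < 1.0:  # point inside the margin or misclassified
                for j in range(d):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w: Vector, b: float, x: Vector) -> int:
    """Classify x by which side of the hyperplane w.x + b = 0 it falls on."""
    return 1 if dot(w, x) + b >= 0.0 else -1


# Toy, linearly separable data: each point is a vector in R^2.
xs = [[2.0, 2.0], [3.0, 3.0], [2.5, 3.5], [-2.0, -1.0], [-3.0, -2.0], [-1.5, -2.5]]
ys = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(xs, ys)
print("w =", w, "b =", b)
print([predict(w, b, x) for x in xs])
```

The regularization term keeps the weight vector small, and minimizing ||w|| while keeping every margin near 1 is what pushes the hyperplane toward the maximum-margin solution.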

Eigenvalues and Eigenvectors

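Eigenvalues and eigenvectors are concepts in linear algebra that have important applications in machine learning, particularly in dimensionality reduction techniques such as Principal Component Analysis (PCA). In PCA, the principal components are the eigenvectors of the data's covariance matrix, and each corresponding eigenvalue measures the variance of the data along that direction, so keeping the eigenvectors with the largest eigenvalues preserves as much of the variance as possible.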
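The sketch below performs PCA on a small toy 2-D data set: it builds the sample covariance matrix and finds its eigenvalues and eigenvectors. It handles only the 2-D case, where a symmetric 2x2 matrix has a closed-form eigendecomposition; real PCA code would call a linear-algebra routine such as NumPy's `numpy.linalg.eigh` or use an SVD. The helper names are our own.

```python
import math
from typing import List, Tuple


def covariance_2d(points: List[Tuple[float, float]]) -> Tuple[float, float, float]:
    """Return (var_x, cov_xy, var_y), the entries of the 2x2 sample covariance matrix."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return sxx, sxy, syy


def eig_sym_2x2(a: float, b: float, c: float) -> List[Tuple[float, Tuple[float, float]]]:
    """Eigenvalues and unit eigenvectors of the symmetric matrix [[a, b], [b, c]],
    sorted by decreasing eigenvalue."""
    if abs(b) < 1e-12:
        # Already diagonal: the eigenvectors are the coordinate axes.
        pairs = [(a, (1.0, 0.0)), (c, (0.0, 1.0))]
        return sorted(pairs, key=lambda pair: pair[0], reverse=True)
    mean = (a + c) / 2.0
    radius = math.hypot((a - c) / 2.0, b)
    pairs = []
    for lam in (mean + radius, mean - radius):
        v = (b, lam - a)  # satisfies (a - lam) * v[0] + b * v[1] = 0
        norm = math.hypot(*v)
        pairs.append((lam, (v[0] / norm, v[1] / norm)))
    return pairs


# Toy 2-D data stretched roughly along the line y = x.
data = [(2.5, 2.4), (0.5, 0.7), (2.2, 2.9), (1.9, 2.2), (3.1, 3.0),
        (2.3, 2.7), (2.0, 1.6), (1.0, 1.1), (1.5, 1.6), (1.1, 0.9)]
(l1, v1), (l2, v2) = eig_sym_2x2(*covariance_2d(data))
print("first principal component:", v1, "variance:", l1)
print("share of variance explained:", l1 / (l1 + l2))
```

Because the first eigenvalue is much larger than the second, projecting each point onto `v1` reduces the data from two dimensions to one while keeping most of its variance.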

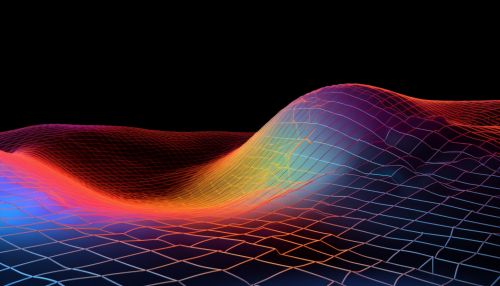

Calculus

Calculus, specifically differential calculus, plays a crucial role in machine learning. It is used to train models by optimizing their parameters and to analyze how changes in the input affect the output.

Gradient Descent

Gradient descent is a first-order optimization algorithm that is widely used in machine learning for training models. It uses the derivative, or more generally the gradient, of a function, typically a loss or cost function L(θ), to iteratively adjust the model parameters θ: at each step the parameters move a small distance in the direction of steepest descent, θ ← θ - η ∇L(θ), where the learning rate η controls the step size.
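A minimal sketch of the idea, assuming a one-variable linear regression model y = w*x + b and mean squared error as the loss. The data, learning rate, and step count are illustrative choices, not values from the text.

```python
from typing import List, Tuple


def mse_and_gradient(w: float, b: float, xs: List[float], ys: List[float]) -> Tuple[float, float, float]:
    """Mean squared error of the model y_hat = w*x + b and its partial derivatives."""
    n = len(xs)
    residuals = [w * x + b - y for x, y in zip(xs, ys)]
    loss = sum(r * r for r in residuals) / n
    dw = 2.0 * sum(r * x for r, x in zip(residuals, xs)) / n  # dL/dw
    db = 2.0 * sum(residuals) / n                              # dL/db
    return loss, dw, db


def gradient_descent(xs: List[float], ys: List[float], lr: float = 0.05, steps: int = 2000) -> Tuple[float, float]:
    w, b = 0.0, 0.0
    for _ in range(steps):
        _, dw, db = mse_and_gradient(w, b, xs, ys)
        # Step against the gradient: theta <- theta - lr * grad L(theta)
        w -= lr * dw
        b -= lr * db
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = gradient_descent(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

With these settings the script prints `w = 2.000, b = 1.000`, recovering the line the data came from. The learning rate matters: for this particular data set, a rate above roughly 0.15 makes the updates diverge instead of converge, because the steps overshoot along the loss surface's most curved direction.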

Backpropagation

Backpropagation, a method used in training neural networks, is another application of calculus in machine learning. It involves calculating the gradient of the loss function with respect to the weights of the network using the chain rule, a fundamental rule in calculus.
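The hand-written sketch below applies the chain rule to a deliberately tiny, hypothetical network (one input, two tanh hidden units, one linear output, squared-error loss) and checks the resulting gradients against finite differences. Real frameworks automate these steps with automatic differentiation; the sketch only shows the mechanics, and the parameter names and values are our own.

```python
import math
from typing import Dict


def forward_backward(params: Dict[str, float], x: float, y: float):
    """Loss and gradients for a tiny network y_hat = v1*tanh(w1*x) + v2*tanh(w2*x)
    with squared-error loss L = 0.5 * (y_hat - y)^2."""
    # Forward pass: keep intermediate values for the backward pass.
    h1 = math.tanh(params["w1"] * x)
    h2 = math.tanh(params["w2"] * x)
    y_hat = params["v1"] * h1 + params["v2"] * h2
    loss = 0.5 * (y_hat - y) ** 2

    # Backward pass: apply the chain rule from the loss back to each weight.
    d_yhat = y_hat - y                  # dL/dy_hat
    d_v1 = d_yhat * h1                  # dL/dv1
    d_v2 = d_yhat * h2                  # dL/dv2
    d_h1 = d_yhat * params["v1"]        # dL/dh1
    d_h2 = d_yhat * params["v2"]        # dL/dh2
    d_w1 = d_h1 * (1.0 - h1 * h1) * x   # tanh'(z) = 1 - tanh(z)^2
    d_w2 = d_h2 * (1.0 - h2 * h2) * x
    grads = {"w1": d_w1, "w2": d_w2, "v1": d_v1, "v2": d_v2}
    return loss, grads


def numerical_gradient(params: Dict[str, float], x: float, y: float, eps: float = 1e-6) -> Dict[str, float]:
    """Central finite differences, used only to check the backpropagated gradients."""
    grads = {}
    for name in params:
        plus = dict(params, **{name: params[name] + eps})
        minus = dict(params, **{name: params[name] - eps})
        grads[name] = (forward_backward(plus, x, y)[0] - forward_backward(minus, x, y)[0]) / (2 * eps)
    return grads


params = {"w1": 0.5, "w2": -0.3, "v1": 1.2, "v2": 0.8}
loss, analytic = forward_backward(params, x=1.5, y=0.2)
numeric = numerical_gradient(params, x=1.5, y=0.2)
for name in params:
    print(name, round(analytic[name], 6), round(numeric[name], 6))
```

The backward pass reuses `h1`, `h2`, and `y_hat` from the forward pass, which is what makes backpropagation efficient: every weight's gradient costs only a few extra multiplications.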

Optimization Theory

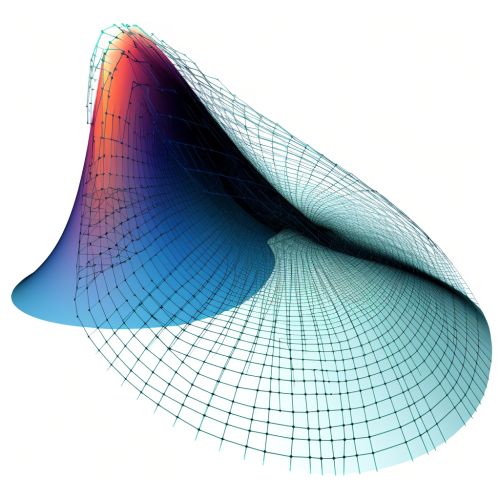

Optimization theory is a branch of mathematics that deals with finding the best solution from a set of feasible solutions. In the context of machine learning, optimization algorithms are used to adjust the parameters of a model to minimize the error or maximize the likelihood of the data.

Convex Optimization

Convex optimization is a subfield of optimization theory that focuses on minimizing a convex objective function over a convex feasible set, that is, a set that contains the entire line segment between any two of its points. Such problems have the useful property that every local minimum is also a global minimum. Many machine learning problems, such as fitting linear regression (least squares) and logistic regression models, can be formulated as convex optimization problems.
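One practical consequence of convexity is that gradient descent with a suitable step size reaches the same minimum from any starting point. The sketch below checks this for L2-regularized logistic regression on a small made-up one-feature data set; the regularization strength `lam`, the learning rate, and the step count are illustrative assumptions.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def loss_and_grad(w: float, b: float, xs, ys, lam: float = 0.1):
    """Regularized logistic loss (labels in {0, 1}) and its gradient in (w, b)."""
    n = len(xs)
    loss, gw, gb = 0.0, 0.0, 0.0
    for x, y in zip(xs, ys):
        z = w * x + b
        # log(1 + e^z) - y*z, computed stably
        loss += max(z, 0.0) + math.log1p(math.exp(-abs(z))) - y * z
        err = sigmoid(z) - y  # derivative of the per-point loss with respect to z
        gw += err * x
        gb += err
    loss = loss / n + 0.5 * lam * w * w
    return loss, gw / n + lam * w, gb / n


def minimize(w: float, b: float, xs, ys, lr: float = 0.5, steps: int = 5000):
    for _ in range(steps):
        _, gw, gb = loss_and_grad(w, b, xs, ys)
        w, b = w - lr * gw, b - lr * gb
    return w, b


xs = [-2.0, -1.0, -0.5, 0.0, 0.5, 1.0, 2.0, 3.0]
ys = [0, 0, 1, 0, 1, 0, 1, 1]  # overlapping classes, so a finite minimizer exists
rng = random.Random(0)
for _ in range(4):
    w0, b0 = rng.uniform(-5, 5), rng.uniform(-5, 5)
    w, b = minimize(w0, b0, xs, ys)
    loss = loss_and_grad(w, b, xs, ys)[0]
    print(f"start ({w0:+.2f}, {b0:+.2f}) -> w = {w:.4f}, b = {b:.4f}, loss = {loss:.6f}")
```

Every run prints the same `w`, `b`, and loss, whatever the random starting point.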

Non-Convex Optimization

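Non-convex optimization deals with problems whose objective function or feasible set is not convex. These problems are generally much harder to solve because they can have many local minima and saddle points, so gradient-based methods are not guaranteed to find the global minimum. Despite this, many important machine learning models, most notably neural networks, are trained by solving non-convex optimization problems.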
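The effect of non-convexity shows up even in one dimension. The sketch below runs plain gradient descent on the illustrative function f(x) = x^4 - 3x^2 + x, chosen for the example rather than taken from any particular model, starting from two different points.

```python
def f(x: float) -> float:
    """A non-convex function with two local minima."""
    return x**4 - 3 * x**2 + x


def df(x: float) -> float:
    """Derivative of f."""
    return 4 * x**3 - 6 * x + 1


def gradient_descent(x: float, lr: float = 0.01, steps: int = 2000) -> float:
    for _ in range(steps):
        x -= lr * df(x)
    return x


for start in (-2.0, 2.0):
    x_min = gradient_descent(start)
    print(f"start {start:+.1f} -> x = {x_min:.4f}, f(x) = {f(x_min):.4f}")
```

The run starting at -2 reaches the global minimum near x = -1.30, while the run starting at 2 settles in the shallower local minimum near x = 1.13. Both points have zero gradient, so gradient descent alone cannot tell them apart; the starting point decides the outcome.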

Conclusion

The mathematics of machine learning is a vast and complex field, encompassing many areas of mathematics. Understanding these mathematical principles is crucial for developing and improving machine learning algorithms. While this article provides an overview of the main mathematical concepts used in machine learning, it is by no means exhaustive, and there are many other mathematical concepts and techniques that are also important in this field.