Linear algebra

Introduction

Linear algebra is a branch of mathematics that studies vectors, vector spaces (also called linear spaces), and linear transformations between vector spaces, such as rotating a shape about the origin or scaling it up or down. Translating a shape (moving it without rotating or scaling it) is not a linear transformation, because it moves the origin; it is an affine transformation. Linear algebra includes the study of lines, planes, and subspaces, but is also concerned with properties common to all vector spaces.

Basic Concepts

Vectors

In linear algebra, a vector is an element of a vector space. Vectors can be added together and multiplied (scaled) by numbers, called scalars in this context. Scalars are often taken to be real numbers, but there are also vector spaces whose scalars are complex numbers, rational numbers, or, more generally, elements of any field.
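As a minimal sketch, assuming vectors in R^n are stored as Python tuples of numbers (a representation chosen here only for illustration), the two basic operations can be written as follows. The helper names `add` and `scale` are hypothetical, not part of any library.

```python
from typing import Tuple

# Assumption: a vector in R^n is represented as a tuple of n floats.
Vector = Tuple[float, ...]

def add(u: Vector, v: Vector) -> Vector:
    """Componentwise sum of two vectors of the same dimension."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    return tuple(a + b for a, b in zip(u, v))

def scale(c: float, v: Vector) -> Vector:
    """Multiply every component of v by the scalar c."""
    return tuple(c * a for a in v)

u = (1.0, 2.0, 3.0)
v = (4.0, 5.0, 6.0)
print(add(u, v))      # (5.0, 7.0, 9.0)
print(scale(2.0, u))  # (2.0, 4.0, 6.0)
```

Both operations act componentwise, so `add` requires vectors of the same dimension and raises an error otherwise.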

Vector Spaces

A vector space is a set of vectors, together with a field of scalars, equipped with two operations (vector addition and scalar multiplication) that satisfy a list of axioms. For example, every vector space has a zero vector 0, which when added to any vector v yields v again. Every vector v also has an additive inverse -v, a vector such that v + (-v) = 0.
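The zero vector and additive inverses can be checked concretely in R^3. This sketch assumes vectors are stored as tuples of floats and uses hypothetical helpers `zero` and `negate`; it tests the two properties on one sample vector rather than proving them for all vectors.

```python
from typing import Tuple

# Assumption: a vector in R^n is represented as a tuple of n floats.
Vector = Tuple[float, ...]

def add(u: Vector, v: Vector) -> Vector:
    """Componentwise sum of two vectors."""
    return tuple(a + b for a, b in zip(u, v))

def zero(n: int) -> Vector:
    """The zero vector of R^n."""
    return (0.0,) * n

def negate(v: Vector) -> Vector:
    """The additive inverse -v, obtained by negating each component."""
    return tuple(-a for a in v)

v = (1.5, -2.0, 4.0)
assert add(v, zero(3)) == v          # v + 0 = v
assert add(v, negate(v)) == zero(3)  # v + (-v) = 0
```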

Linear Transformations

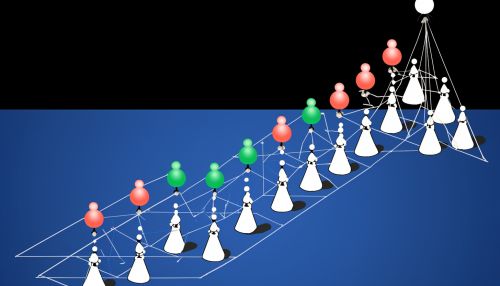

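A linear transformation (or linear map) is a mapping T between two vector spaces that preserves addition and scalar multiplication: T(u + v) = T(u) + T(v) and T(cv) = cT(v) for all vectors u, v and scalars c. When both spaces are finite-dimensional, choosing a basis for each lets every linear transformation be represented by a matrix. This turns questions about transformations into concrete computations with matrices, which is why matrix theory is often introduced first.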
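The two linearity conditions can be tested numerically. The sketch below, with hypothetical function names, compares a 90-degree rotation of the plane, which is linear, against a translation by (1, 0), which is not: translation moves the zero vector, so it fails the additivity test. Passing the test for a few sample vectors does not prove that a map is linear, while a single failure does show that it is not.

```python
from typing import Callable, Tuple

Vec2 = Tuple[float, float]

def rotate90(v: Vec2) -> Vec2:
    """Rotate a vector in the plane by 90 degrees counterclockwise."""
    x, y = v
    return (-y, x)

def translate(v: Vec2) -> Vec2:
    """Shift a vector by (1, 0); this map is affine, not linear."""
    x, y = v
    return (x + 1, y)

def add(u: Vec2, v: Vec2) -> Vec2:
    return (u[0] + v[0], u[1] + v[1])

def scale(c: float, v: Vec2) -> Vec2:
    return (c * v[0], c * v[1])

def respects_linearity(f: Callable[[Vec2], Vec2], u: Vec2, v: Vec2, c: float) -> bool:
    """Check f(u + v) == f(u) + f(v) and f(c*u) == c*f(u) on the given samples."""
    return f(add(u, v)) == add(f(u), f(v)) and f(scale(c, u)) == scale(c, f(u))

u, v, c = (1, 2), (3, -1), 5
print(respects_linearity(rotate90, u, v, c))   # True
print(respects_linearity(translate, u, v, c))  # False
```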

Matrices and Matrix Theory

In linear algebra, a matrix is a rectangular array of numbers arranged in rows and columns; a matrix with m rows and n columns is called an m × n matrix. Matrices can be added and multiplied according to specific rules. Their central role is to represent linear transformations: an m × n matrix describes a linear map from an n-dimensional vector space to an m-dimensional one, with column j holding the image of the j-th basis vector.
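One way to see how a matrix represents a linear transformation: with respect to the standard basis, column j of the matrix is the image of the j-th basis vector e_j, and multiplying the matrix by a vector then reproduces the transformation. The map `f` below is a hypothetical example chosen for illustration, and matrices are stored as lists of rows.

```python
from typing import Callable, List, Sequence

Matrix = List[List[float]]

def matrix_of(f: Callable[[Sequence[float]], Sequence[float]], n: int) -> Matrix:
    """Build the matrix of a linear map f: R^n -> R^m in the standard basis.

    Column j is f(e_j), where e_j is the j-th standard basis vector.
    """
    columns = []
    for j in range(n):
        e_j = [1.0 if i == j else 0.0 for i in range(n)]
        columns.append(list(f(e_j)))
    m = len(columns[0])
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n)] for i in range(m)]

def mat_vec(a: Matrix, x: Sequence[float]) -> List[float]:
    """Matrix-vector product: entry i is the dot product of row i with x."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]

def f(v: Sequence[float]) -> List[float]:
    """A hypothetical linear map R^2 -> R^2, chosen for illustration."""
    x, y = v
    return [2 * x + y, 3 * y]

A = matrix_of(f, 2)
print(A)                   # [[2.0, 1.0], [0.0, 3.0]]
print(mat_vec(A, [4, 5]))  # [13.0, 15.0]
print(f([4, 5]))           # [13, 15]
```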

Matrix Operations

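Matrix operations include addition (of two matrices with the same shape), multiplication (an m × n matrix times an n × p matrix gives an m × p matrix), and transposition (swapping rows and columns), as well as computing the determinant and inverse of a square matrix. A square matrix has an inverse exactly when its determinant is nonzero.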
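These operations can be sketched in plain Python, with matrices stored as lists of rows and entries as `fractions.Fraction` so that the inverse is computed exactly. For brevity, this sketch computes the determinant and inverse only for 2 × 2 matrices, using det = ps - qr and inverse = (1/det)[[s, -q], [-r, p]] for a matrix [[p, q], [r, s]]; larger matrices are usually handled by elimination instead. All function names are hypothetical.

```python
from fractions import Fraction
from typing import List

Matrix = List[List[Fraction]]

def mat_add(a: Matrix, b: Matrix) -> Matrix:
    """Entrywise sum; a and b must have the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def transpose(a: Matrix) -> Matrix:
    """Swap rows and columns."""
    return [list(col) for col in zip(*a)]

def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Product of an m x n matrix a and an n x p matrix b."""
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must agree")
    bt = transpose(b)  # columns of b, as rows
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def det2(a: Matrix) -> Fraction:
    """Determinant of a 2 x 2 matrix [[p, q], [r, s]]: ps - qr."""
    (p, q), (r, s) = a
    return p * s - q * r

def inv2(a: Matrix) -> Matrix:
    """Inverse of a 2 x 2 matrix; it exists only when the determinant is nonzero."""
    d = det2(a)
    if d == 0:
        raise ValueError("matrix is singular")
    (p, q), (r, s) = a
    return [[s / d, -q / d], [-r / d, p / d]]

A = [[Fraction(2), Fraction(1)], [Fraction(5), Fraction(3)]]
print(det2(A))                                  # 1
print(mat_mul(A, inv2(A)) == [[1, 0], [0, 1]])  # True
print(transpose(A) == [[2, 5], [1, 3]])         # True
```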

Systems of Linear Equations

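A system of linear equations is a collection of equations, each of which is linear in the same set of variables. Such a system can be written as Ax = b, where A is the matrix of coefficients, x is the vector of unknowns, and b is the vector of constants. Gaussian elimination solves the system by applying a sequence of elementary row operations (swapping two rows, multiplying a row by a nonzero scalar, and adding a multiple of one row to another) to the augmented matrix [A | b] until it is in row echelon form, after which the unknowns are found by back substitution.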
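A minimal sketch of Gaussian elimination with back substitution, assuming the coefficient matrix is square and nonsingular (so the system has exactly one solution). It works on the augmented matrix [A | b], the coefficient matrix with the constants appended as an extra column, and uses exact `fractions.Fraction` arithmetic, so any nonzero pivot is acceptable; floating-point implementations usually choose the largest available pivot in each column (partial pivoting) to limit rounding error. The function name `gaussian_solve` is hypothetical.

```python
from fractions import Fraction
from typing import List, Sequence

def gaussian_solve(a: Sequence[Sequence[int]], b: Sequence[int]) -> List[Fraction]:
    """Solve a x = b for a square, nonsingular a by Gaussian elimination."""
    n = len(a)
    # Build the augmented matrix [a | b] with exact rational entries.
    m = [[Fraction(x) for x in row] + [Fraction(bi)] for row, bi in zip(a, b)]

    # Forward elimination: reduce to upper-triangular (row echelon) form.
    for col in range(n):
        # Find a row at or below `col` with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is singular")
        m[col], m[pivot] = m[pivot], m[col]  # row swap
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            # Subtract a multiple of the pivot row to zero out m[r][col].
            m[r] = [x - factor * y for x, y in zip(m[r], m[col])]

    # Back substitution, from the last row upward.
    x = [Fraction(0)] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x

# 2x + y - z = 8,  -3x - y + 2z = -11,  -2x + y + 2z = -3
A = [[2, 1, -1], [-3, -1, 2], [-2, 1, 2]]
b = [8, -11, -3]
print(*gaussian_solve(A, b))  # 2 3 -1
```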

Eigenvalues and Eigenvectors

In linear algebra, an eigenvector of a linear transformation T is a non-zero vector v that T changes only by a scalar factor, that is, T(v) = λv for some scalar λ. The scalar λ is called the eigenvalue corresponding to v.
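For a 2 × 2 matrix A, the eigenvalues are the roots of the characteristic polynomial det(A - λI) = λ^2 - tr(A)λ + det(A). The sketch below, with a hypothetical function `eig2`, solves that quadratic and then picks a nonzero solution of (A - λI)v = 0 for each root. It handles only real eigenvalues; eigenvectors are determined only up to a nonzero scalar multiple, and numerical libraries use iterative algorithms rather than this closed form for larger matrices.

```python
import math
from typing import List, Tuple

def eig2(a: List[List[float]]) -> List[Tuple[float, List[float]]]:
    """Real eigenpairs (eigenvalue, eigenvector) of a 2 x 2 matrix a."""
    (p, q), (r, s) = a
    if q == 0 and r == 0:
        # Diagonal matrix: the diagonal entries are the eigenvalues
        # and the standard basis vectors are eigenvectors.
        return [(p, [1.0, 0.0]), (s, [0.0, 1.0])]
    trace, det = p + s, p * s - q * r
    disc = trace * trace - 4 * det
    if disc < 0:
        raise ValueError("eigenvalues are complex")
    root = math.sqrt(disc)
    pairs = []
    for lam in ((trace + root) / 2, (trace - root) / 2):
        # Choose a nonzero v with (A - lam*I) v = 0, using whichever
        # off-diagonal entry is nonzero.
        v = [q, lam - p] if q != 0 else [lam - s, r]
        pairs.append((lam, v))
    return pairs

A = [[2.0, 1.0], [1.0, 2.0]]
for lam, v in eig2(A):
    av = [A[0][0] * v[0] + A[0][1] * v[1], A[1][0] * v[0] + A[1][1] * v[1]]
    print(lam, v, av)  # A v equals lam * v
# 3.0 [1.0, 1.0] [3.0, 3.0]
# 1.0 [1.0, -1.0] [1.0, -1.0]
```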

Applications of Linear Algebra

Linear algebra is used in almost all areas of mathematics, as well as in many areas of science and engineering. It is essential in the fields of computer graphics, statistics, quantum mechanics, electrical engineering, and many others.