Dynamic Bayesian Network

Introduction

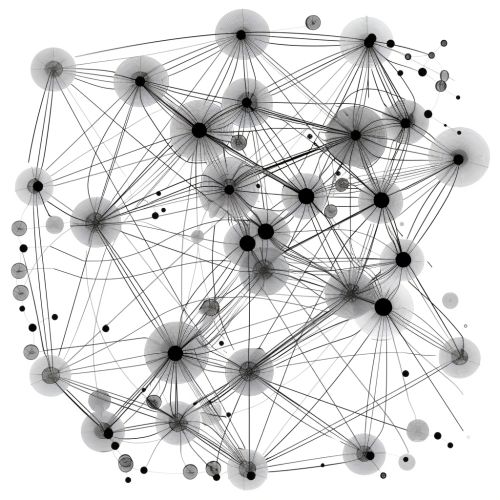

A Dynamic Bayesian Network (DBN) is an extension of a Bayesian Network (BN) that represents sequences of variables over time. DBNs are used to model temporal processes and are particularly useful in fields such as bioinformatics, speech recognition, robotics, and finance. They provide a structured way to represent the probabilistic relationships between variables and their evolution over time.

Structure and Components

A DBN consists of nodes and directed edges, like a standard Bayesian Network, but its variables are replicated across discrete time steps (time slices). The nodes represent random variables, and the edges denote conditional dependencies, either between variables in the same time slice or from variables in one slice to variables in the next. The key components of a DBN include:

- **Temporal Nodes**: These represent variables at different time steps.

- **Transition Model**: This describes how variables evolve from one time step to the next.

- **Observation Model**: This specifies how observed data is generated from the hidden states.

Temporal Nodes

In a DBN, each variable is indexed by time, typically denoted as \(X_t\) for the variable \(X\) at time \(t\). The temporal nodes capture the state of the system at different time points, allowing for the modeling of dynamic processes.
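A common way to specify a DBN, assumed here rather than stated above, is a two-slice template: edges within one time slice plus edges from slice \(t-1\) to slice \(t\), repeated at every step. The hypothetical `unroll` helper below expands such a template into explicit time-indexed nodes \((X, t)\); the variable names `X` and `Y` are illustrative only.

```python
def unroll(intra_edges, inter_edges, T):
    """Expand a two-slice DBN template into explicit edges for slices 1..T.

    intra_edges: (parent, child) pairs within a slice, e.g. ("X", "Y") for X_t -> Y_t
    inter_edges: (parent, child) pairs across slices, e.g. ("X", "X") for X_{t-1} -> X_t
    Nodes are returned as (variable_name, t) tuples.
    """
    edges = []
    for t in range(1, T + 1):
        # Edges inside slice t
        for parent, child in intra_edges:
            edges.append(((parent, t), (child, t)))
        # Edges from slice t-1 into slice t (the first slice has no predecessor)
        if t > 1:
            for parent, child in inter_edges:
                edges.append(((parent, t - 1), (child, t)))
    return edges


# Hypothetical template: hidden X with a self-transition, observed Y depending on X
edges = unroll([("X", "Y")], [("X", "X")], T=3)
for parent, child in edges:
    print(parent, "->", child)
```

Unrolling this template for three steps yields the intra-slice edges \(X_t \to Y_t\) for \(t = 1, 2, 3\) and the inter-slice edges \(X_1 \to X_2\) and \(X_2 \to X_3\).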

Transition Model

The transition model defines the probabilistic dependencies between variables at consecutive time steps. Under the common first-order Markov assumption (the state at time \(t\) depends on the past only through the state at time \(t-1\)), it is written as \(P(X_t | X_{t-1})\), the probability of \(X_t\) given \(X_{t-1}\). This model captures the dynamics of the system and is what allows future states to be predicted from past observations.
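For a discrete state with \(K\) values, the transition model can be stored as a \(K \times K\) matrix \(A\) with \(A_{ij} = P(X_t = j | X_{t-1} = i)\). Predicting one step ahead then marginalizes over the current state, \(P(X_{t+1} = j) = \sum_i P(X_t = i)\,A_{ij}\). The minimal sketch below applies this repeatedly; the two-state matrix `A` is a made-up example, and the model is assumed stationary (the same `A` at every step).

```python
def predict(belief, A):
    """One-step prediction: P(X_{t+1}=j) = sum_i P(X_t=i) * A[i][j].

    belief: list of K probabilities over the states at time t
    A: K x K transition matrix, A[i][j] = P(X_{t+1}=j | X_t=i)
    """
    K = len(belief)
    return [sum(belief[i] * A[i][j] for i in range(K)) for j in range(K)]


def predict_k_steps(belief, A, k):
    """Apply the (stationary) transition model k times."""
    for _ in range(k):
        belief = predict(belief, A)
    return belief


# Hypothetical two-state transition matrix
A = [[0.9, 0.1],
     [0.2, 0.8]]
p = predict_k_steps([1.0, 0.0], A, 2)  # ≈ [0.83, 0.17]
print(p)
```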

Observation Model

The observation model links the hidden states to the observed data. It is represented as \(P(Y_t | X_t)\), where \(Y_t\) is the observed variable at time \(t\) and \(X_t\) is the hidden state. This model allows for the incorporation of noisy or incomplete observations into the DBN framework.
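Together, the transition and observation models define a generative process. The sketch below samples a hidden state sequence and its observations for a discrete model with one hidden and one observed variable per slice (the structure of a hidden Markov model). The parameter names `pi` (initial distribution), `A` (transition matrix, `A[i][j]` = \(P(X_t = j | X_{t-1} = i)\)) and `B` (observation matrix, `B[i][k]` = \(P(Y_t = k | X_t = i)\)) are conventions assumed for this example.

```python
import random


def sample_sequence(pi, A, B, T, seed=0):
    """Draw (hidden, observed) sequences of length T from an HMM-style DBN.

    pi[i]   = P(X_1 = i)
    A[i][j] = P(X_t = j | X_{t-1} = i)   (transition model)
    B[i][k] = P(Y_t = k | X_t = i)       (observation model)
    """
    rng = random.Random(seed)

    def draw(probs):
        # Sample an index in 0..len(probs)-1 with the given probabilities
        return rng.choices(range(len(probs)), weights=probs)[0]

    xs, ys = [], []
    x = draw(pi)                 # initial hidden state
    for t in range(T):
        if t > 0:
            x = draw(A[x])       # hidden state evolves via the transition model
        xs.append(x)
        ys.append(draw(B[x]))    # observation generated from the current state
    return xs, ys


# Hypothetical two-state, two-symbol parameters
pi = [0.5, 0.5]
A = [[0.9, 0.1], [0.2, 0.8]]
B = [[0.8, 0.2], [0.1, 0.9]]
hidden, observed = sample_sequence(pi, A, B, T=10, seed=42)
print(hidden, observed)
```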

Inference in DBNs

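Inference in DBNs involves computing the probability distribution of the hidden states given the observed data. Typical queries are filtering, \(P(X_t | Y_{1:t})\); smoothing, \(P(X_t | Y_{1:T})\) for \(t < T\); and prediction, \(P(X_{t+k} | Y_{1:t})\) for \(k > 0\). Inference can be challenging because of the temporal dependencies and the potentially large state space. Common inference techniques include: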

- **Exact Inference**: Methods such as the Forward-Backward Algorithm and Junction Tree Algorithm.

- **Approximate Inference**: Techniques like Particle Filtering and Variational Inference.

Forward-Backward Algorithm

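The Forward-Backward Algorithm is a dynamic programming approach used for exact inference in DBNs whose hidden variables in each time slice can be treated as a single discrete state, as in a hidden Markov model (HMM). It consists of two main steps: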

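- **Forward Pass**: Computes \(\alpha_t(x) = P(Y_{1:t}, X_t = x)\), the joint probability of the observations up to time \(t\) and the hidden state \(x\) at time \(t\), recursively from \(\alpha_{t-1}\).

- **Backward Pass**: Computes \(\beta_t(x) = P(Y_{t+1:T} | X_t = x)\), the probability of the future observations given the hidden state at time \(t\), recursively from \(\beta_{t+1}\).

These quantities are then combined as \(P(X_t = x | Y_{1:T}) \propto \alpha_t(x)\,\beta_t(x)\) to obtain the smoothed posterior distribution of each hidden state.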
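A minimal pure-Python sketch of the Forward-Backward Algorithm for the simplest case, a hidden Markov model with one discrete hidden variable and one discrete observed variable per slice. The parameter layout is an assumption of this example: `pi[i]` = \(P(X_1 = i)\), `A[i][j]` = \(P(X_t = j | X_{t-1} = i)\), `B[i][k]` = \(P(Y_t = k | X_t = i)\). Each forward and backward vector is normalized at every step to avoid numerical underflow on long sequences; this rescaling does not change the result, because the final product is renormalized.

```python
def forward_backward(pi, A, B, obs):
    """Smoothed posteriors P(X_t = i | y_1..y_T) for a discrete HMM-style DBN."""
    K, T = len(pi), len(obs)

    def normalize(v):
        s = sum(v)
        return [x / s for x in v]

    # Forward pass: alpha[t][i] proportional to P(y_1..y_t, X_t = i)
    alpha = [normalize([pi[i] * B[i][obs[0]] for i in range(K)])]
    for t in range(1, T):
        alpha.append(normalize([
            B[j][obs[t]] * sum(alpha[t - 1][i] * A[i][j] for i in range(K))
            for j in range(K)]))

    # Backward pass: beta[t][i] proportional to P(y_{t+1}..y_T | X_t = i)
    beta = [[1.0] * K for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = normalize([
            sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(K))
            for i in range(K)])

    # Combine: gamma_t(i) proportional to alpha_t(i) * beta_t(i)
    return [normalize([alpha[t][i] * beta[t][i] for i in range(K)]) for t in range(T)]


# Hypothetical two-state, two-symbol model
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
posterior = forward_backward(pi, A, B, obs=[0, 1, 0])
print(posterior)
```

Stopping after the forward pass and reading off the normalized `alpha[T-1]` gives the filtering distribution \(P(X_T | Y_{1:T})\) instead of the smoothed one.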

Particle Filtering

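Particle Filtering is an approximate inference method that represents the filtering distribution with a set of weighted samples (particles). Each particle is a possible state of the system; at every time step the particles are propagated through the transition model, weighted by the likelihood of the new observation under the observation model, and resampled in proportion to their weights. The method is particularly useful for non-linear or non-Gaussian systems with large or continuous state spaces, where exact inference is computationally infeasible, although the number of particles needed can grow rapidly with the dimension of the state.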
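The sketch below is a bootstrap particle filter (sequential importance resampling with the transition model as the proposal), one common variant among several. It assumes a discrete hidden state and discrete observations with parameters `pi` (initial distribution), `A` (`A[i][j]` = \(P(X_t = j | X_{t-1} = i)\)) and `B` (`B[i][k]` = \(P(Y_t = k | X_t = i)\)); these names, the particle count and the seed are illustrative. A discrete state is used only so the estimates can be checked against exact filtering; for continuous states, sampling and likelihood functions would replace `A` and `B`.

```python
import random


def particle_filter(pi, A, B, obs, n_particles=1000, seed=0):
    """Bootstrap particle filter; returns estimates of P(X_t = i | y_1..y_t).

    Assumes every B[i][k] > 0 so the weights never all vanish.
    """
    rng = random.Random(seed)
    K = len(pi)
    states = range(K)
    # Initialize: sample particles from the prior P(X_1)
    particles = rng.choices(states, weights=pi, k=n_particles)
    estimates = []
    for t, y in enumerate(obs):
        if t > 0:
            # Propagate: draw X_t ~ P(X_t | X_{t-1}) for each particle
            particles = [rng.choices(states, weights=A[x])[0] for x in particles]
        # Weight: importance weight = observation likelihood P(y_t | X_t)
        weights = [B[x][y] for x in particles]
        total = sum(weights)
        # Estimate the filtering distribution from the weighted particles
        est = [0.0] * K
        for x, w in zip(particles, weights):
            est[x] += w / total
        estimates.append(est)
        # Resample: multinomial resampling in proportion to the weights
        particles = rng.choices(particles, weights=weights, k=n_particles)
    return estimates


# Hypothetical two-state, two-symbol model
pi = [0.6, 0.4]
A = [[0.7, 0.3], [0.4, 0.6]]
B = [[0.9, 0.1], [0.2, 0.8]]
approx = particle_filter(pi, A, B, obs=[0, 1, 0], n_particles=5000, seed=1)
print(approx)
```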

Learning in DBNs

Learning in DBNs involves estimating the parameters of the transition and observation models from data. This can be done using:

- **Maximum Likelihood Estimation (MLE)**: Estimates parameters that maximize the likelihood of the observed data.

- **Expectation-Maximization (EM) Algorithm**: An iterative method that alternates between estimating the hidden states and optimizing the model parameters.

Maximum Likelihood Estimation

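In MLE, the goal is to find the parameters \(\theta\) that maximize the likelihood function \(L(\theta)\). For DBNs, this involves computing the likelihood of the observed data given the model parameters and finding the parameter values that maximize it. When all variables are observed, the likelihood factorizes over the conditional distributions, and for discrete variables the MLE of each conditional probability is simply a normalized count. When some variables are hidden, the likelihood requires summing over their values and generally has no closed-form maximizer, which is why the EM Algorithm is used.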
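With fully observed discrete data, the MLE can be computed by counting. The hypothetical `mle_counts` function below assumes each training example is a pair of equal-length, integer-coded sequences (hidden states, observations) and returns an initial distribution `pi`, a transition matrix `A` (`A[i][j]` estimates \(P(X_t = j | X_{t-1} = i)\)) and an observation matrix `B` (`B[i][k]` estimates \(P(Y_t = k | X_t = i)\)). Rows with no data fall back to a uniform distribution, a convention chosen here because the MLE is undefined for them; a positive `pseudocount` adds Laplace smoothing, which gives a MAP-style estimate rather than pure MLE.

```python
def mle_counts(hidden_seqs, obs_seqs, K, M, pseudocount=0.0):
    """Estimate pi, A, B by normalized counts from fully observed sequences.

    hidden_seqs[n][t] in 0..K-1, obs_seqs[n][t] in 0..M-1.
    pseudocount > 0 gives Laplace smoothing (a MAP estimate, not pure MLE).
    """
    pi_c = [pseudocount] * K
    A_c = [[pseudocount] * K for _ in range(K)]
    B_c = [[pseudocount] * M for _ in range(K)]
    for xs, ys in zip(hidden_seqs, obs_seqs):
        pi_c[xs[0]] += 1                      # initial state counts
        for prev, cur in zip(xs, xs[1:]):
            A_c[prev][cur] += 1               # transition counts
        for x, y in zip(xs, ys):
            B_c[x][y] += 1                    # observation counts

    def normalize(row):
        s = sum(row)
        # Rows with no data: MLE undefined, fall back to uniform (a convention)
        return [v / s for v in row] if s > 0 else [1.0 / len(row)] * len(row)

    return normalize(pi_c), [normalize(r) for r in A_c], [normalize(r) for r in B_c]


pi, A, B = mle_counts([[0, 0, 1, 1]], [[0, 0, 1, 0]], K=2, M=2)
# pi == [1.0, 0.0], A == [[0.5, 0.5], [0.0, 1.0]], B == [[1.0, 0.0], [0.5, 0.5]]
print(pi, A, B)
```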

Expectation-Maximization Algorithm

The EM Algorithm is particularly useful for DBNs with hidden variables. It consists of two main steps:

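- **Expectation Step (E-step)**: Computes the posterior distribution of the hidden states given the observed data and the current parameters (for example, with the Forward-Backward Algorithm), and from it the expected sufficient statistics, such as expected transition and observation counts.

- **Maximization Step (M-step)**: Re-estimates the model parameters to maximize the expected complete-data log-likelihood, using the statistics computed in the E-step.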

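Each iteration never decreases the likelihood of the observed data. The process continues until convergence, typically to a local maximum (in general, a stationary point) of the likelihood, so the resulting estimates depend on the initial parameters.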
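For the simplest DBN, a hidden Markov model with one discrete hidden and one discrete observed variable per slice, EM is known as the Baum-Welch algorithm. The sketch below assumes a single observation sequence and parameters `pi`, `A` (`A[i][j]` = \(P(X_t = j | X_{t-1} = i)\)) and `B` (`B[i][k]` = \(P(Y_t = k | X_t = i)\)). The E-step is a scaled Forward-Backward pass that also returns the log-likelihood, so the monotone behaviour of EM can be observed. Initial parameters are assumed strictly positive; otherwise some M-step denominators could be zero.

```python
import math


def e_step(pi, A, B, obs):
    """Scaled Forward-Backward: posteriors gamma, xi and log P(y_1..y_T)."""
    K, T = len(pi), len(obs)
    alpha, scale = [], []
    for t in range(T):
        if t == 0:
            a = [pi[i] * B[i][obs[0]] for i in range(K)]
        else:
            a = [B[j][obs[t]] * sum(alpha[t - 1][i] * A[i][j] for i in range(K))
                 for j in range(K)]
        c = sum(a)  # scaling factor c_t = P(y_t | y_1..y_{t-1})
        scale.append(c)
        alpha.append([v / c for v in a])
    beta = [[1.0] * K for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = [sum(A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] for j in range(K))
                   / scale[t + 1] for i in range(K)]
    # gamma[t][i] = P(X_t = i | y), xi[t][i][j] = P(X_t = i, X_{t+1} = j | y)
    gamma = [[alpha[t][i] * beta[t][i] for i in range(K)] for t in range(T)]
    xi = [[[alpha[t][i] * A[i][j] * B[j][obs[t + 1]] * beta[t + 1][j] / scale[t + 1]
            for j in range(K)] for i in range(K)] for t in range(T - 1)]
    loglik = sum(math.log(c) for c in scale)
    return gamma, xi, loglik


def m_step(obs, gamma, xi, K, M):
    """Re-estimate pi, A, B from the expected counts of the E-step."""
    T = len(obs)
    pi = list(gamma[0])
    A = []
    for i in range(K):
        denom = sum(gamma[t][i] for t in range(T - 1))  # expected visits to i before T
        A.append([sum(xi[t][i][j] for t in range(T - 1)) / denom for j in range(K)])
    B = []
    for i in range(K):
        denom = sum(gamma[t][i] for t in range(T))      # expected visits to i
        B.append([sum(gamma[t][i] for t in range(T) if obs[t] == k) / denom
                  for k in range(M)])
    return pi, A, B


def baum_welch(obs, pi, A, B, M, n_iter=20):
    """Run n_iter EM iterations; returns final parameters and log-likelihood history."""
    K = len(pi)
    history = []
    for _ in range(n_iter):
        gamma, xi, loglik = e_step(pi, A, B, obs)  # E-step
        history.append(loglik)
        pi, A, B = m_step(obs, gamma, xi, K, M)    # M-step
    return pi, A, B, history


obs = [0, 0, 1, 1, 0, 1, 1, 1, 0, 0]  # toy binary observation sequence
pi, A, B, history = baum_welch(obs, [0.5, 0.5], [[0.6, 0.4], [0.3, 0.7]],
                               [[0.7, 0.3], [0.4, 0.6]], M=2)
print(history[0], history[-1])  # log-likelihood never decreases
```

Running it from several initializations and comparing the final log-likelihoods is a simple way to see the dependence on the starting point.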

Applications of DBNs

DBNs have a wide range of applications across various domains. Some notable examples include:

- **Bioinformatics**: Modeling gene regulatory networks and protein interactions.

- **Speech Recognition**: Capturing the temporal dependencies in speech signals.

- **Robotics**: Navigating and planning in dynamic environments.

- **Finance**: Predicting stock prices and market trends.

Bioinformatics

In bioinformatics, DBNs are used to model complex biological processes such as gene expression and protein interactions. By capturing the temporal dynamics of these processes, DBNs enable researchers to understand the underlying mechanisms and make predictions about future states.

Speech Recognition

Speech recognition has long relied on DBNs to model the temporal dependencies in speech signals; the hidden Markov models at the core of classical recognizers are the simplest DBNs. They allow acoustic features and language models to be combined in one probabilistic framework, which improves recognition accuracy.

Robotics

In robotics, DBNs are used for tasks such as navigation and planning in dynamic environments. By modeling the temporal evolution of the robot's state and the environment, DBNs enable robots to make informed decisions and adapt to changing conditions.

Advantages and Limitations

DBNs offer several advantages, including the ability to model complex temporal dependencies and incorporate noisy observations. However, they also have limitations, such as the computational complexity of inference and learning.

Advantages

- **Flexibility**: DBNs can model a wide range of temporal processes.

- **Incorporation of Uncertainty**: DBNs explicitly represent uncertainty in the model.

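- **Scalability**: A DBN is specified by a factored template that is reused at every time step, so the number of parameters depends on the local dependencies rather than on the sequence length or the size of the full joint state space, and standard inference and learning passes scale linearly with sequence length.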

Limitations

- **Computational Complexity**: Inference and learning in DBNs can be computationally intensive.

- **Model Specification**: Defining the structure and parameters of the DBN can be challenging.

- **Approximation Errors**: Approximate inference methods may introduce errors.
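A rough count shows where the computational cost comes from. Assume, for illustration, that each time slice contains \(n\) discrete hidden variables with \(K\) values each, and that exact inference merges them into a single state variable with \(S\) values and runs the Forward-Backward Algorithm over \(T\) steps:

```latex
S = K^{n}, \qquad
\text{cost} = O\!\left(T\,S^{2}\right) = O\!\left(T\,K^{2n}\right)
```

For \(n = 10\) binary variables, \(S = 2^{10} = 1024\), so each time step already involves on the order of \(S^2 \approx 10^6\) operations. Methods that exploit the factored structure, such as the Junction Tree Algorithm, can reduce this cost, but for exact inference it generally still grows exponentially with the number of hidden variables per slice, which is one reason approximate methods are used.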

Conclusion

Dynamic Bayesian Networks are powerful tools for modeling temporal processes in various domains. They provide a structured framework for representing the probabilistic relationships between variables and their evolution over time. Despite their computational challenges, DBNs offer significant advantages in terms of flexibility, scalability, and the ability to incorporate uncertainty.