Word2Vec

Introduction

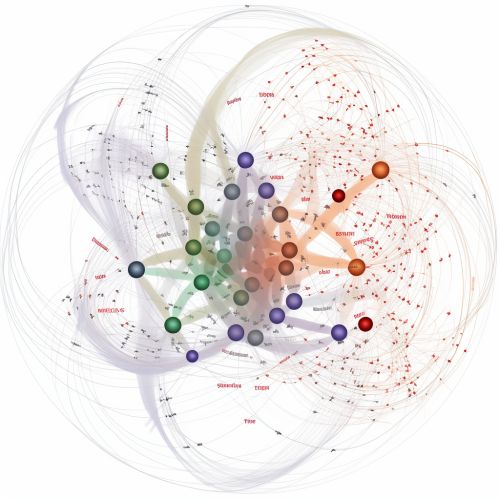

Word2Vec is a group of related models that are used to produce word embeddings. These models are two-layer neural nets that are trained to reconstruct linguistic contexts of words. Word2Vec takes as its input a large corpus of text and produces a vector space, typically of several hundred dimensions, with each unique word in the corpus being assigned a corresponding vector in the space. Word vectors are positioned in the vector space such that words that share common contexts in the corpus are located in close proximity to one another in the space.

Background

Word2Vec was created by a team of researchers led by Tomas Mikolov at Google. The algorithm has been widely used in various natural language processing (NLP) tasks, such as sentiment analysis, named entity recognition, machine translation, and information retrieval. Its ability to capture semantic and syntactic relationships between words has made it a valuable tool in the field of computational linguistics.

Working Principle

Word2Vec uses a shallow neural network model to learn word associations from a text corpus. The underlying assumption of Word2Vec is that two words sharing similar contexts also share a similar meaning and consequently a similar vector representation from the model. For instance, "dog", "puppy" and "pup" are often used in similar situations, with similar surrounding words like "bark", "fur", and "pet", and according to Word2Vec they will therefore share a similar vector representation.
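The shared-context assumption can be illustrated without any neural network by counting which words appear near each word. The sketch below is only an illustration: the function name `context_counts`, the window size of 2, and the three-sentence toy corpus are assumptions made for this example, and Word2Vec itself learns dense vectors rather than storing raw counts.

```python
from collections import Counter, defaultdict

def context_counts(sentences, window=2):
    """Count, for every word, the words seen within `window` positions of it."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.lower().split()
        for i, word in enumerate(tokens):
            start = max(0, i - window)
            stop = min(len(tokens), i + window + 1)
            for j in range(start, stop):
                if j != i:
                    counts[word][tokens[j]] += 1
    return counts

# Toy corpus, invented for illustration only.
corpus = [
    "the dog will bark at the pet store",
    "the puppy will bark at the pet store",
    "the bank will close at noon today",
]

counts = context_counts(corpus)
shared_dog_puppy = set(counts["dog"]) & set(counts["puppy"])
shared_dog_bank = set(counts["dog"]) & set(counts["bank"])
print(sorted(shared_dog_puppy))  # ['bark', 'the', 'will']
print(sorted(shared_dog_bank))   # ['the', 'will']
```

In this toy corpus "dog" and "puppy" have identical context sets, while "dog" and "bank" overlap only on function words, which is the kind of signal the model turns into vector proximity.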

From this assumption, Word2Vec learns the vector of a word from the words that occur around it in the corpus. It offers two architectures for this purpose: Continuous Bag of Words (CBOW) and Skip-Gram.

Continuous Bag of Words (CBOW)

In the CBOW model, the distributed representations of the context (the surrounding words) are combined to predict the word in the middle. The order of the context words does not influence the prediction, which is why the model is called a "bag of words", but the architecture does draw on context from both sides of the target word.
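A minimal sketch of a CBOW forward pass, assuming the context vectors are averaged and the prediction is a full softmax over a five-word toy vocabulary. The function name `cbow_forward`, the vocabulary, the dimension of 4, and the randomly initialized (untrained) vectors are all assumptions made for illustration; practical implementations typically replace the full softmax with cheaper approximations such as negative sampling or hierarchical softmax.

```python
import math
import random

def cbow_forward(context_ids, input_vectors, output_vectors):
    """Return a probability for every vocabulary word given the context word ids."""
    dim = len(input_vectors[0])
    # Average the context vectors; averaging discards word order.
    hidden = [
        sum(input_vectors[i][d] for i in context_ids) / len(context_ids)
        for d in range(dim)
    ]
    # Score each candidate target word with a dot product.
    scores = [sum(h * w for h, w in zip(hidden, out)) for out in output_vectors]
    # Softmax turns the scores into probabilities (shifted by the max for stability).
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy vocabulary and untrained random vectors.
vocab = ["the", "dog", "chased", "a", "ball"]
word_to_id = {w: i for i, w in enumerate(vocab)}
rng = random.Random(0)
dim = 4
input_vectors = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]
output_vectors = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in vocab]

# Context around the target word "chased".
context = [word_to_id[w] for w in ["the", "dog", "a", "ball"]]
probs = cbow_forward(context, input_vectors, output_vectors)
reordered = cbow_forward(list(reversed(context)), input_vectors, output_vectors)
```

Because the context vectors are averaged, `probs` and `reordered` agree up to floating-point rounding, which reflects the order-insensitivity described above. Training would then adjust both sets of vectors so that the probability assigned to the true target word increases.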

Skip-Gram

The Skip-Gram architecture reverses the CBOW setup: given a center word, it predicts the surrounding words. The distributed representation of the input word is used to predict each word in its context.
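The training examples for Skip-Gram can be sketched as (center, context) pairs drawn from a sliding window. The helper name `skipgram_pairs`, the fixed window of 1, and the example sentence are assumptions made for this illustration; real implementations often add refinements such as subsampling of frequent words.

```python
def skipgram_pairs(tokens, window=2):
    """List (center, context) pairs for each word and its neighbors within `window`."""
    pairs = []
    for i, center in enumerate(tokens):
        start = max(0, i - window)
        stop = min(len(tokens), i + window + 1)
        for j in range(start, stop):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

tokens = "the quick brown fox jumps".split()
pairs = skipgram_pairs(tokens, window=1)
for pair in pairs:
    print(pair)
# ('the', 'quick')
# ('quick', 'the')
# ('quick', 'brown')
# ('brown', 'quick')
# ('brown', 'fox')
# ('fox', 'brown')
# ('fox', 'jumps')
# ('jumps', 'fox')
```

Each pair is one prediction task: the first element is the input word and the second is a context word the model should assign high probability to.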

Applications of Word2Vec

Word2Vec has been used in many NLP tasks, including sentiment analysis, named entity recognition, and information retrieval. Its vectors can also be compared directly: the semantic similarity between two words is estimated as the cosine of the angle between their vectors. Ranking the vocabulary by this score identifies the words closest in meaning to a given word, which is useful in word analogy tasks, machine translation, and search engines.
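A short sketch of the cosine-similarity lookup described above. The three-dimensional vectors are hand-written for illustration and are not trained embeddings, and the helper names `cosine_similarity` and `most_similar` are assumptions for this example rather than the API of any particular library.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def most_similar(word, vectors, top_n=2):
    """Rank every other word by cosine similarity to `word`, highest first."""
    query = vectors[word]
    scored = [
        (other, cosine_similarity(query, vec))
        for other, vec in vectors.items()
        if other != word
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)[:top_n]

# Hand-written vectors, illustrative only (real embeddings have hundreds of dimensions).
vectors = {
    "dog": [0.9, 0.8, 0.1],
    "puppy": [0.85, 0.75, 0.2],
    "cat": [0.7, 0.9, 0.15],
    "car": [0.1, 0.2, 0.95],
}

neighbors = most_similar("dog", vectors)
print([word for word, _ in neighbors])  # ['puppy', 'cat']
```

Cosine similarity depends only on the direction of the vectors, not their length, so two words can be close even when their vectors differ in magnitude.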

Limitations and Criticisms

Despite its success, Word2Vec has been criticized for its inability to capture polysemy, that is, the capacity of a word to have multiple meanings. For example, the word "bank" can mean the edge of a river or a financial institution, but Word2Vec produces only one vector for "bank", so both senses are merged into a single representation and cannot be told apart from the vector alone.
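The effect can be seen in a sketch of a static embedding lookup. The table below is hypothetical (the numbers are made up and the helper `embed` is an assumption for this example), but it mirrors how a trained model stores exactly one vector per word form.

```python
# Hypothetical static embedding table: one fixed vector per word form.
embeddings = {
    "bank": [0.42, -0.17, 0.63],
    "river": [0.10, 0.55, -0.20],
    "money": [0.71, -0.33, 0.05],
}

def embed(sentence, table):
    """Look up each known token; the surrounding words do not affect the result."""
    return {tok: table[tok] for tok in sentence.lower().split() if tok in table}

river_sense = embed("we sat on the river bank", embeddings)
money_sense = embed("the bank holds my money", embeddings)

same_vector = river_sense["bank"] == money_sense["bank"]
print(same_vector)  # True
```

The lookup returns the same vector for "bank" in both sentences, so any downstream component has to recover the intended sense from other signals.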

Another limitation is that Word2Vec treats each word form as an atomic unit and learns a separate vector for it, so the model does not use morphological information. For instance, the words "run", "runs", "ran", and "running" are all treated as unrelated vocabulary entries, even though they are forms of the same verb.
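A small sketch of how a word-level vocabulary is typically indexed makes this concrete. The helper `build_vocab` and the example sentence are assumptions for illustration; the point is only that each surface form receives its own independent row in the embedding table.

```python
def build_vocab(tokens):
    """Assign each distinct surface form its own row index, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        if tok not in vocab:
            vocab[tok] = len(vocab)
    return vocab

tokens = "i run she runs he ran they are running".split()
vocab = build_vocab(tokens)

forms = {form: vocab[form] for form in ["run", "runs", "ran", "running"]}
print(forms)  # {'run': 1, 'runs': 3, 'ran': 5, 'running': 8}
```

Nothing in this indexing links the four forms to one another; any similarity between their vectors has to be learned separately from the contexts in which each form occurs.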