VGG16

Introduction

VGG16 is a convolutional neural network model proposed by K. Simonyan and A. Zisserman of the University of Oxford in the paper "Very Deep Convolutional Networks for Large-Scale Image Recognition". The model achieves 92.7% top-5 test accuracy on ImageNet. ImageNet as a whole contains over 14 million images, but the classification benchmark (ILSVRC) uses a subset with 1000 classes. VGG16 was trained for several weeks on NVIDIA Titan Black GPUs.

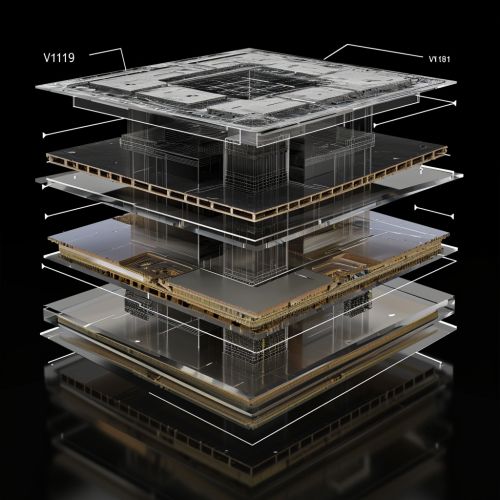

Architecture

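The architecture of VGG16 is characterized by its simplicity. It uses only 3x3 convolutional layers stacked on top of each other, and the number of channels grows from 64 to 512 as the network gets deeper. Spatial size is reduced by 2x2 max pooling with stride 2, applied after each of the five convolutional blocks. The convolutional stack is followed by two fully connected layers with 4096 nodes each, a third fully connected layer with 1000 nodes (one per class), and a softmax classifier.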
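To make the block structure concrete, the sketch below traces the feature-map shape through the convolutional part of the network. It assumes a 224 x 224 x 3 input and the layer list of configuration D from the paper; the names `VGG16_CFG` and `trace_shapes` are hypothetical, chosen only for this illustration.

```python
# VGG16 ("configuration D" in the paper): integers are the output channels of
# 3x3 conv layers (stride 1, padding 1); "M" is a 2x2 max pool with stride 2.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def trace_shapes(cfg, height=224, width=224, channels=3):
    """Return the (height, width, channels) shape after every layer in cfg."""
    shapes = []
    for layer in cfg:
        if layer == "M":
            # 2x2 pooling with stride 2 halves both spatial dimensions.
            height, width = height // 2, width // 2
        else:
            # A 3x3 conv with padding 1 and stride 1 keeps height and width;
            # only the channel count changes.
            channels = layer
        shapes.append((height, width, channels))
    return shapes


shapes = trace_shapes(VGG16_CFG)
for layer, shape in zip(VGG16_CFG, shapes):
    print(layer, shape)

h, w, c = shapes[-1]
print("input size of the first fully connected layer:", h * w * c)
```

After five poolings the 224 x 224 image has shrunk to 7 x 7 with 512 channels, so the first fully connected layer receives 7 x 7 x 512 = 25088 values.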

Layers

The VGG16 network has 16 weight layers: 13 convolutional layers followed by 3 fully connected layers. The max-pooling layers and the final softmax have no learnable weights and are not counted in the name. The convolutional layers detect visual patterns in the image, while the fully connected layers map those patterns to class scores.
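The layer count, and where the parameters live, can be checked with a short calculation. This is a sketch assuming a 224 x 224 RGB input, 1000 output classes and one bias per output unit; `count_parameters` is a hypothetical helper, not part of any library.

```python
# Same hypothetical config list as in the architecture sketch (configuration D).
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def count_parameters(cfg, in_channels=3, num_classes=1000):
    """Return per-layer parameter counts for the conv and FC layers."""
    conv_layers, fc_layers = [], []
    channels = in_channels
    for layer in cfg:
        if layer == "M":
            continue  # pooling layers have no weights
        # One 3x3xC_in kernel per output channel, plus one bias per output channel.
        conv_layers.append(3 * 3 * channels * layer + layer)
        channels = layer
    # Five poolings turn a 224x224 input into a 7x7 map.
    fc_sizes = [7 * 7 * channels, 4096, 4096, num_classes]
    for fan_in, fan_out in zip(fc_sizes, fc_sizes[1:]):
        fc_layers.append(fan_in * fan_out + fan_out)
    return conv_layers, fc_layers


conv, fc = count_parameters(VGG16_CFG)
print(len(conv), "conv +", len(fc), "FC =", len(conv) + len(fc), "weight layers")
print("conv parameters:", sum(conv))
print("FC parameters:  ", sum(fc))
print("total:          ", sum(conv) + sum(fc))
```

The total comes to 138,357,544 parameters, of which only about 14.7 million belong to the convolutional layers.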

Convolutional Layers

The convolutional layers in VGG16 learn spatial hierarchies of features automatically from the data. The input to the network is a fixed-size 224 x 224 RGB image. The image is passed through a stack of convolutional (conv.) layers whose filters have a very small receptive field: 3x3, the smallest size that captures the notion of left/right, up/down and center. The convolution stride is 1 pixel and the padding is 1 pixel, so every conv layer preserves the spatial resolution of its input, and each one is followed by a ReLU.
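The arithmetic behind these choices fits in a few lines. The sketch below (hypothetical helper names, assuming square inputs and square kernels) applies the standard output-size formula, shows that stacked 3x3 layers cover the same receptive field as one larger filter, and compares weight counts: three 3x3 layers with C channels need 27C² weights, versus 49C² for a single 7x7 layer, as the paper points out.

```python
def conv_output_size(size, kernel=3, stride=1, padding=1):
    """Spatial output size of a convolution or pooling along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def stacked_receptive_field(num_layers, kernel=3):
    """Receptive field of num_layers stride-1 convs stacked directly on each other."""
    # Each extra kxk layer widens the field by (kernel - 1) pixels.
    return 1 + num_layers * (kernel - 1)


def conv_weights(kernel, channels):
    """Weights (biases excluded) of one kxk conv mapping C channels to C channels."""
    return kernel * kernel * channels * channels


C = 512
print(conv_output_size(224))                     # 3x3, stride 1, pad 1 -> 224
print(conv_output_size(224, kernel=2, stride=2, padding=0))  # 2x2 max pool -> 112
print(stacked_receptive_field(2), stacked_receptive_field(3))  # 5 7
print(3 * conv_weights(3, C), conv_weights(7, C))  # 27*C^2 vs 49*C^2
```

Besides using fewer weights, the stack of three 3x3 layers applies three ReLUs instead of one, which the authors argue makes the decision function more discriminative.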

Fully Connected Layers

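The fully connected layers follow the convolutional layers. The first two have 4096 channels each; the third has 1000 channels, one per ImageNet class, and feeds a softmax layer that turns the scores into class probabilities. These layers are the final stage of the network, where the features extracted by the convolutional layers are combined into a classification decision. They can be seen as a traditional multi-layer perceptron (MLP) whose two hidden layers use ReLU activations and are regularized with dropout (ratio 0.5) during training.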
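A forward pass through such a classifier head can be sketched in plain Python. To keep it readable, the toy weights below are made up and the layers are tiny (4 inputs, 3 hidden units, 2 classes) instead of 25088, 4096, 4096 and 1000; dropout is omitted because it is only active during training.

```python
import math


def dense(x, weights, biases):
    """Fully connected layer: y_j = sum_i W[j][i] * x_i + b_j."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, biases)]


def relu(x):
    return [max(0.0, v) for v in x]


def softmax(logits):
    # Subtracting the max avoids overflow and does not change the result.
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classifier_head(features, layers):
    """ReLU after every dense layer except the last, then softmax."""
    x = features
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        if i < len(layers) - 1:
            x = relu(x)
    return softmax(x)


# Hypothetical toy weights: 4 features -> 3 hidden -> 3 hidden -> 2 classes.
toy_layers = [
    ([[0.5, -0.2, 0.1, 0.0], [0.3, 0.8, -0.5, 0.2], [-0.4, 0.1, 0.9, 0.3]],
     [0.0, 0.1, -0.1]),
    ([[1.0, 0.0, -1.0], [0.2, 0.5, 0.3], [-0.3, 0.7, 0.1]], [0.0, 0.0, 0.0]),
    ([[0.6, -0.4, 0.2], [-0.1, 0.9, 0.4]], [0.0, 0.0]),
]
probs = classifier_head([1.0, 2.0, 0.5, -1.0], toy_layers)
print(probs)  # two class probabilities that sum to 1
```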

Training

Training VGG16 minimizes the multinomial logistic regression objective, i.e. the cross-entropy of the softmax output (often called the softmax loss). The optimizer is mini-batch gradient descent with momentum (batch size 256, momentum 0.9), with L2 weight decay of 0.0005. The learning rate is initially set to 0.01 and is divided by 10 whenever the validation accuracy stops improving. In the original paper it was decreased three times in total, and training stopped after 370K iterations (74 epochs).
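The optimizer update and the plateau-based schedule can be sketched as follows. This is an illustration on plain Python lists, not the original training code: the class `PlateauSchedule`, its `patience` parameter (the paper does not state how "stops improving" was measured) and the function `sgd_momentum_step` are all hypothetical. The update used is v ← μv − η(g + λw), w ← w + v, with momentum μ = 0.9 and weight decay λ = 0.0005.

```python
class PlateauSchedule:
    """Divide the learning rate by `factor` when validation accuracy stalls.

    `patience` (epochs without improvement before a decrease) is an assumed
    parameter chosen for illustration.
    """

    def __init__(self, lr=0.01, factor=10.0, patience=1):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.best = float("-inf")
        self.bad_epochs = 0

    def step(self, val_accuracy):
        if val_accuracy > self.best:
            self.best = val_accuracy
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr /= self.factor
                self.bad_epochs = 0
        return self.lr


def sgd_momentum_step(params, grads, velocity, lr, momentum=0.9, weight_decay=5e-4):
    """One in-place mini-batch update with momentum and L2 weight decay."""
    for i, (p, g) in enumerate(zip(params, grads)):
        g = g + weight_decay * p          # gradient of the L2 penalty
        velocity[i] = momentum * velocity[i] - lr * g
        params[i] = p + velocity[i]


schedule = PlateauSchedule()
for acc in [0.50, 0.60, 0.65, 0.64, 0.70, 0.69]:
    lr = schedule.step(acc)
print(lr)  # the two stalls each divided 0.01 by 10

params, velocity = [1.0], [0.0]
sgd_momentum_step(params, [0.5], velocity, lr=0.1)
print(params)
```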

Applications

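VGG16 is used in many deep learning image classification problems. A pre-trained model can classify images into the 1000 ImageNet classes directly, and its convolutional layers can also serve as a general-purpose feature extractor. It is also popular for transfer learning, where the weights learned on ImageNet initialize a network for a new task; the pre-trained layers are then either kept fixed while a new classifier is trained, or fine-tuned together with it.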

Advantages and Disadvantages

The main advantage of VGG16 is its uniform architecture, which makes it simple to implement and understand. It also performs very well on the ImageNet dataset. However, VGG16 is memory intensive and computationally expensive: it has about 138 million parameters (over 500 MB of weights in 32-bit floating point), roughly three quarters of them in the first fully connected layer. It is also outperformed by later architectures such as Inception and ResNet, which achieve higher ImageNet accuracy with far fewer parameters.
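The computational cost can be estimated by counting multiply-accumulate operations (MACs) for a single 224 x 224 image. The sketch below assumes the same configuration-D layer list and ignores biases, ReLUs and pooling, which add comparatively little; `count_macs` is a hypothetical helper.

```python
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def count_macs(cfg, size=224, in_channels=3, num_classes=1000):
    """Multiply-accumulates for one forward pass (biases, ReLU, pooling ignored)."""
    conv_macs = 0
    channels = in_channels
    for layer in cfg:
        if layer == "M":
            size //= 2  # 2x2 pooling, stride 2
        else:
            # Each output value is a dot product over a 3x3xC_in window.
            conv_macs += size * size * layer * 3 * 3 * channels
            channels = layer
    fc_sizes = [size * size * channels, 4096, 4096, num_classes]
    fc_macs = sum(a * b for a, b in zip(fc_sizes, fc_sizes[1:]))
    return conv_macs, fc_macs


conv_macs, fc_macs = count_macs(VGG16_CFG)
print(f"conv: {conv_macs / 1e9:.2f} GMACs, FC: {fc_macs / 1e9:.2f} GMACs")
```

The result, about 15.3 billion MACs in the convolutional layers against roughly 0.12 billion in the fully connected ones, shows the split behind the two costs: the fully connected layers dominate the parameter count (memory), while the convolutional layers dominate the computation.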