The Mathematics Behind Artificial Neural Networks

Introduction

Artificial Neural Networks (ANNs) are a cornerstone of modern artificial intelligence (AI) and machine learning (ML), loosely modeled on the biological neural networks found in animal brains. They are systems of interconnected nodes, or "neurons," that process information by responding to external inputs. The mathematics behind these networks blends linear algebra, calculus, and statistics, which together provide the foundation for their design and operation.

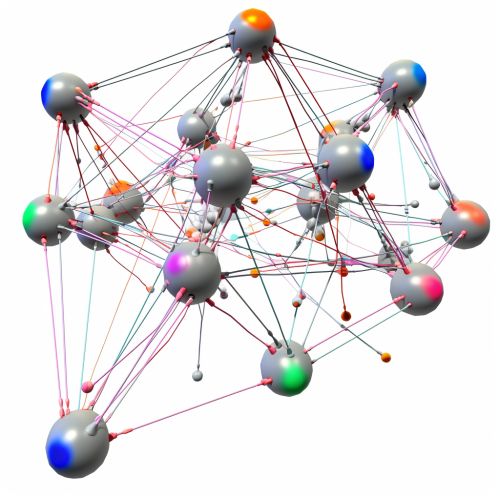

The Basic Structure of an Artificial Neural Network

An ANN is composed of layers of nodes, or "neurons," each of which performs a simple computation on its inputs. These layers include an input layer, one or more hidden layers, and an output layer. In a fully connected network, each node in a layer is connected to every node in the adjacent layers. Each connection carries a numeric "weight," and adjusting these weights is the primary means by which the network learns.
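As a small worked example of this structure, the sketch below counts the connection weights in a fully connected network. The function name `count_weights` and the layer sizes are illustrative assumptions, not taken from any particular library, and bias terms are left out.

```python
def count_weights(layer_sizes):
    """Count connection weights in a fully connected network.

    layer_sizes lists the number of nodes per layer, input layer first.
    Every node connects to every node in the next layer, so two adjacent
    layers of sizes n_in and n_out contribute n_in * n_out weights.
    """
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 3 inputs, one hidden layer of 4 nodes, 2 outputs.
print(count_weights([3, 4, 2]))  # 3*4 + 4*2 = 20
```

Even this toy network has 20 adjustable weights, which hints at why realistic networks can have millions of them.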

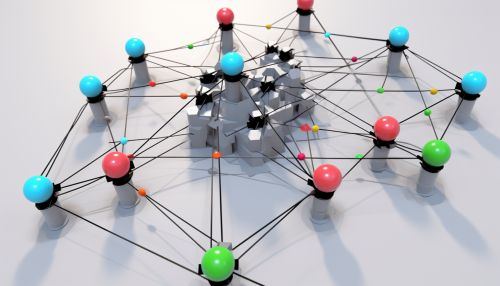

Nodes and Weights

Each node in an ANN receives a set of inputs, multiplies each input by its corresponding weight, and then applies an activation function to the sum of these products to produce its output. This process can be represented mathematically by the equation:

y = f(Σ(w_i * x_i))

where y is the output, f is the activation function, w_i are the weights, and x_i are the inputs.
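The equation above can be sketched directly in Python. The names `neuron_output` and `identity`, along with the sample inputs and weights, are assumptions chosen for illustration.

```python
import math


def neuron_output(inputs, weights, activation):
    """Compute y = f(sum(w_i * x_i)) for a single node."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return activation(weighted_sum)


def identity(z):
    """Trivial activation that returns the weighted sum unchanged."""
    return z


x = [1.0, 2.0, 3.0]
w = [0.5, -1.0, 0.25]

# Weighted sum: 0.5*1 + (-1)*2 + 0.25*3 = -0.75
print(neuron_output(x, w, identity))   # -0.75
# The same node with a non-linear activation (tanh).
print(neuron_output(x, w, math.tanh))
```

Passing the activation as a function argument keeps the weighted sum separate from the choice of f, mirroring the structure of the equation.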

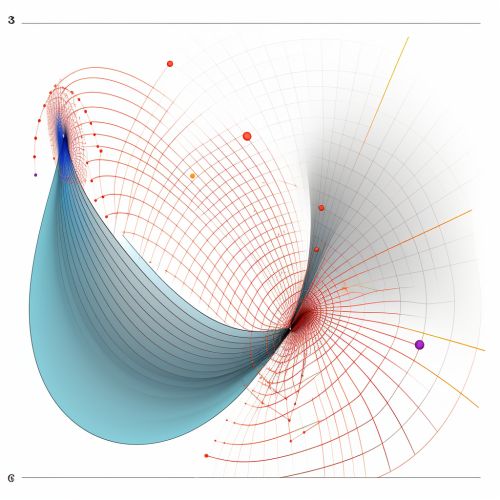

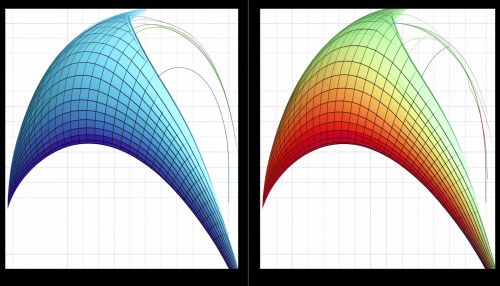

Activation Functions

Activation functions are a critical component of ANNs, determining the output of a node given its weighted inputs. They introduce non-linearity into the model, which is what allows the network to represent relationships that a purely linear model cannot: without them, any stack of layers would collapse into a single linear transformation. There are several types of activation functions used in ANNs, including the sigmoid function, the hyperbolic tangent function, and the rectified linear unit (ReLU) function.
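To see why non-linearity matters, the following sketch compares two stacked layers with and without an activation between them. The helper names (`matvec`, `matmul`, `relu_vector`) and the weight matrices `W1` and `W2` are hypothetical values picked to make the arithmetic easy to follow.

```python
def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def relu_vector(values):
    """Apply max(0, v) to each element."""
    return [max(0.0, v) for v in values]


W1 = [[1.0, -1.0], [-1.0, 1.0]]  # first layer: 2 inputs -> 2 hidden nodes
W2 = [[1.0, 1.0]]                # second layer: 2 hidden nodes -> 1 output
x = [2.0, 5.0]

# Without an activation, two layers equal one layer with weights W2 @ W1.
print(matvec(W2, matvec(W1, x)))               # [0.0]
print(matvec(matmul(W2, W1), x))               # [0.0]

# With ReLU in between, the network computes |x1 - x2|, which is not linear.
print(matvec(W2, relu_vector(matvec(W1, x))))  # [3.0]
```

The first two results always agree, whatever the weights, because matrix multiplication is associative. Only the version with the activation escapes that limitation.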

Sigmoid Function

The sigmoid function, also known as the logistic function, is defined as:

f(x) = 1 / (1 + e^-x)

This function maps any real input to a value strictly between 0 and 1, which can be interpreted as a probability, making it useful for binary classification problems.
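A minimal sketch of the sigmoid in Python follows. Splitting on the sign of x is an added precaution, not part of the definition: it avoids the overflow that `math.exp` raises for very large arguments. The 0.5 decision threshold in the usage lines is likewise an assumption.

```python
import math


def sigmoid(x):
    """Logistic function 1 / (1 + e^-x), written to avoid overflow."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x).
    z = math.exp(x)
    return z / (1.0 + z)


print(sigmoid(0.0))  # 0.5

# Reading the output as a probability for binary classification,
# with an assumed decision threshold of 0.5.
score = 1.2
predicted_class = 1 if sigmoid(score) >= 0.5 else 0
print(predicted_class)  # 1
```

Both branches compute the same mathematical function. In floating point, outputs for inputs of very large magnitude round to exactly 0.0 or 1.0.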

Hyperbolic Tangent Function

The hyperbolic tangent function, or tanh function, is defined as:

f(x) = (e^x - e^-x) / (e^x + e^-x)

Like the sigmoid function, the tanh function is S-shaped, but it maps its inputs to a value between -1 and 1. This gives it a larger output range than the sigmoid function, and its outputs are centered on zero.
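The two functions are closely related: tanh(x) = 2 * sigmoid(2x) - 1, so tanh is a rescaled and shifted sigmoid. The sketch below checks this identity numerically against the definition given above. The function names are illustrative, and the simple sigmoid form used here is only suitable for moderate inputs.

```python
import math


def tanh_from_definition(x):
    """tanh computed directly as (e^x - e^-x) / (e^x + e^-x)."""
    return (math.exp(x) - math.exp(-x)) / (math.exp(x) + math.exp(-x))


def sigmoid(x):
    """Plain logistic function; fine for the moderate inputs used here."""
    return 1.0 / (1.0 + math.exp(-x))


for x in [-2.0, -0.5, 0.0, 0.5, 2.0]:
    direct = tanh_from_definition(x)
    via_sigmoid = 2.0 * sigmoid(2.0 * x) - 1.0
    # Both columns should agree up to floating-point rounding.
    print(f"x={x:+.1f}  tanh={direct:+.6f}  2*sigmoid(2x)-1={via_sigmoid:+.6f}")
```

In practice the built-in `math.tanh` is preferable to the direct formula, which overflows for large inputs.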

Rectified Linear Unit Function

The Rectified Linear Unit (ReLU) function is defined as:

f(x) = max(0, x)

This function maps any negative input to 0 and leaves positive inputs unchanged, making it computationally efficient and widely used in deep learning models.
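ReLU is simple enough to write in one line. The sketch below also includes its derivative, which is needed when training by gradient-based methods. The derivative is undefined at exactly 0; returning 0 there is a common convention and is an assumption of this sketch.

```python
def relu(x):
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def relu_derivative(x):
    """Slope of ReLU: 1 for positive inputs, 0 otherwise.

    The true derivative is undefined at x == 0; this sketch returns 0 there.
    """
    return 1.0 if x > 0 else 0.0


for value in [-3.0, 0.0, 2.5]:
    print(value, relu(value), relu_derivative(value))
```

Neither function needs an exponential, which is the source of the computational efficiency mentioned above.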

Learning in Artificial Neural Networks

Learning in ANNs means adjusting the weights of the network to reduce the error in its output. The gradients that drive these adjustments are computed by a process called backpropagation, and an optimization method such as gradient descent applies them. The process is guided by a loss function, which quantifies the difference between the network's predicted output and the target output.

Backpropagation

Backpropagation is a method used in ANNs to calculate the gradient of the loss function with respect to the weights, applying the chain rule of calculus layer by layer from the output back toward the input. This gradient is then used to update the weights in the direction that reduces the loss, typically by gradient descent. Each training step involves two passes through the network: a forward pass to compute the output and error, and a backward pass to compute the gradient, after which the weights are updated.
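The two passes can be illustrated on the smallest possible multi-layer network: one input, one hidden tanh node with weight `w1`, and one linear output node with weight `w2`. This is a minimal sketch under assumed values; the squared-error loss, learning rate, step count, and starting weights are all illustrative choices. A finite-difference estimate is included as a check on the hand-derived gradients.

```python
import math


def forward(w1, w2, x):
    """Forward pass: hidden activation h, then output y."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss(w1, w2, x, target):
    """Squared-error loss 0.5 * (y - target)^2 for one example."""
    _, y = forward(w1, w2, x)
    return 0.5 * (y - target) ** 2


def backward(w1, w2, x, target):
    """Backward pass: chain rule from the loss back to each weight."""
    h, y = forward(w1, w2, x)
    dL_dy = y - target                  # derivative of the loss w.r.t. the output
    dL_dw2 = dL_dy * h                  # y = w2 * h
    dL_dh = dL_dy * w2                  # propagate the error to the hidden node
    dL_dw1 = dL_dh * (1.0 - h * h) * x  # tanh'(z) = 1 - tanh(z)^2
    return dL_dw1, dL_dw2


def numerical_gradient(w1, w2, x, target, eps=1e-6):
    """Central finite differences, used only to check backward()."""
    d1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    d2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return d1, d2


def train(w1, w2, x, target, learning_rate=0.1, steps=200):
    """Plain gradient descent: step each weight against its gradient."""
    for _ in range(steps):
        g1, g2 = backward(w1, w2, x, target)
        w1 -= learning_rate * g1
        w2 -= learning_rate * g2
    return w1, w2


x, target = 1.5, 0.8
w1, w2 = 0.3, 0.4

print("backprop gradient: ", backward(w1, w2, x, target))
print("numerical gradient:", numerical_gradient(w1, w2, x, target))

trained_w1, trained_w2 = train(w1, w2, x, target)
print("loss before:", loss(w1, w2, x, target))
print("loss after: ", loss(trained_w1, trained_w2, x, target))
```

Larger networks follow the same pattern, with the scalar products replaced by matrix operations and the error passed back through each layer in turn.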

Loss Functions

Loss functions measure the discrepancy between the predicted output of the network and the target output. Common loss functions used in ANNs include mean squared error (MSE) for regression problems and cross-entropy for classification problems.
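Both losses are short to write out. The sketch below shows MSE and the binary (two-class) form of cross-entropy. The function names and sample values are illustrative, and clipping probabilities with `eps` is an added safeguard against taking log(0).

```python
import math


def mean_squared_error(predictions, targets):
    """Average of squared differences between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def binary_cross_entropy(probabilities, labels, eps=1e-12):
    """Average of -[y*log(p) + (1-y)*log(1-p)] over all examples.

    probabilities are predicted chances of class 1; labels are 0 or 1.
    """
    total = 0.0
    for p, y in zip(probabilities, labels):
        p = min(max(p, eps), 1.0 - eps)  # keep p away from exactly 0 or 1
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(labels)


# Regression example: squared errors are 0.25, 0.25 and 0.0.
print(mean_squared_error([2.5, 0.0, 2.0], [3.0, -0.5, 2.0]))

# Classification example: confident, correct predictions give a low loss...
print(binary_cross_entropy([0.9, 0.1], [1, 0]))
# ...while confident, wrong predictions give a much higher one.
print(binary_cross_entropy([0.1, 0.9], [1, 0]))
```

Cross-entropy penalizes confident mistakes far more heavily than MSE would, which is one reason it is usually preferred for classification.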

Conclusion

The mathematics behind artificial neural networks is a complex and fascinating field, combining elements of linear algebra, calculus, and statistics to create systems capable of learning and adapting. Understanding these mathematical principles is crucial to the design and implementation of effective ANNs, and the theory behind them remains an active area of research in artificial intelligence.