Speech Processing

Introduction

Speech processing is a subfield of signal processing and linguistics that focuses on how machines interpret and generate spoken language. It draws on the linguistic, phonetic, and acoustic properties of speech signals to develop algorithms and systems for speech recognition, speech synthesis, speech enhancement, voice conversion, and speaker recognition.

History and Evolution

Speech processing has evolved significantly since its beginnings in the mid-20th century. Early research focused mainly on speech recognition, and the first recognition systems were built in the 1950s; Bell Labs' Audrey system (1952), for example, recognized spoken digits. These early systems could recognize only a small vocabulary of isolated words, typically from a single speaker, and their performance degraded sharply with changes in accent and speaking style.

In the 1970s and 1980s, advances in digital signal processing and computing power made more sophisticated recognizers possible. These systems relied on statistical methods, most notably hidden Markov models (HMMs), to recognize much larger vocabularies, and they were less sensitive to the speaker's accent and speaking style.

In the 1990s and 2000s, speech synthesis and voice conversion received growing research attention alongside speech recognition. Speech synthesis generates human-like speech from text, while voice conversion makes one speaker's voice sound like another's. This work, together with later advances, led to applications such as text-to-speech systems, voice assistants, and voice changers.

Today, speech processing is a multidisciplinary field that combines elements of linguistics, computer science, electrical engineering, and mathematics. It continues to evolve, with current research focusing on areas such as deep learning for speech recognition, emotion recognition from speech, and speech enhancement in noisy environments.

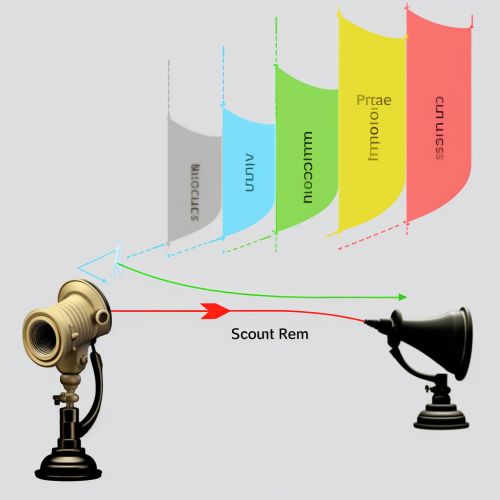

Speech Recognition

Speech recognition is the technology that enables machines to convert spoken language into written text. It is one of the most widely studied and applied areas of speech processing, with applications ranging from voice-controlled virtual assistants to transcription services.

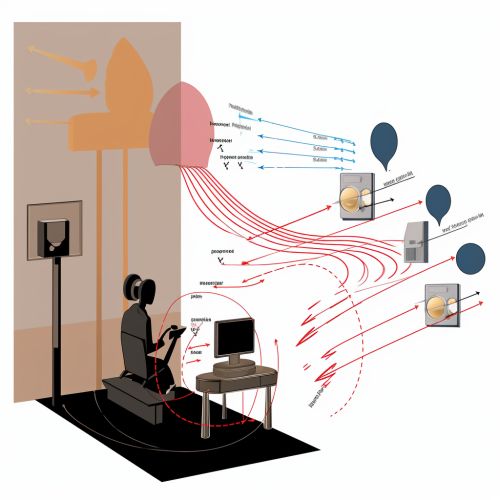

Speech recognition systems typically involve several stages: feature extraction, acoustic modeling, language modeling, and decoding. Feature extraction converts the raw speech signal into a sequence of feature vectors, such as mel-frequency cepstral coefficients (MFCCs), that describe the acoustic properties of short, overlapping frames of speech. Acoustic modeling maps these features to phonetic units, such as phonemes or context-dependent phone states, which a pronunciation lexicon relates to words. Language modeling estimates the probability of a sequence of words, and decoding searches for the most likely word sequence given the acoustic and language models.
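Decoding is often framed as a noisy-channel search: given observed features X, the recognizer looks for the word sequence W that maximizes P(X | W) P(W), where P(X | W) comes from the acoustic model and P(W) from the language model. The Python sketch below illustrates only that final combination step, in the form of n-best rescoring: it trains a tiny add-one-smoothed bigram language model and picks the candidate transcript with the best combined log score. The corpus, the candidate transcripts, their acoustic log-likelihoods, and the `lm_weight` parameter are all hypothetical; a real decoder searches a far larger space (for example, a lattice) rather than a short fixed list.

```python
import math
from collections import Counter


def train_bigram_lm(sentences):
    """Train an add-one (Laplace) smoothed bigram model; return log P(word | prev)."""
    history_counts = Counter()
    bigram_counts = Counter()
    vocab = set()  # every token that can follow a history, including "</s>"
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        vocab.update(tokens[1:])
        history_counts.update(tokens[:-1])
        bigram_counts.update(zip(tokens[:-1], tokens[1:]))
    vocab_size = len(vocab)

    def log_prob(prev, word):
        return math.log((bigram_counts[(prev, word)] + 1) / (history_counts[prev] + vocab_size))

    return log_prob


def lm_score(log_prob, sentence):
    """Log P(W) of a whole sentence under the bigram model."""
    tokens = ["<s>"] + sentence.split() + ["</s>"]
    return sum(log_prob(prev, word) for prev, word in zip(tokens[:-1], tokens[1:]))


def rescore(nbest, log_prob, lm_weight=1.0):
    """Return the hypothesis maximizing log P(X|W) + lm_weight * log P(W).

    nbest is a list of (transcript, acoustic_log_likelihood) pairs.
    """
    best_text, _ = max(
        nbest, key=lambda hyp: hyp[1] + lm_weight * lm_score(log_prob, hyp[0])
    )
    return best_text


# Hypothetical training text and recognizer output.
corpus = ["recognize speech", "recognize speech today", "wreck a nice beach"]
lm = train_bigram_lm(corpus)
nbest = [("wreck a nice beach", -10.0), ("recognize speech", -10.5)]

print(rescore(nbest, lm))                 # recognize speech
print(rescore(nbest, lm, lm_weight=0.0))  # wreck a nice beach
```

With the language-model weight set to 0, the acoustically preferred "wreck a nice beach" wins; with the language model included, the decision shifts to "recognize speech". In practice the weight is tuned on held-out data.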

Speech recognition technology has improved significantly over the years, thanks largely to advances in machine learning, particularly deep learning. Modern speech recognition systems, such as those used by Google, Apple, and Amazon, can recognize a wide range of accents and dialects and handle large vocabularies and complex sentences.

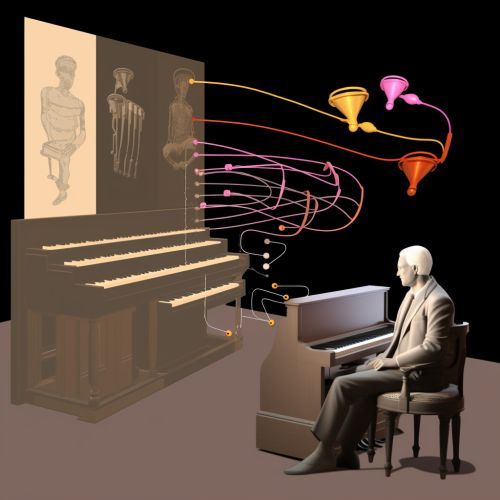

Speech Synthesis

Speech synthesis, also known as text-to-speech (TTS), is the process of generating spoken language from written text. It is used in a variety of applications, including reading aids for the visually impaired, voice announcements in public transport systems, and voice assistants.

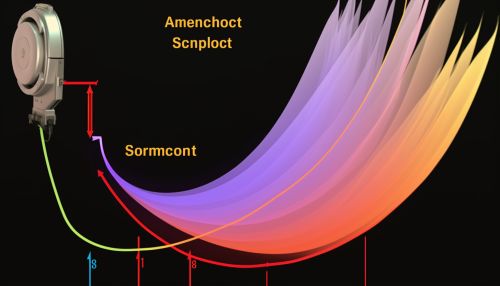

A typical speech synthesis system involves several stages, including text analysis, prosody prediction, and speech generation. Text analysis normalizes the input text (for example, expanding numbers and abbreviations into words) and converts it into a sequence of phonetic units. Prosody prediction determines the rhythm, stress, and intonation of the speech. Speech generation produces the speech waveform from the phonetic and prosodic information.
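The text-analysis stage can be illustrated with a deliberately tiny Python sketch: it normalizes the text (lowercasing, dropping punctuation, spelling out digits) and then looks up each word in a pronunciation lexicon to obtain a phoneme sequence. The lexicon, the digit-by-digit number rule, and the function names are illustrative assumptions; the entries use the ARPAbet notation of the CMU Pronouncing Dictionary. Prosody prediction and waveform generation are not shown.

```python
import re

# Hypothetical miniature lexicon in ARPAbet notation (digits mark vowel stress).
# A real system would use a full dictionary plus a grapheme-to-phoneme model
# for words the dictionary does not cover.
LEXICON = {
    "the": ["DH", "AH0"],
    "train": ["T", "R", "EY1", "N"],
    "leaves": ["L", "IY1", "V", "Z"],
    "at": ["AE1", "T"],
    "two": ["T", "UW1"],
}

DIGIT_WORDS = ["zero", "one", "two", "three", "four",
               "five", "six", "seven", "eight", "nine"]


def normalize(text):
    """Lowercase the text, drop punctuation, and spell out digit strings."""
    words = []
    for token in re.findall(r"[a-z]+|[0-9]+", text.lower()):
        if token.isdigit():
            # Simplification: read numbers digit by digit ("23" -> "two three").
            words.extend(DIGIT_WORDS[int(d)] for d in token)
        else:
            words.append(token)
    return words


def to_phonemes(text):
    """Convert text to a flat phoneme sequence by dictionary lookup."""
    phonemes = []
    for word in normalize(text):
        if word not in LEXICON:
            raise KeyError(f"no pronunciation for {word!r}")
        phonemes.extend(LEXICON[word])
    return phonemes


print(to_phonemes("The train leaves at 2."))
# ['DH', 'AH0', 'T', 'R', 'EY1', 'N', 'L', 'IY1', 'V', 'Z', 'AE1', 'T', 'T', 'UW1']
```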

Modern speech synthesis systems use machine learning and deep learning techniques to generate high-quality, natural-sounding speech. These systems can mimic the prosody and voice quality of human speakers, and they can generate speech in a variety of voices and languages.

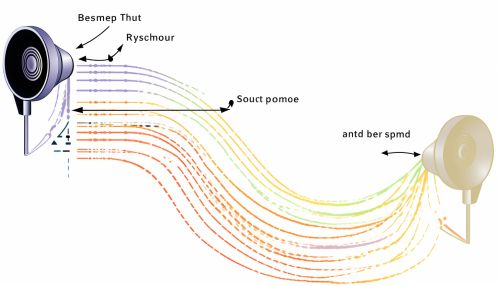

Speech Enhancement

Speech enhancement refers to the process of improving the quality of speech signals in noisy environments. It is an important area of speech processing, with applications in telecommunications, hearing aids, and voice-controlled systems.

Speech enhancement techniques aim to reduce background noise, echo, and reverberation while preserving the quality and intelligibility of the speech. Classical techniques include spectral subtraction, which removes an estimate of the noise spectrum obtained through noise estimation, and adaptive filtering, which is widely used for acoustic echo cancellation.
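Spectral subtraction with a simple noise estimate fits in a few lines of Python. This sketch assumes the noisy signal has already been split into frames and converted to power spectra with a short-time Fourier transform (not shown), and that the first few frames contain only noise. The frame values, the over-subtraction factor `alpha`, and the spectral floor `beta` are illustrative choices, not recommended settings. To resynthesize audio, each complex STFT bin would be scaled by sqrt(enhanced / noisy) while keeping the noisy phase, followed by an inverse STFT.

```python
def estimate_noise(power_frames, n_noise_frames):
    """Noise estimation: average the power spectra of the first n frames,
    which are assumed to contain background noise only (no speech)."""
    noise_frames = power_frames[:n_noise_frames]
    n_bins = len(noise_frames[0])
    return [sum(frame[k] for frame in noise_frames) / len(noise_frames)
            for k in range(n_bins)]


def spectral_subtract(power_frames, noise_psd, alpha=1.0, beta=0.01):
    """Power spectral subtraction, per frequency bin:
    max(|Y|^2 - alpha * |N|^2, beta * |N|^2).

    alpha > 1 over-subtracts; the floor beta * |N|^2 keeps every bin positive
    and limits "musical noise" artifacts.
    """
    return [[max(p - alpha * n, beta * n) for p, n in zip(frame, noise_psd)]
            for frame in power_frames]


# Hypothetical power spectra: 4 frames x 3 frequency bins.
frames = [
    [0.75, 2.5, 0.5],   # noise only
    [1.25, 1.5, 0.5],   # noise only
    [6.0, 2.5, 0.75],   # speech + noise
    [1.0, 6.0, 0.25],   # speech + noise
]
noise = estimate_noise(frames, n_noise_frames=2)  # [1.0, 2.0, 0.5]
enhanced = spectral_subtract(frames, noise)
print(enhanced[2])  # [5.0, 0.5, 0.25]
```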

Modern speech enhancement systems use machine learning and deep learning techniques to improve the quality of speech signals. These systems can adapt to different noise conditions and can enhance speech signals in real time.

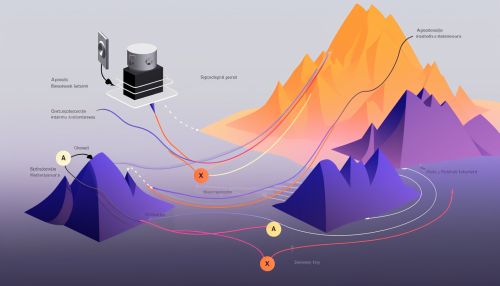

Voice Conversion

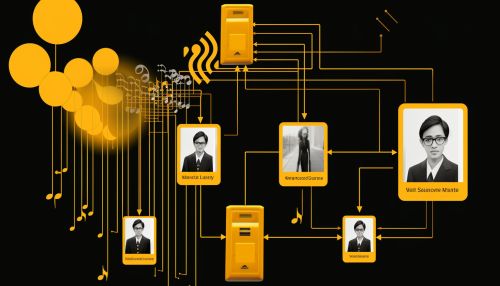

Voice conversion is the process of transforming one speaker's voice so that it sounds like another speaker's while preserving the linguistic content. It is a younger area of speech processing than recognition and synthesis, with applications in voice disguise, personalized text-to-speech systems, and testing how well speaker recognition systems resist spoofing attacks.

Voice conversion systems typically involve several stages, including feature extraction, mapping, and speech synthesis. Feature extraction involves extracting the acoustic features of the source and target voices. Mapping involves finding a transformation that maps the features of the source voice to the features of the target voice. Speech synthesis involves generating the converted speech from the mapped features.
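For the pitch part of the mapping stage, a widely used baseline matches the mean and standard deviation of log F0 between the source and target speakers. The Python sketch below assumes F0 contours have already been extracted (0 marks unvoiced frames) and uses made-up values. It does not map the spectral envelope, which is where statistical or neural mapping models do most of the work.

```python
import math
from statistics import mean, stdev


def log_f0_stats(f0_contour):
    """Mean and standard deviation of log F0 over voiced frames (F0 > 0 Hz)."""
    log_f0 = [math.log(f) for f in f0_contour if f > 0]
    return mean(log_f0), stdev(log_f0)


def convert_f0(source_f0, source_stats, target_stats):
    """Linear transformation in the log-F0 domain.

    Each voiced value is standardized with the source statistics and rescaled
    with the target statistics; unvoiced frames (F0 == 0) stay at 0.
    """
    mu_s, sigma_s = source_stats
    mu_t, sigma_t = target_stats
    return [
        math.exp(mu_t + (sigma_t / sigma_s) * (math.log(f) - mu_s)) if f > 0 else 0.0
        for f in source_f0
    ]


# Hypothetical F0 contours in Hz (0 marks unvoiced frames).
source_f0 = [100.0, 0.0, 120.0, 110.0, 0.0, 90.0]
target_f0 = [210.0, 230.0, 0.0, 250.0, 190.0]

converted = convert_f0(source_f0, log_f0_stats(source_f0), log_f0_stats(target_f0))
print([round(f, 1) for f in converted])
```

Because the transformation is linear in the log domain, the converted contour keeps the source speaker's intonation shape while taking on the target speaker's average pitch and pitch range.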

Modern voice conversion systems use machine learning and deep learning techniques to achieve high-quality voice conversion. These systems can convert voices across genders, ages, and languages, and they can mimic the voice characteristics of specific individuals.

Speaker Recognition

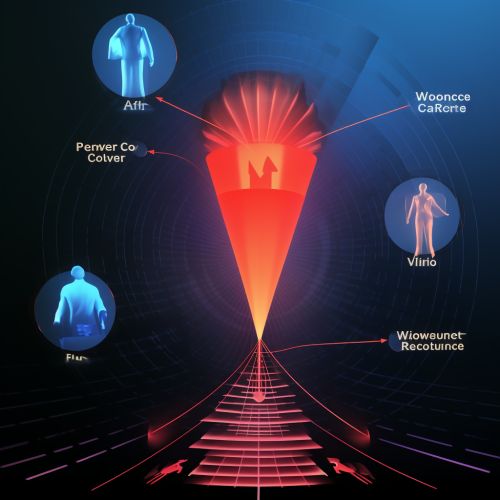

Speaker recognition is the technology that enables machines to recognize individuals based on their voice. It is used in a variety of applications, including biometric authentication, voice-controlled systems, and forensic investigations.

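Speaker recognition systems typically involve two stages: feature extraction and matching. Feature extraction derives acoustic features that characterize the speaker's voice. Matching compares these features with models of known speakers: speaker identification searches a database of enrolled speakers for the best match, while speaker verification compares the features with a single claimed identity and accepts the claim if the similarity exceeds a threshold.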
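Matching is commonly implemented by comparing fixed-length speaker embeddings (for example, i-vectors or x-vectors produced by a separately trained model) with a similarity score. The Python sketch below uses cosine similarity both to pick the best-matching enrolled speaker and to accept or reject a single claimed identity against a threshold. The embeddings, speaker names, and the 0.7 threshold are hypothetical; in practice the threshold is tuned on development data to balance false acceptances against false rejections.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(test_embedding, enrolled):
    """Closed-set identification: name of the best-scoring enrolled speaker."""
    return max(enrolled, key=lambda name: cosine_similarity(test_embedding, enrolled[name]))


def verify(test_embedding, claimed_embedding, threshold=0.7):
    """Verification: accept the identity claim if the score reaches the threshold."""
    return cosine_similarity(test_embedding, claimed_embedding) >= threshold


# Hypothetical 3-dimensional embeddings; real ones typically have hundreds of dimensions.
enrolled = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.5]}
test_embedding = [0.85, 0.15, 0.25]

print(identify(test_embedding, enrolled))         # alice
print(verify(test_embedding, enrolled["alice"]))  # True
print(verify(test_embedding, enrolled["bob"]))    # False
```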

Modern speaker recognition systems use machine learning and deep learning techniques to achieve high accuracy and robustness. These systems can recognize speakers across different languages and accents, although variations in speech due to illness, aging, and emotional state can still reduce accuracy.

Future Directions

The field of speech processing continues to evolve. Progress in active research areas such as deep learning for speech recognition, emotion recognition from speech, and speech enhancement in noisy environments could lead to more accurate and robust systems and to new applications in healthcare, entertainment, and security.