Optimal Control Theory

Introduction

Optimal control theory is a branch of mathematical optimization that deals with finding a control for a dynamical system over a period of time such that an objective function is optimized. It has numerous applications in both science and engineering. For example, the method is used in economics to maximize profit, in navigation to minimize the risk of collision, and in machine learning to improve the efficiency of algorithms.

History and Development

The development of optimal control theory began in the late 1950s and early 1960s, building on the classical calculus of variations. The field was primarily motivated by problems in aerospace engineering, in particular the need to optimize rocket trajectories. Two works are commonly regarded as foundational: Richard E. Bellman's 1957 book "Dynamic Programming" and "The Mathematical Theory of Optimal Processes" by Lev Pontryagin and his colleagues, published in English translation in 1962.

Mathematical Formulation

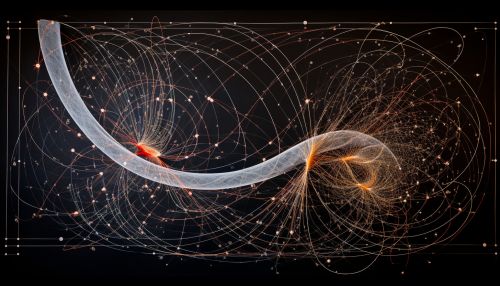

The mathematical formulation of an optimal control problem draws on the calculus of variations, differential equations, and numerical methods. The objective is to find a control function that optimizes a performance measure while satisfying constraints, which usually take the form of differential equations describing the dynamics of the system.

The problem can be formulated in the following way:

Given a dynamical system described by the differential equation

- dx/dt = f(x, u, t)

where x is the state of the system, u is the control, and t is time, and given an objective function

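- J = ∫ L(x, u, t) dt, integrated from the initial time t0 to the final time tf

where L is the running cost (sometimes called the Lagrangian, by analogy with the calculus of variations), the problem is to find the control u(t) that minimizes (or maximizes) the objective function J subject to the differential equation, typically together with a given initial state x(t0) = x0.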
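
As a rough illustration of the formulation above, the following Python sketch evaluates J for a given control by forward-Euler integration of the dynamics. Everything specific in it is an assumption chosen for illustration and does not come from the source: the scalar dynamics f(x, u, t) = -x + u, the running cost L = x² + u², the horizon from t0 = 0 to tf = 2, the initial state x0 = 1, the two candidate controls, and the helper name `simulate_cost`.

```python
import math


def simulate_cost(f, L, u, x0, t0, tf, steps=10000):
    """Integrate dx/dt = f(x, u, t) with forward Euler and accumulate
    J = integral of L(x, u, t) dt as a left Riemann sum.

    Returns the final state and the approximate cost.
    """
    dt = (tf - t0) / steps
    x, t, J = x0, t0, 0.0
    for _ in range(steps):
        ut = u(t)                 # open-loop control value at time t
        J += L(x, ut, t) * dt     # accumulate running cost
        x += f(x, ut, t) * dt     # Euler step for the state
        t += dt
    return x, J


# Hypothetical example problem (not from the source).
def f(x, u, t):
    return -x + u                 # assumed scalar dynamics


def L(x, u, t):
    return x**2 + u**2            # assumed quadratic running cost


# Candidate 1: apply no control at all.
x_zero, J_zero = simulate_cost(f, L, lambda t: 0.0, x0=1.0, t0=0.0, tf=2.0)

# Candidate 2: an arbitrary decaying control that pushes the state toward zero.
x_damped, J_damped = simulate_cost(
    f, L, lambda t: -0.4 * math.exp(-1.4 * t), x0=1.0, t0=0.0, tf=2.0
)

print(f"zero control:     J = {J_zero:.4f}")
print(f"decaying control: J = {J_damped:.4f}")
```

Comparing the two printed costs shows how the choice of u(t) changes J. An optimal control method searches over all admissible controls rather than over a handful of hand-picked candidates.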

Solution Methods

There are several methods for solving optimal control problems. The most common is Pontryagin's minimum principle (also known as the maximum principle), which provides necessary conditions for optimality. Another is the Hamilton–Jacobi–Bellman equation, a partial differential equation for the optimal cost-to-go whose solution provides a sufficient condition for optimality. Because these conditions rarely admit closed-form solutions, numerical methods are often used to solve them.
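
For reference, the two conditions named above can be written out as follows. This is a sketch in common textbook notation, under assumptions not stated in the source: a minimization problem on a fixed horizon from t_0 to t_f, a given initial state x(t_0) = x_0, a free terminal state, and no terminal cost. The costate λ, the Hamiltonian H, and the value function V are symbols introduced here for illustration.

```latex
% Pontryagin's minimum principle: necessary conditions for an optimal pair (x^*, u^*)
\begin{align}
  H(x, u, \lambda, t) &= L(x, u, t) + \lambda^{\top} f(x, u, t) \\
  \dot{x}^{*} &= f(x^{*}, u^{*}, t), \qquad x^{*}(t_0) = x_0 \\
  \dot{\lambda} &= -\frac{\partial H}{\partial x}(x^{*}, u^{*}, \lambda, t), \qquad \lambda(t_f) = 0 \\
  u^{*}(t) &= \arg\min_{u} H\bigl(x^{*}(t), u, \lambda(t), t\bigr)
\end{align}

% Hamilton-Jacobi-Bellman equation for the value function V(x, t)
\begin{equation}
  -\frac{\partial V}{\partial t}(x, t)
    = \min_{u} \left[ L(x, u, t) + \frac{\partial V}{\partial x}(x, t)^{\top} f(x, u, t) \right],
  \qquad V(x, t_f) = 0
\end{equation}
```

For a maximization problem the minimum over u is replaced by a maximum. The minimum principle leads to a two-point boundary-value problem in x and λ, while the Hamilton–Jacobi–Bellman equation must be solved over the whole state space, which is one reason numerical methods are needed in practice.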

Applications

Optimal control theory has a wide range of applications in various fields. In economics, it is used to maximize profit or minimize cost. In engineering, it is used to optimize the performance of systems. In computer science, it is used in machine learning algorithms to improve their efficiency. In biology, it is used to model the behavior of biological systems. In medicine, it is used to optimize treatment strategies.