Newton's method

Introduction

Newton's method is a root-finding algorithm that produces successively better approximations to the roots (or zeroes) of a real-valued function. Named after Isaac Newton, the method is also known as the Newton-Raphson method, after Joseph Raphson who independently developed a similar method.

Mathematical Formulation

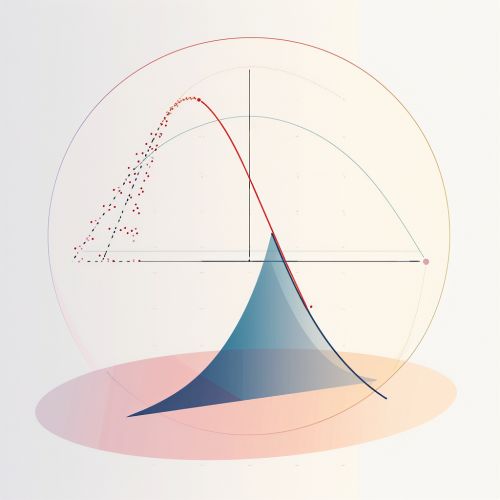

The Newton-Raphson method is based on the simple idea of linear approximation. The method starts with a function f defined over the real numbers x, an initial guess x0 for a root of f, and the derivative f' of f. If the function satisfies the assumptions made in the derivation of the formula and the initial guess is close, then a better approximation x1 is

- x1 = x0 - f(x0)/f'(x0)

The process is repeated as

- xn+1 = xn - f(xn)/f'(xn)

until a sufficiently accurate value is reached.

Convergence

The convergence of Newton's method is quadratic, meaning it generally converges on the root very quickly, provided the initial guess is close enough to the root. However, there are several situations where the Newton-Raphson method fails to converge to the root, or converges slowly. These include cases where the derivative is zero, or the function is not differentiable.

Applications

Newton's method has wide applications in numerical computing. It is used in optimization to find the maximum or minimum of functions. It is also used in machine learning algorithms for minimizing cost functions, in computer graphics, and in solving systems of non-linear equations.

Limitations and Alternatives

While Newton's method is powerful, it has limitations. It might not converge if the initial guess is not close to the true root. It also requires the function to be differentiable. When these conditions are not met, other methods such as the bisection method, the secant method, or fixed-point iteration may be more appropriate.