Mish activation function

Introduction

Mish is an activation function used in artificial neural networks. It was proposed by Diganta Misra in 2019 as a self-regularized, non-monotonic activation function. In the benchmarks reported with the proposal, it outperformed several other activation functions on accuracy and loss across a range of deep learning tasks.

Mathematical Definition

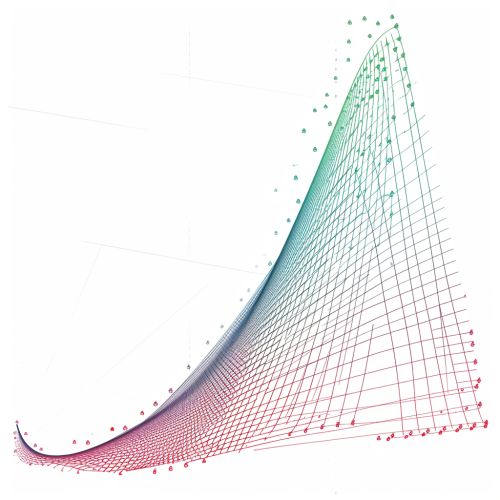

The Mish activation function is defined mathematically as:

- Mish(x) = x * tanh(softplus(x))

where softplus(x) = ln(1 + e^x), with ln the natural logarithm, is itself a smooth activation function. Mish is therefore the product of the input x and the hyperbolic tangent of the softplus of x.
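The definition above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function names `softplus` and `mish` are chosen here for clarity, and `np.logaddexp` is used as one way (an assumption of this sketch) to evaluate ln(1 + e^x) without overflow for large inputs.

```python
import numpy as np

def softplus(x):
    # ln(1 + e^x), written as ln(e^0 + e^x) so that large x does not overflow.
    return np.logaddexp(0.0, x)

def mish(x):
    # Mish(x) = x * tanh(softplus(x)), applied element-wise.
    x = np.asarray(x, dtype=float)
    return x * np.tanh(softplus(x))

if __name__ == "__main__":
    sample = np.array([-5.0, -1.0, 0.0, 1.0, 5.0])
    print(mish(sample))
```

For large positive inputs tanh(softplus(x)) approaches 1, so Mish behaves like the identity; for large negative inputs it approaches 0.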

Properties

The Mish activation function possesses several properties that make it suitable for use in artificial neural networks. These include:

- Non-monotonicity: Unlike ReLU and many other activation functions, Mish is not monotonic. It decreases for inputs below about -1.19, where it reaches its minimum, and increases for inputs above that point. Small negative inputs therefore produce small negative outputs instead of being set to zero, which preserves some information and gradient for negative activations.

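- Boundedness: The output of Mish is bounded below by approximately -0.31 and is unbounded above. Being unbounded above avoids saturation for large positive inputs, where near-zero gradients would slow training. Being bounded below limits the magnitude of negative activations, which is usually described as having a regularizing effect.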

- Continuity and Differentiability: Mish is continuous and differentiable everywhere, unlike ReLU, which has a kink at zero. A well-defined derivative at every point matters because backpropagation trains the network by passing gradients through each activation.

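- Self-Regularization: Mish is described by its author as self-regularized, meaning that the shape of the function and its gradient is intended to reduce the risk of overfitting. This is a claimed tendency rather than a guarantee, and Mish is commonly used together with explicit regularization techniques.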
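The first three properties can be checked numerically. The sketch below is illustrative only: it evaluates Mish on a grid over [-10, 10] (an arbitrary range chosen for this example), locates the minimum, and compares a derivative obtained from the chain rule against a central finite difference. The helper name `mish_grad` is hypothetical and not taken from any library.

```python
import numpy as np

def softplus(x):
    # ln(1 + e^x), evaluated without overflow.
    return np.logaddexp(0.0, x)

def mish(x):
    return x * np.tanh(softplus(x))

def mish_grad(x):
    # Chain rule, using d/dx softplus(x) = sigmoid(x)
    # and d/dz tanh(z) = 1 - tanh(z)^2.
    t = np.tanh(softplus(x))
    sigmoid = np.exp(-softplus(-x))  # equals 1 / (1 + e^-x)
    return t + x * (1.0 - t ** 2) * sigmoid

xs = np.linspace(-10.0, 10.0, 200001)
ys = mish(xs)
grads = mish_grad(xs)

# Boundedness: location and value of the minimum on the grid.
i_min = int(np.argmin(ys))
x_min, y_min = float(xs[i_min]), float(ys[i_min])

# Non-monotonicity: the derivative takes both signs.
decreasing_somewhere = bool(np.any(grads < 0))
increasing_somewhere = bool(np.any(grads > 0))

# Differentiability: the analytic derivative agrees with a finite difference.
h = 1e-5
numeric = (mish(xs + h) - mish(xs - h)) / (2 * h)
max_grad_error = float(np.max(np.abs(numeric - grads)))

if __name__ == "__main__":
    print("minimum value:", y_min, "at x =", x_min)
    print("decreasing somewhere:", decreasing_somewhere)
    print("increasing somewhere:", increasing_somewhere)
    print("max derivative mismatch:", max_grad_error)
```

The self-regularization property is not something a check like this can demonstrate; it concerns training behavior, not the shape of the curve alone.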

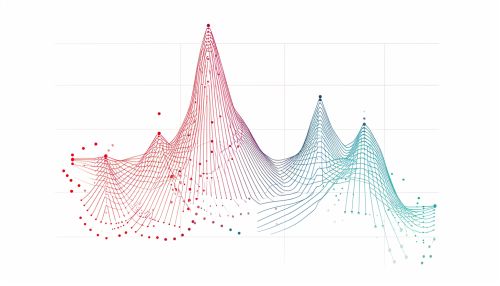

Comparison with Other Activation Functions

Mish has been compared with several other activation functions used in artificial neural networks, including ReLU, Leaky ReLU, ELU, SELU, and Swish. In many of the reported comparisons, Mish achieved better accuracy and loss than these functions, although the size of the difference depends on the architecture, dataset, and training setup.
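To see how the shapes of these functions differ, the following sketch evaluates each of them at a few inputs. It compares function values only and says nothing about training accuracy. The parameter choices are assumptions of this example: a slope of 0.01 for Leaky ReLU, alpha = 1 for ELU, and beta = 1 for Swish are common defaults, and the SELU constants are the standard values from its definition.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def leaky_relu(x, slope=0.01):
    # slope=0.01 is an assumed, commonly used default.
    return np.where(x > 0, x, slope * x)

def elu(x, alpha=1.0):
    # expm1 is only evaluated on non-positive values to avoid overflow.
    return np.where(x > 0, x, alpha * np.expm1(np.minimum(x, 0.0)))

def selu(x):
    alpha = 1.6732632423543772
    scale = 1.0507009873554805
    return scale * elu(x, alpha)

def swish(x):
    # Swish with beta = 1: x * sigmoid(x).
    return x / (1.0 + np.exp(-x))

def mish(x):
    return x * np.tanh(np.logaddexp(0.0, x))

activations = {
    "ReLU": relu,
    "Leaky ReLU": leaky_relu,
    "ELU": elu,
    "SELU": selu,
    "Swish": swish,
    "Mish": mish,
}

if __name__ == "__main__":
    xs = np.array([-3.0, -1.0, 0.0, 1.0, 3.0])
    for name, fn in activations.items():
        print(f"{name:>10}:", np.round(fn(xs), 4))
```

Among these, only Swish and Mish are non-monotonic: both dip below zero for negative inputs and then return toward zero, whereas the ReLU family and ELU/SELU never decrease as the input grows.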

Applications

Mish has been used in a variety of deep learning tasks, including image classification, object detection, and natural language processing. Because it is applied element-wise like other activation functions, it can usually be substituted into an existing network without other architectural changes.