Markov chains

Introduction

A Markov chain is a mathematical model that transitions from one state to another within a finite or countable number of possible states. It is a type of stochastic process characterized by the Markov property. This property asserts that the probability of transitioning to any particular state depends solely on the current state and time elapsed, and not on the sequence of states that preceded it. This unique type of "memorylessness" is referred to as the Markov property.

Historical Background

The concept of Markov chains was first introduced by Russian mathematician Andrey Markov in 1906. Markov's development of this concept was driven by a disagreement with another mathematician, Pafnuty Chebyshev, who asserted that the Law of Large Numbers could only be applied to independent events. Markov utilized his chains to demonstrate that this was not the case, and that the law could also apply to events that were dependent on each other.

Defining Markov Chains

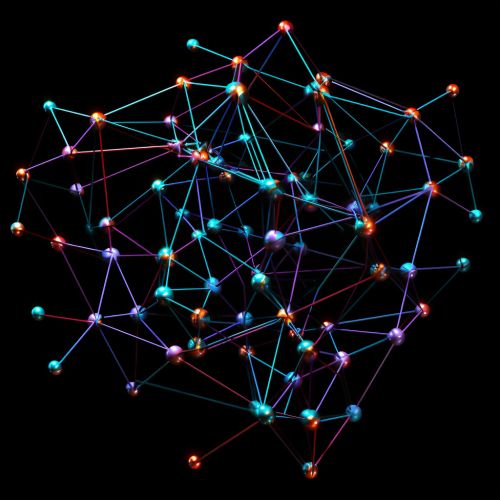

A Markov chain is defined by a collection of random variables, each of which represents an individual state in the system. These variables are indexed by a set, which can be either finite or countable. The Markov property, a key characteristic of Markov chains, states that the probability of moving to the next state depends only on the current state and not on the past states.

Transition Matrix

The transitions between states in a Markov chain are represented by a transition matrix. The entries in this matrix represent the probabilities of moving from one state to another. For a Markov chain with n states, the transition matrix will be an n x n matrix, where each entry a_ij represents the probability of transitioning from state i to state j.

Classification of States

In a Markov chain, states can be classified in various ways. A state is said to be 'accessible' from another state if there exists a sequence of steps that leads from the first state to the second. If every state in a Markov chain is accessible from every other state, the chain is said to be 'irreducible'.

Stationary Distribution

A Markov chain has a stationary distribution if there exists a probability distribution that remains unchanged in the Markov chain as time progresses. This distribution is also known as the equilibrium or invariant distribution.

Applications of Markov Chains

Markov chains have broad applications in various fields such as physics, chemistry, economics, and computer science. They are used to model systems that follow a chain of linked events, where each event depends only on the state achieved in the previous event.