Markov

Introduction

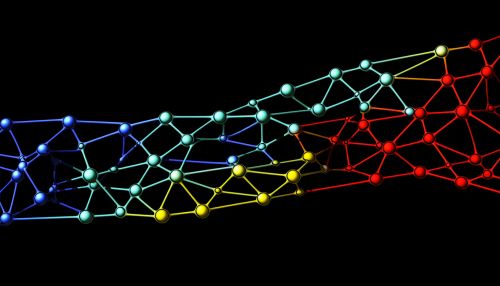

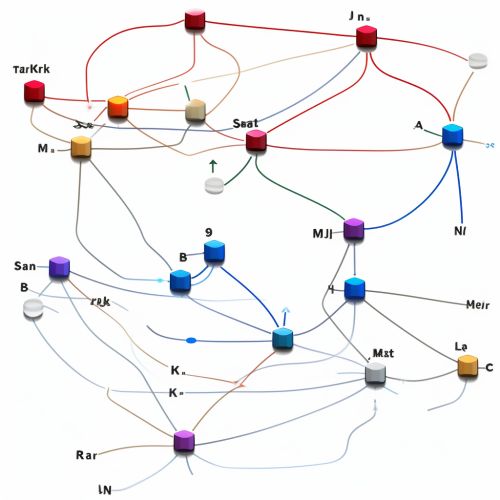

A Markov chain is a mathematical model of a system that moves from one state to another within a finite or countable set of possible states. It is a random process characterized as memoryless: the probability distribution of the next state depends only on the current state and not on the sequence of events that preceded it. This specific kind of "memorylessness" is called the Markov property.
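As a minimal sketch of this memoryless behavior, the following Python snippet simulates a small chain. The two weather states, their transition probabilities, and the helper names `next_state` and `simulate` are hypothetical and chosen only for illustration. The point to notice is that `next_state` receives the current state and nothing else.

```python
import random

# Hypothetical two-state weather model. The states and probabilities are
# illustrative assumptions, not taken from the text.
TRANSITIONS = {
    "sunny": {"sunny": 0.9, "rainy": 0.1},
    "rainy": {"sunny": 0.5, "rainy": 0.5},
}


def next_state(current, rng):
    """Sample the next state using only the current state (Markov property)."""
    states = list(TRANSITIONS[current])
    weights = [TRANSITIONS[current][s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]


def simulate(start, steps, seed=0):
    """Return a path of steps + 1 states, beginning at start."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    path = [start]
    for _ in range(steps):
        # Only path[-1] is consulted; the earlier history is never read.
        path.append(next_state(path[-1], rng))
    return path


if __name__ == "__main__":
    print(simulate("sunny", 10))
```

Storing the transition probabilities as a dictionary of rows keeps the example readable. A numeric transition matrix would serve equally well for larger state spaces.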

History

The Markov chain is named after the Russian mathematician Andrey Andreyevich Markov, who introduced the concept in the early 1900s in his research on stochastic processes, a branch of mathematics that deals with systems that evolve over time in a way that is not entirely predictable. One of Markov's early applications was a study of letter sequences in Russian text (Pushkin's Eugene Onegin), in which he used a chain to model the alternation of vowels and consonants.

Definition

A Markov chain is a sequence of random variables X1, X2, X3, ..., with the Markov property: the probability of moving to the next state depends only on the current state, and not on the sequence of states that preceded it.
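In symbols, the Markov property says that the conditional distribution of the next state given the whole history equals the conditional distribution given the current state alone. The notation below is a conventional choice rather than something fixed by the text: x and x_1, ..., x_n denote arbitrary states, and the identity is understood to hold whenever the conditioning event has positive probability. The second line additionally assumes a time-homogeneous chain, in which the transition probabilities p_ij do not change with n.

```latex
\[
  P(X_{n+1} = x \mid X_1 = x_1, X_2 = x_2, \dots, X_n = x_n)
  = P(X_{n+1} = x \mid X_n = x_n)
\]
\[
  p_{ij} = P(X_{n+1} = j \mid X_n = i), \qquad \sum_{j} p_{ij} = 1 \ \text{for every state } i
\]
```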

Properties

The states of a Markov chain can be classified by several properties that determine the chain's long-run behavior:

1. **Transience and Recurrence**: A state in a Markov chain is transient if, starting from that state, there is a nonzero probability that the chain never returns to it. Conversely, a state is recurrent if the chain returns to it with probability 1.

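2. **Periodicity**: A state in a Markov chain is periodic with period k > 1 if the chain can return to the state only at multiples of k, where k is the greatest common divisor of all possible return times. A state with period 1 is called aperiodic.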

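3. **Ergodicity**: A state in a Markov chain is ergodic if it is aperiodic and positive recurrent. In other words, the chain returns to the state with probability 1, the expected return time is finite, and the possible return times are not restricted to multiples of some integer larger than 1.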
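For a chain with finitely many states, the three classifications above can be checked directly from the transition matrix. The sketch below is illustrative only: the function names and the example matrix `EXAMPLE` are hypothetical. It relies on two facts that hold for finite chains but not in general: a state is recurrent exactly when every state reachable from it can reach it back, and every recurrent state is positive recurrent.

```python
from math import gcd

# Hypothetical 3-state chain. States 0 and 1 alternate forever, while
# state 2 either stays put or falls into state 0 and never comes back.
EXAMPLE = [
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.0],
    [0.5, 0.0, 0.5],
]


def reachable(P, start):
    """Return the set of states reachable from start in zero or more steps."""
    seen = {start}
    stack = [start]
    while stack:
        i = stack.pop()
        for j, p in enumerate(P[i]):
            if p > 0 and j not in seen:
                seen.add(j)
                stack.append(j)
    return seen


def is_recurrent(P, i):
    """Finite chains only: i is recurrent iff every state reachable
    from i can also reach i."""
    return all(i in reachable(P, j) for j in reachable(P, i))


def period(P, i):
    """gcd of the step counts at which a return to i has positive
    probability. Returns 0 if no return is possible."""
    n = len(P)
    current = {i}  # states occupied with positive probability after k steps
    g = 0
    # For an n-state chain, inspecting return times up to 3 * n is enough
    # to pin down the gcd of all return times.
    for k in range(1, 3 * n + 1):
        current = {j for s in current for j, p in enumerate(P[s]) if p > 0}
        if i in current:
            g = gcd(g, k)
    return g


def is_ergodic(P, i):
    """Finite chains only: recurrent (hence positive recurrent) and aperiodic."""
    return is_recurrent(P, i) and period(P, i) == 1


if __name__ == "__main__":
    for state in range(len(EXAMPLE)):
        print(state, is_recurrent(EXAMPLE, state), period(EXAMPLE, state))
```

In this example, states 0 and 1 are recurrent with period 2, so they are not ergodic. State 2 has period 1 because of its self-loop, but it is transient, so it is not ergodic either. These shortcuts do not carry over to chains with infinitely many states, where recurrence depends on the actual probabilities and not only on which transitions are possible.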

Applications

Markov chains are applied in many fields. They are used in physics to model particle exchanges, in chemistry to model chain reactions, in economics to model stock market prices, and in computer science to model data structures and algorithms, among other uses.