Kubernetes

Introduction

Kubernetes (commonly stylized as K8s) is an open-source container orchestration system for automating application deployment, scaling, and management. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF). It provides a platform for running and operating application containers across clusters of hosts.

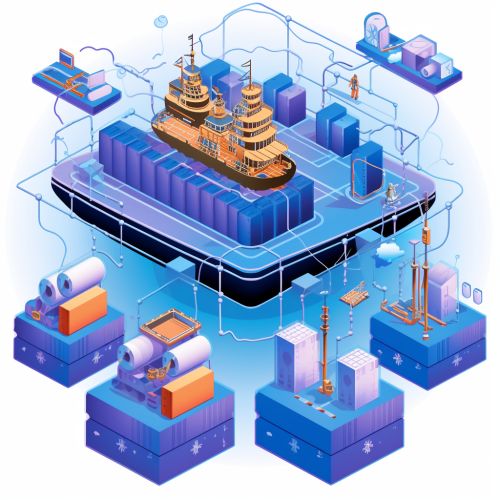

Architecture

Kubernetes follows a client-server architecture. A multi-master setup is possible (for high availability), but by default there is a single master server that acts as the control plane, plus multiple worker nodes that host the applications.

Master Node

The master node is responsible for maintaining the desired state of the cluster, such as which applications or services are running and which nodes they run on. It is the main control unit for the cluster, managing its workload and directing communication across the system. The master node communicates with the worker nodes through the Kubernetes API Server, the front end of the Kubernetes control plane.

The components of a master node include:

- Kubernetes API Server: The front end of the control plane; it exposes the Kubernetes API. It is designed to scale horizontally, that is, by deploying more instances.

- etcd: A consistent and highly available key-value store used as Kubernetes' backing store for all cluster data.

- Scheduler: Distributes work (Pods) across nodes. It watches for newly created Pods that have no assigned node and selects a node for each of them to run on.

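- Controller Manager: A daemon that runs the cluster's core control loops (controllers) in the background. Each controller is a non-terminating loop that watches the shared state of the cluster through the API server and makes changes to move the current state toward the desired state.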
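The control-loop idea behind the Controller Manager can be illustrated with a minimal Python sketch. It assumes a toy cluster model in which desired and observed state are plain dictionaries of replica counts; `ClusterState` and `reconcile` are hypothetical names, not Kubernetes APIs. Each pass compares what should be running with what is running and acts only on the difference; a real controller watches the API server and repeats this indefinitely.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterState:
    """Toy observed state: app name -> number of running replicas (hypothetical model)."""
    running: dict[str, int] = field(default_factory=dict)


def reconcile(desired: dict[str, int], state: ClusterState) -> list[str]:
    """One pass of a control loop: move observed state toward desired state.

    Returns the actions taken. For simplicity, every action is assumed to succeed.
    """
    actions: list[str] = []
    for app, want in desired.items():
        have = state.running.get(app, 0)
        if have < want:
            actions.append(f"start {want - have} x {app}")
        elif have > want:
            actions.append(f"stop {have - want} x {app}")
        state.running[app] = want
    # Apps that are running but no longer desired are stopped entirely.
    for app in [a for a in state.running if a not in desired]:
        actions.append(f"stop {state.running.pop(app)} x {app}")
    return actions


state = ClusterState(running={"web": 1, "old-job": 2})
desired = {"web": 3, "api": 1}
print(reconcile(desired, state))  # ['start 2 x web', 'start 1 x api', 'stop 2 x old-job']
print(reconcile(desired, state))  # [] (already converged)
```

Because each pass looks only at the current gap between desired and observed state, repeating it after convergence is harmless, which is what lets a controller loop without terminating.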

Worker Node

Worker nodes are the servers where the applications actually run. Each worker node runs a Kubelet, an agent that manages the node and communicates with the Kubernetes master. Worker nodes also run a container runtime, such as containerd or CRI-O, and other services needed to support the containers.

The components of a worker node include:

- Kubelet: An agent that runs on each node in the cluster. It makes sure that the containers described in each Pod's specification are running and healthy.

- Kube-proxy: A network proxy that runs on each node in the cluster. It maintains network rules that allow network communication to your Pods from network sessions inside or outside of your cluster.

- Container Runtime: The software responsible for running containers. Kubernetes supports any runtime that implements the Kubernetes CRI (Container Runtime Interface), such as containerd and CRI-O. Docker Engine was supported directly through the built-in dockershim until its removal in Kubernetes 1.24.
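The point of the CRI is that the Kubelet depends on an interface rather than on a specific runtime. The Python sketch below illustrates that idea with a `typing.Protocol`. The real CRI is a gRPC API with a much larger surface; `ContainerRuntime`, `InMemoryRuntime`, and `sync_pod` are hypothetical, heavily simplified stand-ins.

```python
from typing import Protocol


class ContainerRuntime(Protocol):
    """Hypothetical, heavily simplified stand-in for the CRI."""

    def start_container(self, image: str) -> str: ...
    def running_images(self) -> list[str]: ...


class InMemoryRuntime:
    """Toy runtime; `name` only labels which real runtime it pretends to be."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._running: dict[str, str] = {}  # container ID -> image

    def start_container(self, image: str) -> str:
        container_id = f"{self.name}-{len(self._running) + 1}"
        self._running[container_id] = image
        return container_id

    def running_images(self) -> list[str]:
        return sorted(self._running.values())


def sync_pod(runtime: ContainerRuntime, pod_images: list[str]) -> list[str]:
    """Kubelet-style step: start every image in the pod spec that is not yet running.

    Only the interface is used, so any conforming runtime can be plugged in.
    """
    running = set(runtime.running_images())
    return [runtime.start_container(img) for img in pod_images if img not in running]


for rt in (InMemoryRuntime("containerd"), InMemoryRuntime("cri-o")):
    print(sync_pod(rt, ["nginx:1.25", "busybox"]))
# ['containerd-1', 'containerd-2']
# ['cri-o-1', 'cri-o-2']
```

Swapping one runtime for another requires no change to `sync_pod`, which is the property the CRI is meant to provide.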

Key Features

Kubernetes provides a number of key features, including multiple storage APIs, container health checks, manual or automatic scaling, rolling updates, and service discovery. It also provides the primitives needed to deploy and scale complex applications, including stateful and stateless services, batch jobs, and more.

Automatic Bin Packing

Kubernetes automatically places containers on nodes based on their resource requirements (such as CPU and memory) and other constraints, making efficient use of cluster resources without starving workloads or sacrificing availability.
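Placement of this kind can be sketched as two steps: filter out nodes that cannot satisfy a container's resource requests, then score the remaining nodes. The Python sketch below is a toy, not kube-scheduler's code. It scores with a "most allocated" (best-fit) rule to make the bin-packing idea visible; the real scheduler's scoring is configurable and does not necessarily pack this tightly. `Node` and `schedule` are hypothetical names, and the capacities are made-up numbers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    cpu_capacity: int  # millicores
    mem_capacity: int  # MiB
    cpu_requested: int = 0
    mem_requested: int = 0

    def fits(self, cpu: int, mem: int) -> bool:
        return (self.cpu_requested + cpu <= self.cpu_capacity
                and self.mem_requested + mem <= self.mem_capacity)

    def utilization_after(self, cpu: int, mem: int) -> float:
        """Mean fraction of CPU and memory requested if the pod were placed here."""
        return ((self.cpu_requested + cpu) / self.cpu_capacity
                + (self.mem_requested + mem) / self.mem_capacity) / 2


def schedule(cpu: int, mem: int, nodes: list[Node]) -> Optional[str]:
    # Filter: drop nodes that cannot satisfy the pod's resource requests.
    feasible = [n for n in nodes if n.fits(cpu, mem)]
    if not feasible:
        return None  # no node fits; the pod would stay Pending
    # Score: best fit, i.e. prefer the node that ends up most fully used.
    best = max(feasible, key=lambda n: n.utilization_after(cpu, mem))
    best.cpu_requested += cpu
    best.mem_requested += mem
    return best.name


nodes = [Node("node-a", 4000, 8192),
         Node("node-b", 4000, 8192, cpu_requested=3000, mem_requested=6144)]
print(schedule(500, 1024, nodes))   # node-b (tightest fit)
print(schedule(1000, 1024, nodes))  # node-a (node-b has only 500m CPU left)
print(schedule(8000, 1024, nodes))  # None
```

Packing onto the fullest feasible node keeps other nodes free for large requests; a spreading score would instead favor the least-used node.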

Service Discovery and Load Balancing

Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load-balance and distribute the network traffic so that the deployment stays stable.
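The core idea can be sketched in a few lines of Python: clients use a stable name while the set of backing Pod IPs changes, and requests are spread across those backends. The `<service>.<namespace>.svc.cluster.local` pattern follows Kubernetes' DNS naming for Services under the default cluster domain. The `ServiceRegistry` class, the IP addresses, and the round-robin policy are assumptions for illustration; the selection policy kube-proxy actually applies depends on its proxy mode.

```python
from itertools import cycle
from typing import Iterator


class ServiceRegistry:
    """Toy model: a stable Service DNS name in front of changing Pod IPs."""

    def __init__(self) -> None:
        self._backends: dict[str, Iterator[str]] = {}

    def update_endpoints(self, dns_name: str, pod_ips: list[str]) -> None:
        """Replace the backends for a name, e.g. after Pods are rescheduled."""
        if not pod_ips:
            raise ValueError(f"no ready endpoints for {dns_name}")
        self._backends[dns_name] = cycle(pod_ips)

    def pick_backend(self, dns_name: str) -> str:
        """Return the next backend in round-robin order."""
        return next(self._backends[dns_name])


registry = ServiceRegistry()
name = "web.default.svc.cluster.local"
registry.update_endpoints(name, ["10.0.0.11", "10.0.0.12", "10.0.0.13"])
print([registry.pick_backend(name) for _ in range(4)])
# ['10.0.0.11', '10.0.0.12', '10.0.0.13', '10.0.0.11']
```

Clients never see the Pod IPs change; only `update_endpoints` does, which is why a stable name plus an endpoint list is enough to keep traffic flowing across restarts.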

Storage Orchestration

Kubernetes lets you automatically mount a storage system of your choice, such as local storage, storage from public cloud providers, and more.
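In practice, a workload usually requests storage through a PersistentVolumeClaim, which Kubernetes binds to a suitable PersistentVolume (or satisfies by provisioning a volume dynamically through a StorageClass). The Python sketch below models only the matching step. The `Volume` type, the `bind_claim` function, the volume names, and the smallest-volume-that-fits rule are simplifying assumptions, not the actual binding logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Volume:
    name: str
    storage_class: str
    size_gi: int
    bound: bool = False


def bind_claim(storage_class: str, request_gi: int, volumes: list[Volume]) -> Optional[str]:
    """Bind a claim to the smallest unbound volume of the requested class that is big enough."""
    candidates = [v for v in volumes
                  if not v.bound and v.storage_class == storage_class and v.size_gi >= request_gi]
    if not candidates:
        return None  # a real cluster might provision a new volume dynamically here
    best = min(candidates, key=lambda v: v.size_gi)
    best.bound = True
    return best.name


volumes = [Volume("pv-local-100", "local", 100),
           Volume("pv-ssd-50", "cloud-ssd", 50),
           Volume("pv-ssd-20", "cloud-ssd", 20)]
print(bind_claim("cloud-ssd", 10, volumes))  # pv-ssd-20
print(bind_claim("cloud-ssd", 10, volumes))  # pv-ssd-50
print(bind_claim("cloud-ssd", 10, volumes))  # None (no unbound cloud-ssd volume left)
print(bind_claim("local", 200, volumes))     # None (too small)
```

Choosing the smallest volume that fits avoids tying up a large volume for a small claim.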

Self-Healing

Kubernetes can automatically restart or replace containers that fail, reschedule them when their node dies, and kill containers that don't respond to your user-defined health check. It also doesn't advertise containers to clients until they are ready to serve.
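The two health-check behaviors described here, restarting a container that fails its liveness check and withholding traffic from a container that is not ready, can be sketched as a small state machine in Python. `ContainerStatus` and `observe` are hypothetical names. The threshold of 3 consecutive failures mirrors the default `failureThreshold` of Kubernetes probes; the rest of the model (no timing, no restart back-off) is a deliberate simplification.

```python
from dataclasses import dataclass


@dataclass
class ContainerStatus:
    failure_threshold: int = 3  # consecutive liveness failures tolerated
    consecutive_failures: int = 0
    restarts: int = 0
    ready: bool = False  # only ready containers receive Service traffic


def observe(status: ContainerStatus, live_ok: bool, ready_ok: bool) -> None:
    """Apply one round of liveness and readiness probe results."""
    # Readiness only gates traffic; failing it never triggers a restart.
    status.ready = ready_ok
    if live_ok:
        status.consecutive_failures = 0
        return
    status.consecutive_failures += 1
    if status.consecutive_failures >= status.failure_threshold:
        status.restarts += 1  # the container is killed and restarted
        status.consecutive_failures = 0
        status.ready = False  # withheld from clients until readiness passes again


s = ContainerStatus()
for live, ready in [(True, True), (False, True), (False, True), (False, True)]:
    observe(s, live, ready)
print(s.restarts, s.ready)  # 1 False
observe(s, True, True)
print(s.restarts, s.ready)  # 1 True
```

Keeping the two signals separate means a slow-starting container is simply kept out of rotation instead of being killed.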

Secret and Configuration Management

Kubernetes lets you store and manage sensitive information, such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding your container images and without exposing secrets in your stack configuration.
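One concrete detail: in a Kubernetes Secret object, the values under the `data` field are base64-encoded strings. The Python sketch below shows that encoding round trip; `to_secret_data` and `from_secret_data` are hypothetical helper names. Base64 is an encoding, not encryption, so anyone who can read the Secret object can recover the values, and access to Secrets still has to be restricted.

```python
import base64


def to_secret_data(values: dict[str, str]) -> dict[str, str]:
    """Encode plain-text values as base64 strings, the form a Secret's `data` field uses."""
    return {k: base64.b64encode(v.encode("utf-8")).decode("ascii") for k, v in values.items()}


def from_secret_data(data: dict[str, str]) -> dict[str, str]:
    """Decode base64 values back to plain text (no key needed: this is not encryption)."""
    return {k: base64.b64decode(v).decode("utf-8") for k, v in data.items()}


encoded = to_secret_data({"username": "admin"})
print(encoded)                    # {'username': 'YWRtaW4='}
print(from_secret_data(encoded))  # {'username': 'admin'}
```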

Use Cases

Kubernetes can be used in a variety of environments and scenarios. Here are some of the most common use cases:

- Microservices: Kubernetes is often used to deploy, manage, and scale microservices. Because each microservice can be containerized individually, each one can be scaled independently.

- Machine Learning: Kubernetes can be used to manage and scale machine learning workflows, including data collection, model training, and serving predictions.

- Batch Processing: Kubernetes can manage batch and CI workloads, replacing containers that fail, if desired.

- Application Deployment: Kubernetes can help make application deployments predictable, scalable, and automatically managed, reducing overall operational complexity.