History of Computers

Early History

The history of computers begins with simple devices built to aid calculation. The abacus, used since ancient times to perform arithmetic, is considered one of the earliest computing devices. It was widely used in various civilizations, including those of China, Greece, and Rome.

In the 17th century, mechanical calculators marked a significant milestone in the history of computing. The Pascaline, invented by Blaise Pascal in 1642, could perform addition and subtraction. Gottfried Wilhelm Leibniz later improved upon this design with the Stepped Reckoner, which could also perform multiplication and division.

The Birth of Modern Computing

The 19th century saw the conceptualization of the first programmable computer. Charles Babbage, an English mathematician and engineer, designed the Analytical Engine, a mechanical general-purpose computer, in the 1830s. Although the Analytical Engine was never completed, its design included features found in modern computers, such as conditional branching and loops.

The punched card tabulating system, developed by Herman Hollerith for the 1890 U.S. Census, was another significant development. This system used cards with holes punched in them to represent data, a concept that would be used for computer input and data storage for decades to come.

The Electronic Age

The 20th century ushered in the era of electronic computing. The Atanasoff-Berry Computer (ABC), developed by John Vincent Atanasoff and Clifford Berry at Iowa State University in the early 1940s, is often considered the first electronic digital computer, although it was a special-purpose machine and was not programmable.

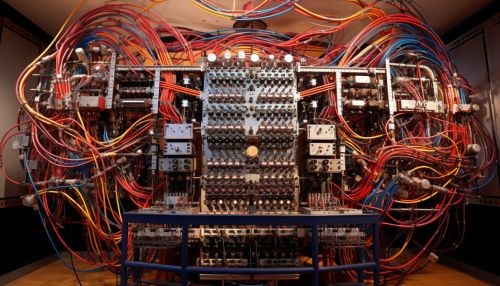

During World War II, the British developed the Colossus, an electronic computer used to break German ciphers. Around the same time, the Americans developed the Electronic Numerical Integrator and Computer (ENIAC), which was designed to compute artillery firing tables for the U.S. Army. Completed in 1945, ENIAC was also used early on for calculations related to the development of the hydrogen bomb.

The Advent of Transistors and Microprocessors

The invention of the transistor in 1947 by William Shockley, John Bardeen, and Walter Brattain at Bell Labs marked a turning point in computer history. Transistors were smaller, faster, and more reliable than the vacuum tubes used in early computers. They paved the way for the development of smaller, more powerful computers.

The microprocessor, a single chip containing a computer's entire central processing unit, was introduced in the early 1970s, with the Intel 4004 released in 1971. This led to the development of personal computers, which revolutionized the way people work, communicate, and entertain themselves.

The Personal Computer Revolution

The 1970s and 1980s saw the rise of personal computers. The Apple II, introduced in 1977, and the IBM Personal Computer, introduced in 1981, were among the first successful personal computers. These machines brought computing into homes and businesses around the world.

The development of user-friendly graphical operating systems, such as Windows and Mac OS, made computers accessible to non-technical users. The spread of the World Wide Web in the 1990s further increased the utility of personal computers.

The Mobile Computing Era

The 21st century has been marked by the rise of mobile computing. Smartphones, tablets, and laptops allow users to access information and perform tasks on the go. The smartphone, in particular, has become ubiquitous, with billions of people around the world using one for communication, entertainment, and work.

The development of cloud computing has also changed the way we use computers. Instead of storing data and running applications on local devices, many tasks are now performed in the cloud, allowing for greater flexibility and scalability.

Future of Computers

The future of computers is likely to be shaped by advances in areas such as quantum computing, artificial intelligence, and machine learning. Quantum computers, which use the principles of quantum mechanics to perform calculations, have the potential to solve problems that are currently beyond the reach of classical computers.

Artificial intelligence (AI) and machine learning are also playing an increasingly important role in computing. These technologies are being used to develop systems that can understand, learn, predict, adapt, and potentially operate autonomously.