Hierarchical clustering

Introduction

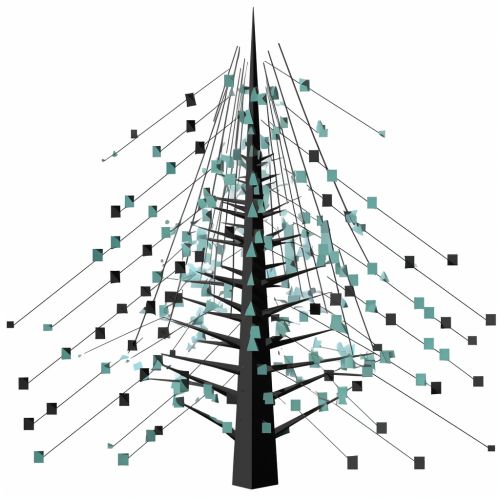

Hierarchical clustering is a method of cluster analysis that seeks to build a hierarchy of clusters. This technique is part of the broader field of data mining, which involves the extraction of useful information from large datasets. The result is a tree of nested clusters, usually drawn as a diagram called a dendrogram, that organizes the data points according to how similar or different they are.

Overview

The most common form of hierarchical clustering, agglomerative clustering, begins by treating each data point as a single cluster. At each step it merges the two most similar clusters. Similarity between individual points is measured with a metric such as Euclidean distance or correlation, and a linkage criterion (for example single, complete, or average linkage) extends that measure from points to whole clusters. This process is repeated until all data points are merged into a single cluster. The result is a tree-like diagram called a dendrogram, which shows the hierarchical relationship between the clusters.
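The merge loop described above can be sketched in plain Python. This is a minimal, unoptimized sketch (roughly cubic time) that assumes Euclidean distance between points and single linkage between clusters, meaning the distance between two clusters is the distance between their closest members. The function names `single_linkage` and `agglomerative` are illustrative, not taken from any library. The function returns the merge history, which is exactly the information a dendrogram draws.

```python
import math
from itertools import combinations

def single_linkage(c1, c2, points):
    # cluster distance = distance between the closest pair of members
    return min(math.dist(points[i], points[j]) for i in c1 for j in c2)

def agglomerative(points):
    """Naive agglomerative clustering.

    Returns a list of (cluster_a, cluster_b, distance) tuples: the two
    clusters (as lists of point indices) merged at each step and the
    linkage distance at which they were merged.
    """
    clusters = [[i] for i in range(len(points))]  # every point starts alone
    merges = []
    while len(clusters) > 1:
        # find the pair of clusters with the smallest linkage distance
        a, b = min(
            combinations(range(len(clusters)), 2),
            key=lambda ab: single_linkage(clusters[ab[0]], clusters[ab[1]], points),
        )
        d = single_linkage(clusters[a], clusters[b], points)
        merges.append((clusters[a], clusters[b], d))
        merged = sorted(clusters[a] + clusters[b])
        # combinations yields a < b, so delete b first to keep index a valid
        del clusters[b]
        del clusters[a]
        clusters.append(merged)
    return merges

print(agglomerative([(0.0,), (1.0,), (5.0,), (7.0,)]))
```

For the one-dimensional points 0, 1, 5 and 7, the sketch first merges the points at 0 and 1 (distance 1), then the points at 5 and 7 (distance 2), and finally joins the two pairs at distance 4. Library implementations, such as SciPy's `scipy.cluster.hierarchy.linkage`, use more efficient algorithms than this exhaustive search over all cluster pairs.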

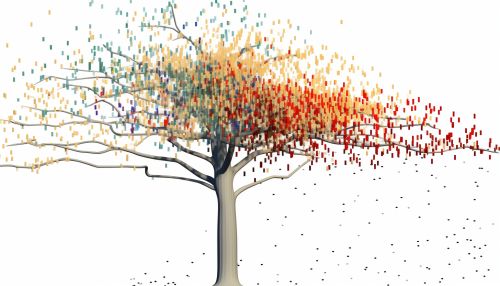

There are two main types of hierarchical clustering: agglomerative and divisive. Agglomerative clustering is a "bottom-up" approach where clustering starts with each element as a separate cluster and merges them into successively larger clusters. On the other hand, divisive clustering is a "top-down" approach where all observations start in one cluster, and splits are performed recursively as one moves down the hierarchy.

Agglomerative Clustering

In agglomerative clustering, each data point starts in its own cluster. These clusters are then combined into larger clusters based on the similarity of their data points. The process continues until all data points are in a single cluster. The result is a dendrogram that shows the sequence of merges and the similarity levels at which they occurred.
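Combining clusters "based on the similarity of their data points" requires a rule for turning point-to-point distances into a cluster-to-cluster distance. That rule is usually called a linkage criterion. The text does not specify one, so the sketch below shows three common choices, assuming Euclidean distance between points; the function names are illustrative.

```python
import math
from statistics import mean

def single_linkage(c1, c2):
    # distance between the closest pair of points, one from each cluster
    return min(math.dist(p, q) for p in c1 for q in c2)

def complete_linkage(c1, c2):
    # distance between the farthest pair of points, one from each cluster
    return max(math.dist(p, q) for p in c1 for q in c2)

def average_linkage(c1, c2):
    # mean of all cross-cluster pairwise distances
    return mean(math.dist(p, q) for p in c1 for q in c2)

a = [(0.0,), (1.0,)]
b = [(3.0,), (6.0,)]
print(single_linkage(a, b), complete_linkage(a, b), average_linkage(a, b))
```

For clusters {0, 1} and {3, 6} on a line, the four cross-cluster distances are 3, 6, 2 and 5, so single linkage gives 2, complete linkage 6, and average linkage 4. Because the criteria disagree, the choice can change the order of merges and therefore the shape of the dendrogram.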

The key choice in agglomerative clustering is the measure of similarity (or distance) between data points. Common measures include:

- Euclidean distance: This is the straight-line distance between two points in a space of any number of dimensions. It is perhaps the most intuitive measure of distance.

- Manhattan distance: This is the sum of the absolute differences between the coordinates of two points. Because the differences are not squared, it is generally less sensitive to outliers than Euclidean distance.

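- Cosine similarity: This measures the cosine of the angle between two vectors, ignoring their lengths. It is often used in text mining, where documents are represented as sparse, high-dimensional term vectors, because two documents with similar word proportions count as similar even if one is much longer than the other.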
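The three measures above can be written directly from their definitions. This is a plain-Python sketch with illustrative function names; `cosine_distance` is an added helper, since clustering code generally works with distances and 1 - cosine similarity is a common way to turn the similarity into one.

```python
import math

def euclidean(p, q):
    # straight-line distance: square root of the summed squared differences
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def manhattan(p, q):
    # sum of absolute coordinate differences
    return sum(abs(a - b) for a, b in zip(p, q))

def cosine_similarity(p, q):
    # cosine of the angle between p and q; vector length cancels out
    dot = sum(a * b for a, b in zip(p, q))
    norm_p = math.sqrt(sum(a * a for a in p))
    norm_q = math.sqrt(sum(b * b for b in q))
    return dot / (norm_p * norm_q)

def cosine_distance(p, q):
    # turn the similarity into a dissimilarity: 0 for identical directions
    return 1.0 - cosine_similarity(p, q)

print(euclidean((0, 0), (3, 4)))  # 5.0
print(manhattan((0, 0), (3, 4)))  # 7
print(cosine_similarity((1, 2), (10, 20)), euclidean((1, 2), (10, 20)))
```

The vectors (1, 2) and (10, 20) point in the same direction, so their cosine similarity is 1 (up to floating-point rounding) even though their Euclidean distance is about 20.1. Two measures can therefore rank the same pair of points very differently.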

Divisive Clustering

Divisive clustering takes the opposite approach to agglomerative clustering. It starts with all data points in a single cluster. The cluster is then split into smaller clusters based on dissimilarity. This process continues until each data point is in its own cluster.
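The text does not name a specific splitting rule, and trying every possible two-way split of a cluster is exponential in its size. The sketch below therefore assumes a simplified version of the DIANA (DIvisive ANAlysis) procedure: repeatedly pick the cluster with the largest diameter, seed a "splinter group" with its most isolated point, and move over any point that is, on average, closer to the splinter group than to the remaining points. Euclidean distance and all function names are assumptions for illustration.

```python
import math
from itertools import combinations

def diameter(cluster, points):
    # largest pairwise distance inside a cluster (needs at least 2 points)
    return max(math.dist(points[i], points[j]) for i, j in combinations(cluster, 2))

def avg_dist(i, group, points):
    # mean distance from point i to every point in a non-empty group
    return sum(math.dist(points[i], points[j]) for j in group) / len(group)

def gain(i, rest, splinter, points):
    # positive when i is closer, on average, to the splinter group than to its own group
    others = [j for j in rest if j != i]
    return avg_dist(i, others, points) - avg_dist(i, splinter, points)

def split(cluster, points):
    # seed the splinter group with the point farthest (on average) from the rest
    seed = max(cluster, key=lambda i: avg_dist(i, [j for j in cluster if j != i], points))
    splinter = [seed]
    rest = [i for i in cluster if i != seed]
    while len(rest) > 1:
        best = max(rest, key=lambda i: gain(i, rest, splinter, points))
        if gain(best, rest, splinter, points) <= 0:
            break  # no remaining point prefers the splinter group
        splinter.append(best)
        rest.remove(best)
    return sorted(splinter), sorted(rest)

def divisive(points):
    """Top-down clustering; returns the splits in the order they were made."""
    clusters = [list(range(len(points)))]  # all points start in one cluster
    splits = []
    while any(len(c) > 1 for c in clusters):
        # split the non-singleton cluster with the largest diameter
        target = max((c for c in clusters if len(c) > 1), key=lambda c: diameter(c, points))
        clusters.remove(target)
        left, right = split(target, points)
        clusters.extend([left, right])
        splits.append((left, right))
    return splits

print(divisive([(0.0,), (1.0,), (10.0,), (11.0,), (12.0,)]))
```

On the one-dimensional points 0, 1, 10, 11 and 12, the first split separates {0, 1} from {10, 11, 12}, and later splits continue until every point is on its own, giving n - 1 splits in total.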

As in agglomerative clustering, the key choice in divisive clustering is the measure of dissimilarity. The same measures can be used in both approaches: distances such as Euclidean and Manhattan distance are already measures of dissimilarity, while a similarity measure such as cosine similarity is first converted into one, for example as 1 - cosine similarity.

Applications

Hierarchical clustering is used in many fields, including biology, medicine, the social sciences, and market research. Specific applications include:

- Genomic data analysis: Hierarchical clustering is used to group genes based on their expression patterns.

- Customer segmentation: Businesses use hierarchical clustering to group customers based on their purchasing behavior.

- Social network analysis: Hierarchical clustering can be used to identify communities within a social network.

Limitations

While hierarchical clustering is a powerful tool, it has some limitations. These include:

- Sensitivity to the choice of similarity measure: The results of hierarchical clustering can vary greatly depending on the choice of similarity measure.

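- Difficulty handling large datasets: Standard agglomerative algorithms keep a matrix of pairwise distances, so for n data points memory grows as n^2 and naive implementations take time proportional to n^3. Divisive clustering is costlier still if every possible split is considered, since a cluster of n points can be divided in two in 2^(n-1) - 1 ways. As a result, hierarchical clustering may not scale well to large datasets.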

- Lack of a global objective function: Unlike some other clustering methods, such as k-means, hierarchical clustering does not optimize a global objective function. Each merge or split is a greedy, local decision that is never revisited. This can make it difficult to assess the quality of the resulting clustering.
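The scaling limitation listed above can be made concrete with a little arithmetic. The sketch below estimates the memory needed just to hold the pairwise distances, assuming each distance is stored as a 64-bit float (8 bytes) and only one triangle of the symmetric matrix is kept; the function name is hypothetical.

```python
def distance_matrix_bytes(n: int, bytes_per_entry: int = 8) -> int:
    """Memory for a condensed (one-triangle) pairwise-distance matrix of n points."""
    # one entry per unordered pair of distinct points: n(n-1)/2
    pairs = n * (n - 1) // 2
    return pairs * bytes_per_entry

for n in (1_000, 10_000, 100_000):
    print(f"{n:>7} points: {distance_matrix_bytes(n) / 1e9:.3f} GB")
```

This gives roughly 4 MB for 1,000 points, 0.4 GB for 10,000 points, and 40 GB for 100,000 points: a tenfold increase in data size costs about a hundredfold increase in memory.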

Conclusion

Hierarchical clustering is a versatile and intuitive method of cluster analysis. While it has some limitations, its ability to generate a hierarchical representation of data makes it a valuable tool in many fields.