Hadoop

Introduction

Hadoop is an open-source software framework for distributed storage and processing of large datasets using the MapReduce programming model. It is a project of the Apache Software Foundation and is written primarily in Java.

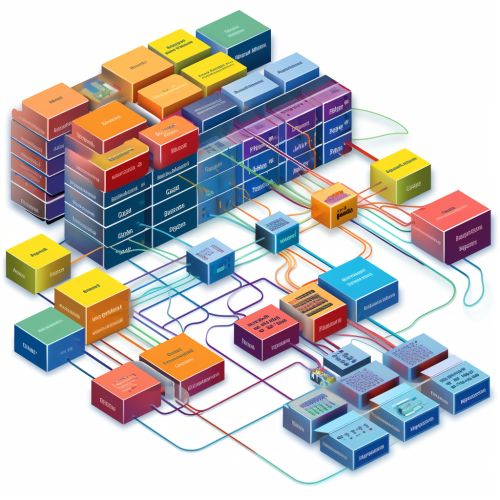

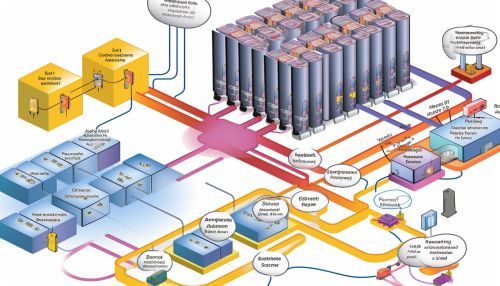

Architecture

Hadoop's architecture is designed to scale from a single server to thousands of machines, each offering local computation and storage. It comprises four modules: Hadoop Common, which provides file system and operating-system-level abstractions; Hadoop YARN, the framework for job scheduling and cluster resource management; the Hadoop Distributed File System (HDFS); and the Hadoop MapReduce implementation.

Hadoop Common

Hadoop Common, also known as Hadoop Core, is the collection of common utilities and libraries that support the other Hadoop modules. It acts as the "glue" of the Hadoop ecosystem and contains the Java Archive (JAR) files and scripts needed to start Hadoop.

Hadoop YARN

Hadoop YARN (Yet Another Resource Negotiator) is the Hadoop component that provides a framework for job scheduling and cluster resource management. It allows multiple data processing engines, such as those for interactive, graph, and batch processing, to work on data stored in a single platform while sharing the same cluster resources.
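The idea of several engines sharing one pool of cluster resources can be illustrated with a toy allocator. This is only a sketch under stated assumptions: the function `allocate`, the node names, and the memory figures are hypothetical, and the first-fit policy stands in for YARN's real schedulers, which are considerably more sophisticated.

```python
def allocate(requests, node_capacity_mb):
    """Grant memory requests first-fit against the free memory of each node.

    requests: list of (application_name, memory_mb) tuples (hypothetical format).
    node_capacity_mb: dict mapping node name to total memory in MB.
    Returns (grants, pending): grants are (application, node, memory_mb) tuples,
    pending holds the requests that no node could satisfy.
    """
    free = dict(node_capacity_mb)  # copy so the caller's dict is not modified
    grants, pending = [], []
    for app, mem in requests:
        # Pick the first node with enough free memory, if any.
        node = next((n for n, f in free.items() if f >= mem), None)
        if node is None:
            pending.append((app, mem))
        else:
            free[node] -= mem
            grants.append((app, node, mem))
    return grants, pending


if __name__ == "__main__":
    # Three different workloads compete for the same two (hypothetical) nodes.
    requests = [("batch", 4096), ("graph", 2048), ("interactive", 4096), ("extra", 1024)]
    grants, pending = allocate(requests, {"node1": 6144, "node2": 4096})
    print(grants)
    print(pending)
```

In this sketch a request that does not fit anywhere is simply left pending; a real resource manager would queue it and retry as containers finish.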

Hadoop Distributed File System (HDFS)

The Hadoop Distributed File System (HDFS) is a distributed file system designed to run on low-cost commodity hardware. It is highly fault-tolerant, provides high-throughput access to application data, and is suited to applications with large datasets.
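A small simulation can show why replicating blocks across machines gives fault tolerance. It is a minimal sketch, not HDFS code: the 128 MiB block size and replication factor of 3 are assumed here as commonly used defaults, the node names are hypothetical, and the round-robin placement is a simplification of HDFS's actual rack-aware placement policy.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size in bytes (128 MiB)
REPLICATION = 3                 # assumed replication factor


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes of the blocks that a file of file_size bytes occupies."""
    full_blocks, remainder = divmod(file_size, block_size)
    return [block_size] * full_blocks + ([remainder] if remainder else [])


def place_replicas(num_blocks, nodes, replication=REPLICATION):
    """Assign each block to `replication` distinct nodes, round-robin (simplified)."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(num_blocks)
    }


def readable_blocks(placement, failed_node):
    """Return the blocks that still have at least one replica after a node fails."""
    return {
        block
        for block, replicas in placement.items()
        if any(node != failed_node for node in replicas)
    }


if __name__ == "__main__":
    blocks = split_into_blocks(300 * 1024 * 1024)  # a 300 MiB file
    placement = place_replicas(len(blocks), ["n1", "n2", "n3", "n4"])
    print(len(blocks), placement)
    print(readable_blocks(placement, failed_node="n3"))
```

Because every block lives on several nodes, losing any single node in this sketch leaves every block readable.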

Hadoop MapReduce

Hadoop MapReduce is a software framework for writing applications that process vast amounts of data (multi-terabyte datasets) in parallel on large clusters (thousands of nodes) of commodity hardware in a reliable, fault-tolerant manner.
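The programming model itself can be sketched in a few lines with the classic word-count example. This runs in a single process and does not use the Hadoop API; the function names `map_phase`, `shuffle`, and `reduce_phase` are illustrative only. In a real cluster the framework would run many mappers and reducers in parallel on different nodes and perform the grouping step between them.

```python
from collections import defaultdict


def map_phase(line):
    """Mapper: emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reducer: combine all values for one key, here by summing the counts."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over an iterable of text lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data big clusters", "data nodes"]))
```

Each mapper call depends only on its own line and each reducer call only on its own key, which is what lets the real framework spread the work across many machines.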

Use Cases

Hadoop is used in many industries and for a variety of applications. These include log and clickstream analysis, marketing analytics, machine learning and data mining, image processing, processing of XML messages, web crawling and text processing, and general archiving, including archiving of relational or tabular data, for example for compliance.

Limitations and Challenges

Despite its benefits, Hadoop has several limitations and challenges. These include data security concerns, a complex programming model, slow processing of small datasets (where job startup and scheduling overhead dominates the run time), and a lack of standards.