Gradient Descent

Introduction

Gradient Descent is a first-order optimization algorithm commonly used in machine learning and artificial intelligence. It uses only first derivatives (the gradient) to iteratively search for a minimum of a differentiable function. In machine learning it is used to update the parameters of a model, such as the coefficients in linear regression or the weights in a neural network, so as to minimize a loss function.

Mathematical Background

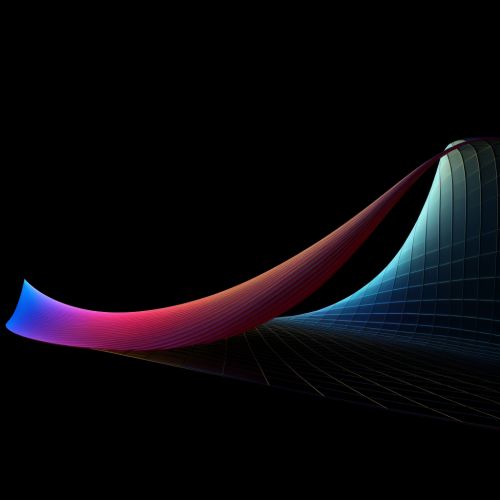

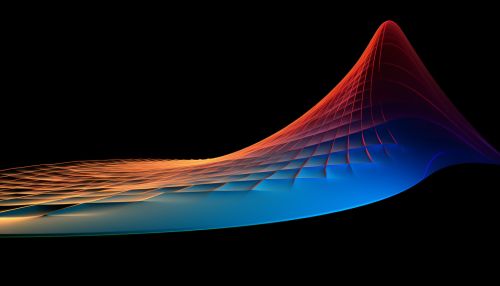

The idea of Gradient Descent is rooted in calculus, where the derivative of a function gives its slope at a given point. In a multivariate setting, the gradient of a function points in the direction of steepest ascent. Repeatedly taking small steps in the opposite direction (the direction of steepest descent) moves the parameters downhill. With a suitably chosen step size this typically leads to a local minimum or another stationary point, which is guaranteed to be the global minimum only when the function is convex.
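As a short worked justification, write the parameters as θ, the loss as L and the learning rate as η (notation introduced here for illustration). The update rule and its first-order Taylor expansion show why stepping against the gradient lowers the loss for a small enough step:

```latex
\begin{aligned}
\theta_{t+1} &= \theta_t - \eta\, \nabla L(\theta_t), \\
L(\theta_{t+1}) &\approx L(\theta_t) + \nabla L(\theta_t)^{\top} (\theta_{t+1} - \theta_t)
               = L(\theta_t) - \eta\, \lVert \nabla L(\theta_t) \rVert^{2}.
\end{aligned}
```

Since η > 0 and the squared norm is positive whenever the gradient is nonzero, the linear approximation predicts a decrease. That approximation only holds for small steps, which is why the size of η matters.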

Algorithm

The Gradient Descent algorithm involves the following steps:

- Initialize the parameters with some values, often randomly.

- Calculate the gradient of the loss function with respect to each parameter.

- Update the parameters in the opposite direction of the gradient, scaled by a learning rate.

- Repeat the gradient computation and the parameter update until the parameters converge (for example, until the updates become negligibly small) or a maximum number of iterations is reached.
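The steps above can be sketched in plain Python. This is a minimal illustration rather than a reference implementation: the function name `gradient_descent`, the tolerance-based stopping rule, the fixed starting point and the example loss are all assumptions chosen for clarity, and the caller supplies the gradient analytically.

```python
from typing import Callable, List

Vector = List[float]


def gradient_descent(
    grad: Callable[[Vector], Vector],
    init: Vector,
    learning_rate: float = 0.1,
    tol: float = 1e-8,
    max_iters: int = 10_000,
) -> Vector:
    """Step against the gradient until every component of the update is
    smaller than `tol`, or until `max_iters` iterations have run."""
    params = list(init)  # initialize (a fixed start here; often random in practice)
    for _ in range(max_iters):
        g = grad(params)  # gradient of the loss at the current parameters
        step = [learning_rate * gi for gi in g]  # scale by the learning rate
        params = [p - s for p, s in zip(params, step)]  # move against the gradient
        if max(abs(s) for s in step) < tol:  # converged: updates are negligible
            break
    return params


# Hypothetical example loss: L(x, y) = (x - 3)^2 + 2 * (y + 1)^2, minimized at (3, -1).
def example_grad(p: Vector) -> Vector:
    x, y = p
    return [2 * (x - 3), 4 * (y + 1)]


result = gradient_descent(example_grad, init=[0.0, 0.0])
print(result)  # approximately [3.0, -1.0]
```

Here the learning rate of 0.1 shrinks the error in x by a factor of 0.8 and in y by 0.6 on every iteration, so the loop stops well before `max_iters`.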

The learning rate is a hyperparameter that determines the step size at each iteration while moving towards the minimum of the function. A learning rate that is too small slows convergence, while one that is too large can overshoot the minimum and cause the algorithm to diverge.
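A tiny experiment makes this trade-off concrete. As an illustrative assumption, take f(x) = x^2, whose gradient is 2x, so each update multiplies x by (1 - 2 * learning_rate); the helper name `run` and the chosen rates are hypothetical.

```python
def run(learning_rate: float, x0: float = 1.0, steps: int = 20) -> float:
    """Apply gradient descent to f(x) = x**2 (gradient 2x) and return the final x."""
    x = x0
    for _ in range(steps):
        x -= learning_rate * 2 * x  # equivalent to x = (1 - 2 * learning_rate) * x
    return x


for lr in (0.01, 0.1, 0.9, 1.1):
    print(f"lr={lr:<5} final x = {run(lr):.3e}")
```

With these settings, 0.01 is still far from the minimum at x = 0 after 20 steps, 0.1 approaches it steadily, 0.9 overshoots on every step (the factor is -0.8) but still converges, and 1.1 diverges because each step multiplies x by -1.2.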

Types of Gradient Descent

There are three main types of Gradient Descent, each with its own advantages and disadvantages:

- Batch Gradient Descent: The entire training set is used to compute the gradient at each step. This gives the exact gradient of the training loss and therefore stable updates, but each step can be computationally expensive for large datasets.

- Stochastic Gradient Descent: The gradient is estimated from a single training example at each step. Each update is much cheaper, and the noise in the updates can help the algorithm escape shallow local minima, but the gradient estimates have high variance, so the path toward the minimum is erratic.

- Mini-Batch Gradient Descent: A compromise between Batch and Stochastic Gradient Descent in which the gradient is computed on a small batch of training examples. This balances computational efficiency against gradient accuracy, and each batch can be processed with vectorized operations.
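The three variants differ only in how many examples feed each gradient estimate, so one function with a batch-size parameter can illustrate all of them. The sketch below fits a hypothetical linear model y = w * x + b by minimizing mean squared error; the function name `minibatch_gd`, the synthetic data and the hyperparameter values are assumptions for illustration.

```python
import random
from typing import List, Tuple


def minibatch_gd(
    data: List[Tuple[float, float]],
    batch_size: int,
    learning_rate: float = 0.05,
    epochs: int = 200,
    seed: int = 0,
) -> Tuple[float, float]:
    """Fit y ~ w * x + b by minimizing mean squared error.

    batch_size == len(data) -> batch gradient descent
    batch_size == 1         -> stochastic gradient descent
    anything in between     -> mini-batch gradient descent
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the examples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = [data[i] for i in indices[start:start + batch_size]]
            # Gradient of the mean squared error computed on this batch only.
            grad_w = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            grad_b = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b


# Hypothetical noise-free data drawn from y = 2x + 1 on [-1, 1].
data = [(k / 10, 2 * (k / 10) + 1) for k in range(-10, 11)]
for size in (len(data), 1, 4):
    w, b = minibatch_gd(data, batch_size=size)
    print(f"batch_size={size:>2}: w={w:.3f}, b={b:.3f}")
```

Because the data here are noise-free, all three settings recover values close to w = 2 and b = 1. With noisy data, a constant learning rate would leave the stochastic variant fluctuating around the minimum instead of settling on it, which is one motivation for the learning rate schedules discussed below.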

Convergence and Learning Rate

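The convergence of the Gradient Descent algorithm depends on the choice of the learning rate and on the properties of the loss function. If the learning rate is too high, the algorithm can overshoot the minimum and diverge; if it is too low, convergence can be very slow. For example, if the gradient of the loss is Lipschitz continuous with constant K, any fixed learning rate below 2/K guarantees that no step increases the loss. On non-convex loss functions, the algorithm may also settle in a local minimum rather than the global one.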
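A one-dimensional worked example shows how the learning rate alone can decide between convergence and divergence. Assume, purely for illustration, the quadratic loss L(θ) = (a/2)θ² with curvature a > 0, whose gradient is aθ and whose minimum is at θ = 0:

```latex
\begin{aligned}
\theta_{t+1} &= \theta_t - \eta\, a\, \theta_t = (1 - \eta a)\, \theta_t
  \quad\Longrightarrow\quad \theta_t = (1 - \eta a)^{t}\, \theta_0, \\
\lvert 1 - \eta a \rvert < 1 \;&\Longleftrightarrow\; 0 < \eta < \frac{2}{a}.
\end{aligned}
```

So the iterates move monotonically toward 0 when 0 < η < 1/a (reaching it in a single step when η = 1/a), overshoot and alternate in sign but still converge when 1/a < η < 2/a, and grow without bound when η > 2/a.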

In practice, the learning rate is often chosen with a line search, which selects a suitable step size at each iteration, or with a learning rate schedule, which gradually decreases it over time. Schedules are common in machine learning applications, where the learning rate is typically reduced at fixed points during training, for example after each epoch (a complete pass through the training data).
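Both approaches can be sketched briefly. The step-decay schedule and the backtracking line search with the Armijo sufficient-decrease condition shown here are illustrative choices among several possibilities; the function names and default constants (`drop`, `every`, `beta`, `c`, `max_backtracks`) are assumptions, and the example loss is hypothetical.

```python
from typing import Callable, List

Vector = List[float]


def step_decay(initial_lr: float, epoch: int, drop: float = 0.5, every: int = 10) -> float:
    """Step-decay schedule: multiply the learning rate by `drop` every `every` epochs."""
    return initial_lr * drop ** (epoch // every)


def backtracking_step(
    f: Callable[[Vector], float],
    grad: Callable[[Vector], Vector],
    x: Vector,
    lr0: float = 1.0,
    beta: float = 0.5,
    c: float = 1e-4,
    max_backtracks: int = 50,
) -> Vector:
    """Take one gradient step, shrinking the learning rate by `beta` until the
    Armijo condition f(x - lr*g) <= f(x) - c * lr * ||g||^2 holds."""
    g = grad(x)
    g_norm_sq = sum(gi * gi for gi in g)
    fx = f(x)
    lr = lr0
    for _ in range(max_backtracks):
        candidate = [xi - lr * gi for xi, gi in zip(x, g)]
        if f(candidate) <= fx - c * lr * g_norm_sq:  # sufficient decrease reached
            return candidate
        lr *= beta  # step too large: shrink it and try again
    return x  # no acceptable step found; leave the parameters unchanged


# Hypothetical loss L(x, y) = (x - 3)^2 + 2 * (y + 1)^2, minimized at (3, -1).
def loss(p: Vector) -> float:
    return (p[0] - 3) ** 2 + 2 * (p[1] + 1) ** 2


def loss_grad(p: Vector) -> Vector:
    return [2 * (p[0] - 3), 4 * (p[1] + 1)]


x = [0.0, 0.0]
for _ in range(20):
    x = backtracking_step(loss, loss_grad, x)
print(x)  # [3.0, -1.0] for this quadratic

print([step_decay(0.1, epoch) for epoch in (0, 9, 10, 25)])  # [0.1, 0.1, 0.05, 0.025]
```

Setting `every=1` in `step_decay` reduces the rate after every epoch. The line search trades extra loss evaluations per step for not having to tune a fixed learning rate by hand.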

Applications

Gradient Descent is widely used in machine learning and artificial intelligence for training models such as linear regression, logistic regression, and neural networks. It is also used in other optimization problems, such as portfolio optimization in finance and image reconstruction in computer vision.