Bayesian networks

Introduction

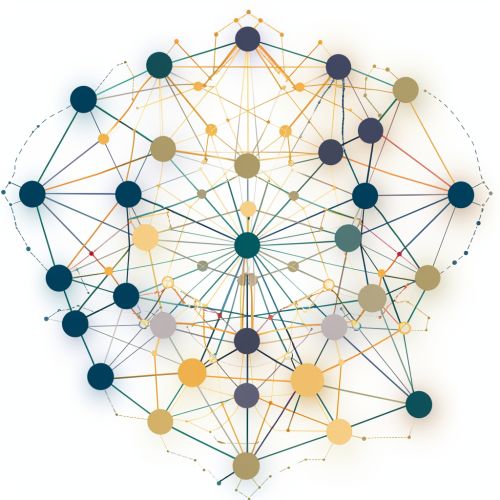

A Bayesian network, also known as a belief network, Bayes network, or Bayes(ian) model, is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). Bayesian networks are well suited to taking an observed event and estimating the probability that each of several known possible causes contributed to it. They are widely used in machine learning, artificial intelligence, statistics, and bioinformatics.

History

Bayesian networks are named after the Reverend Thomas Bayes (1701–1761), who provided the first mathematical treatment of a non-trivial problem of statistical data analysis using what is now known as Bayesian inference. They were later formally defined by Pearl (1985) and Neapolitan (1989). They have since been applied to a wide range of practical problems, including medical diagnosis, image recognition, speech recognition, natural language processing, and bioinformatics.

Mathematical Definition

A Bayesian network is a directed acyclic graph whose nodes represent a set of random variables. Nodes can be any type of variable: observable quantities, latent variables, unknown parameters, or hypotheses. Edges represent direct conditional dependencies, and the absence of an edge encodes a conditional independence: each variable is conditionally independent of its non-descendants given its parents. Each node is associated with a probability function that takes as input a particular set of values for the node's parent variables and gives as output the probability (or probability distribution) of the variable represented by the node.
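A consequence of this definition is that the joint distribution factorizes into one term per node: P(X1, ..., Xn) is the product over i of P(Xi | parents(Xi)). The following is a minimal sketch of that factorization, assuming a hypothetical three-node network (Rain, Sprinkler, WetGrass) with Boolean variables and made-up probabilities; the names `parents`, `cpt`, and `joint_probability` are illustrative and do not come from any library.

```python
from itertools import product

# Hypothetical network: Rain -> Sprinkler, Rain -> WetGrass, Sprinkler -> WetGrass.
# Each node maps to the tuple of its parents; insertion order is a topological order.
parents = {
    "Rain": (),
    "Sprinkler": ("Rain",),
    "WetGrass": ("Sprinkler", "Rain"),
}

# cpt[node][parent_values] = P(node = True | parents = parent_values).
# All numbers are invented for illustration.
cpt = {
    "Rain": {(): 0.2},
    "Sprinkler": {(True,): 0.01, (False,): 0.4},
    "WetGrass": {
        (True, True): 0.99,
        (True, False): 0.9,
        (False, True): 0.8,
        (False, False): 0.0,
    },
}


def joint_probability(assignment):
    """Multiply one conditional probability per node, as in the factorization."""
    p = 1.0
    for node, node_parents in parents.items():
        p_true = cpt[node][tuple(assignment[q] for q in node_parents)]
        p *= p_true if assignment[node] else 1.0 - p_true
    return p


# Sanity check: the joint distribution sums to 1 over all 2^3 assignments.
total = sum(
    joint_probability(dict(zip(parents, values)))
    for values in product((True, False), repeat=len(parents))
)
print(f"sum over all assignments = {total:.6f}")
```

Storing one table per node is what keeps the representation compact: this network needs 1 + 2 + 4 = 7 numbers, the same as the 2^3 - 1 = 7 free parameters of an unrestricted joint table over three Boolean variables, but the gap widens quickly once larger networks omit edges.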

Inference

Inference in Bayesian networks means computing the posterior probabilities of the unobserved variables given the observed ones. Exact methods include variable elimination and the junction tree algorithm. Because exact inference is NP-hard in general, large networks are often handled with approximate methods based on stochastic simulation, such as Markov chain Monte Carlo (MCMC).
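The simplest exact method, not named above, is inference by enumeration: sum the joint probability over every hidden variable and normalize. The sketch below illustrates it under stated assumptions. The three-node network, its probabilities, and the helper names `joint_probability` and `query` are all hypothetical. Variable elimination and the junction tree algorithm compute the same quantity more efficiently by reusing intermediate sums.

```python
from itertools import product

# Hypothetical network with invented probabilities:
# Rain -> Sprinkler, Rain -> WetGrass, Sprinkler -> WetGrass.
parents = {
    "Rain": (),
    "Sprinkler": ("Rain",),
    "WetGrass": ("Sprinkler", "Rain"),
}
# cpt[node][parent_values] = P(node = True | parents = parent_values)
cpt = {
    "Rain": {(): 0.2},
    "Sprinkler": {(True,): 0.01, (False,): 0.4},
    "WetGrass": {
        (True, True): 0.99,
        (True, False): 0.9,
        (False, True): 0.8,
        (False, False): 0.0,
    },
}


def joint_probability(assignment):
    """Product of P(node | parents) over all nodes for a full assignment."""
    p = 1.0
    for node, node_parents in parents.items():
        p_true = cpt[node][tuple(assignment[q] for q in node_parents)]
        p *= p_true if assignment[node] else 1.0 - p_true
    return p


def query(target, evidence):
    """Return P(target = True | evidence) by enumerating the hidden variables.

    Assumes the evidence has non-zero probability; otherwise the
    normalization below divides by zero.
    """
    hidden = [n for n in parents if n != target and n not in evidence]
    weights = {True: 0.0, False: 0.0}
    for target_value in (True, False):
        for values in product((True, False), repeat=len(hidden)):
            assignment = {**evidence, target: target_value, **dict(zip(hidden, values))}
            weights[target_value] += joint_probability(assignment)
    return weights[True] / (weights[True] + weights[False])


posterior = query("Rain", {"WetGrass": True})
print(f"P(Rain | WetGrass) = {posterior:.3f}")  # about 0.358 with these numbers
```

Enumeration visits every combination of hidden values, so its cost grows exponentially with the number of hidden variables; it is useful mainly as a reference implementation for small networks.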

Learning

Learning in Bayesian networks can be divided into two main tasks: parameter learning and structure learning. Parameter learning estimates the parameters of the network (i.e., the conditional probability distributions) given a fixed structure. With fully observed data this can be done by maximum likelihood estimation; when the data contain missing values or latent variables, the Expectation-Maximization (EM) algorithm is commonly used. Structure learning infers the graph itself from a set of data, for example with the K2 algorithm.
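For parameter learning with a fixed structure and fully observed data, the maximum-likelihood estimate of each conditional probability is a relative frequency. The sketch below shows that counting approach; it is not EM, which is needed only when some values are missing. The two-node network Rain -> WetGrass, the records in `data`, and the function name `estimate_cpt` are assumptions made for illustration.

```python
from collections import Counter

# Hypothetical, fully observed records for the network Rain -> WetGrass.
data = [
    {"Rain": True, "WetGrass": True},
    {"Rain": True, "WetGrass": True},
    {"Rain": True, "WetGrass": False},
    {"Rain": False, "WetGrass": False},
    {"Rain": False, "WetGrass": False},
    {"Rain": False, "WetGrass": True},
    {"Rain": False, "WetGrass": False},
    {"Rain": False, "WetGrass": False},
]


def estimate_cpt(records, node, node_parents):
    """Estimate P(node = True | parents) as count(node, parents) / count(parents)."""
    parent_counts = Counter()
    true_counts = Counter()
    for row in records:
        key = tuple(row[p] for p in node_parents)
        parent_counts[key] += 1
        if row[node]:
            true_counts[key] += 1
    # Parent configurations never seen in the data get no entry at all.
    return {key: true_counts[key] / n for key, n in parent_counts.items()}


p_rain = estimate_cpt(data, "Rain", ())
p_wet = estimate_cpt(data, "WetGrass", ("Rain",))
print(p_rain)  # {(): 0.375}
print(p_wet)
```

Plain counting assigns probability 0 or 1 to outcomes seen rarely in small samples, and it says nothing about parent configurations that never occur; in practice a prior (for example, adding pseudo-counts) is often used to soften both problems.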

Applications

Bayesian networks are used across machine learning, artificial intelligence, statistics, bioinformatics, and medical diagnosis, and are particularly useful wherever one needs to reason under uncertainty. In medicine, for example, a Bayesian network can be used to compute the probability of a disease given a set of observed symptoms.
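The medical example can be made concrete with a small sketch. It assumes a hypothetical network in which a single Disease node is the only parent of two symptom nodes, Fever and Cough, so the symptoms are conditionally independent given the disease. Every number below is invented for illustration and is not a clinical estimate, and `disease_posterior` is an illustrative name, not a library function.

```python
# Hypothetical network: Disease -> Fever, Disease -> Cough.
# All probabilities are invented; they are not clinical estimates.
P_DISEASE = 0.01  # prior P(Disease = True)

# P_SYMPTOM_GIVEN[symptom][disease_value] = P(symptom = True | Disease = disease_value)
P_SYMPTOM_GIVEN = {
    "Fever": {True: 0.8, False: 0.1},
    "Cough": {True: 0.7, False: 0.2},
}


def disease_posterior(observed):
    """Return P(Disease = True | observed symptoms) via Bayes' rule.

    `observed` maps symptom names to True (present) or False (absent);
    symptoms that are not listed are treated as unobserved.
    """
    weights = {}
    for disease in (True, False):
        w = P_DISEASE if disease else 1.0 - P_DISEASE
        for symptom, present in observed.items():
            p = P_SYMPTOM_GIVEN[symptom][disease]
            w *= p if present else 1.0 - p
        weights[disease] = w
    return weights[True] / (weights[True] + weights[False])


posterior = disease_posterior({"Fever": True, "Cough": True})
print(f"P(Disease | fever, cough) = {posterior:.3f}")  # roughly 0.22 with these numbers
```

Even with both symptoms present, the posterior stays well below one half because the assumed prior is low; making that interaction between prior and evidence explicit is the main benefit of the network representation.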

Limitations

Despite their wide range of applications, Bayesian networks have some limitations. They assume that each variable is conditionally independent of its non-descendants given its parents in the graph, which may not hold in real-world applications. Learning the network structure and parameters accurately also requires a large amount of data, which may not always be available.