Activation Function: Difference between revisions

No edit summary |

No edit summary |

||

| Line 3: | Line 3: | ||

An activation function is a crucial component in artificial neural networks, particularly in the context of [[deep learning]] and [[machine learning]]. Its primary role is to introduce non-linearity into the network, enabling it to learn and model complex patterns in the data. This article delves into the various types of activation functions, their mathematical formulations, properties, and applications in neural networks. | An activation function is a crucial component in artificial neural networks, particularly in the context of [[deep learning]] and [[machine learning]]. Its primary role is to introduce non-linearity into the network, enabling it to learn and model complex patterns in the data. This article delves into the various types of activation functions, their mathematical formulations, properties, and applications in neural networks. | ||

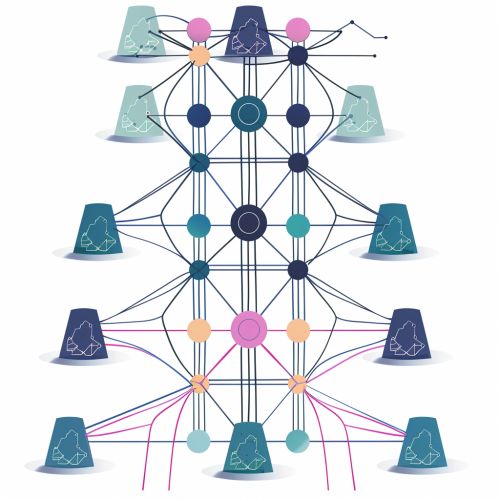

[[Image:Detail-79295.jpg|thumb|center|A neural network diagram showing nodes and connections, with activation functions applied at each node.]] | [[Image:Detail-79295.jpg|thumb|center|A neural network diagram showing nodes and connections, with activation functions applied at each node.|class=only_on_mobile]] | ||

[[Image:Detail-79296.jpg|thumb|center|A neural network diagram showing nodes and connections, with activation functions applied at each node.|class=only_on_desktop]] | |||

== Types of Activation Functions == | == Types of Activation Functions == | ||

Latest revision as of 23:21, 17 May 2024

Activation Function

An activation function is a crucial component in artificial neural networks, particularly in the context of deep learning and machine learning. Its primary role is to introduce non-linearity into the network, enabling it to learn and model complex patterns in the data. This article delves into the various types of activation functions, their mathematical formulations, properties, and applications in neural networks.

Types of Activation Functions

Activation functions can be broadly categorized into several types based on their characteristics and applications. The most commonly used activation functions include:

Linear Activation Function

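The linear (identity) activation function is defined as: \[ f(x) = x \] While simple, it is rarely used in hidden layers because it does not introduce non-linearity: a stack of layers with linear activations is equivalent to a single linear layer, so such networks are limited in their ability to model complex data. It is, however, common in the output layer of regression models, where the prediction should be an unbounded real number.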

Sigmoid Activation Function

The sigmoid activation function is given by: \[ f(x) = \frac{1}{1 + e^{-x}} \] This function maps any real input to the interval (0, 1), which makes it useful in the output layer for binary classification, where the output can be read as a probability. However, it suffers from the vanishing gradient problem: its derivative \( f'(x) = f(x)\,(1 - f(x)) \) is at most 0.25 (at \(x = 0\)) and becomes very small for large positive or negative inputs, slowing down the learning process.
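The sigmoid and its derivative can be sketched in a few lines of Python. This is an illustrative implementation, not taken from any particular library; it branches on the sign of `x` so that `math.exp` is never called on a large positive number, and it shows how small the gradient gets away from the origin.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid, computed so that exp() never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, use the equivalent form e^x / (1 + e^x).
    z = math.exp(x)
    return z / (1.0 + z)

def sigmoid_grad(x: float) -> float:
    """Derivative sigma(x) * (1 - sigma(x)); its maximum is 0.25 at x = 0."""
    s = sigmoid(x)
    return s * (1.0 - s)

for x in (-10.0, 0.0, 10.0):
    print(f"x={x:+.0f}  sigmoid={sigmoid(x):.6f}  grad={sigmoid_grad(x):.2e}")
```

At \(x = \pm 10\) the gradient is already several thousand times smaller than its peak, which is the saturation effect described above.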

Hyperbolic Tangent (Tanh) Activation Function

The tanh activation function is defined as: \[ f(x) = \tanh(x) = \frac{e^x - e^{-x}}{e^x + e^{-x}} \] This function maps input values to a range between -1 and 1. It is zero-centered, which can lead to faster convergence compared to the sigmoid function. However, its derivative \( f'(x) = 1 - \tanh^2(x) \) also approaches zero for large \(|x|\), so it suffers from the vanishing gradient problem as well.
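A small sketch of the relationship between tanh and the sigmoid: tanh is a rescaled, zero-centered sigmoid, \(\tanh(x) = 2\,\text{sigmoid}(2x) - 1\). The helper names below are illustrative; only `math.tanh` comes from the standard library.

```python
import math

def sigmoid(x: float) -> float:
    # Plain form; adequate for the moderate inputs used here.
    return 1.0 / (1.0 + math.exp(-x))

def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2. Equals 1 at x = 0 and decays toward 0 as |x| grows."""
    t = math.tanh(x)
    return 1.0 - t * t

for x in (-3.0, 0.0, 0.5, 3.0):
    # tanh expressed through the sigmoid: tanh(x) = 2 * sigmoid(2x) - 1.
    via_sigmoid = 2.0 * sigmoid(2.0 * x) - 1.0
    print(f"x={x:+.1f}  tanh={math.tanh(x):+.4f}  via sigmoid={via_sigmoid:+.4f}  grad={tanh_grad(x):.4f}")
```

The peak gradient of tanh is 1 rather than 0.25, but it still shrinks toward zero in the saturation regions.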

Rectified Linear Unit (ReLU)

The ReLU activation function is given by: \[ f(x) = \max(0, x) \] ReLU is widely used in modern neural networks due to its simplicity and effectiveness. Its gradient is exactly 1 for all positive inputs, so it does not saturate there and largely avoids the vanishing gradient problem that affects sigmoid and tanh. However, its gradient is 0 for negative inputs, which leads to the "dying ReLU" problem: a neuron whose input is negative for every example always outputs zero, receives no gradient, and stops learning.
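A minimal sketch of ReLU and its gradient. ReLU has no derivative at exactly 0; this sketch assumes the common convention of using 0 there.

```python
def relu(x: float) -> float:
    """ReLU: passes positive inputs through unchanged and clamps negatives to 0."""
    return max(0.0, x)

def relu_grad(x: float) -> float:
    """Gradient of ReLU: 1 for x > 0, 0 for x < 0.
    At x = 0 the function is not differentiable; this sketch uses 0 there."""
    return 1.0 if x > 0 else 0.0

inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
print([relu(x) for x in inputs])
print([relu_grad(x) for x in inputs])
```

The gradient is either exactly 1 or exactly 0, which is why ReLU avoids saturation for positive inputs but can "die" for negative ones.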

Leaky ReLU

Leaky ReLU addresses the dying ReLU problem by allowing a small, non-zero gradient for negative inputs: \[ f(x) = \begin{cases} x & \text{if } x \geq 0 \\ \alpha x & \text{if } x < 0 \end{cases} \] where \(\alpha\) is a small constant, typically 0.01.
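A sketch of Leaky ReLU and its gradient, assuming the typical \(\alpha = 0.01\) mentioned above as the default.

```python
def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: identity for x >= 0, slope alpha for x < 0."""
    return x if x >= 0 else alpha * x

def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Gradient: 1 for x >= 0 and alpha (not 0) for x < 0."""
    return 1.0 if x >= 0 else alpha

for x in (-100.0, -1.0, 0.0, 2.0):
    print(f"x={x:+.1f}  f={leaky_relu(x):+.3f}  grad={leaky_relu_grad(x):.2f}")
```

Compared with ReLU, the only change is that negative inputs keep a small, non-zero gradient.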

Parametric ReLU (PReLU)

PReLU is a variant of ReLU where the slope of the negative part is learned during training: \[ f(x) = \begin{cases} x & \text{if } x \geq 0 \\ \alpha x & \text{if } x < 0 \end{cases} \] Unlike Leaky ReLU, \(\alpha\) is a learnable parameter.
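Because \(\alpha\) is learned, it needs its own gradient: \(\partial f / \partial \alpha = x\) for \(x < 0\) and 0 otherwise. The sketch below assumes a single \(\alpha\) shared by all inputs and one plain gradient-descent step; the starting value, learning rate, inputs, and upstream gradients are hypothetical.

```python
def prelu(x: float, alpha: float) -> float:
    """PReLU: identity for x >= 0, alpha * x for x < 0, with alpha learned."""
    return x if x >= 0 else alpha * x

def prelu_grad_alpha(x: float) -> float:
    """Partial derivative of prelu(x, alpha) with respect to alpha: x for x < 0, else 0."""
    return x if x < 0 else 0.0

def sgd_step_alpha(alpha: float, xs: list[float], upstream: list[float], lr: float = 0.1) -> float:
    """One plain gradient-descent update of a single alpha shared by all inputs.

    upstream[i] plays the role of dLoss/dOutput for xs[i]; by the chain rule,
    dLoss/dalpha = sum_i upstream[i] * prelu_grad_alpha(xs[i]).
    """
    grad = sum(g * prelu_grad_alpha(x) for x, g in zip(xs, upstream))
    return alpha - lr * grad

alpha = 0.25                      # illustrative starting value
xs = [-2.0, 1.0, -0.5]            # hypothetical pre-activations
upstream = [0.5, 1.0, -1.0]       # hypothetical gradients from the next layer
print([prelu(x, alpha) for x in xs])
alpha = sgd_step_alpha(alpha, xs, upstream)
print(alpha)
```

Only the negative inputs contribute to the update of \(\alpha\); positive inputs pass through the identity branch, which does not depend on \(\alpha\).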

Exponential Linear Unit (ELU)

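The ELU activation function is defined as: \[ f(x) = \begin{cases} x & \text{if } x \geq 0 \\ \alpha (e^x - 1) & \text{if } x < 0 \end{cases} \] where \(\alpha\) is a positive constant. ELU aims to combine the benefits of ReLU with a smooth negative part: for negative inputs it curves toward \(-\alpha\) instead of being clamped at zero, so those inputs still receive a non-zero gradient and the mean activation is pushed closer to zero.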
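A sketch of ELU and its derivative, assuming \(\alpha = 1\) as the default. It uses `math.expm1`, which computes \(e^x - 1\) accurately for small \(|x|\).

```python
import math

def elu(x: float, alpha: float = 1.0) -> float:
    """ELU: identity for x >= 0, alpha * (e^x - 1) for x < 0 (saturates at -alpha)."""
    return x if x >= 0 else alpha * math.expm1(x)

def elu_grad(x: float, alpha: float = 1.0) -> float:
    """Derivative: 1 for x >= 0, alpha * e^x for x < 0 (equal to elu(x) + alpha there)."""
    return 1.0 if x >= 0 else alpha * math.exp(x)

for x in (-3.0, -1.0, 0.0, 2.0):
    print(f"x={x:+.1f}  elu={elu(x):+.4f}  grad={elu_grad(x):.4f}")
```

With \(\alpha = 1\) the left and right derivatives both equal 1 at the origin, so the function is smooth there; for other values of \(\alpha\) the derivative jumps at 0.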

Softmax Function

The softmax function is used in the output layer of neural networks for multi-class classification: \[ f(x_i) = \frac{e^{x_i}}{\sum_{j} e^{x_j}} \] It converts raw scores (logits) into probabilities, ensuring that the sum of the output probabilities is 1.
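Computed naively, \(e^{x_i}\) overflows for large logits. A common remedy, sketched below, subtracts the largest logit first; this leaves the result unchanged because the factor \(e^{-\max_j x_j}\) cancels between numerator and denominator.

```python
import math

def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax over a vector of logits."""
    m = max(logits)
    # Shifting by the max keeps every exponent <= 0, so exp() cannot overflow.
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.0, 1.0, 0.1])
print([round(p, 3) for p in probs])
print(sum(probs))
```

Unlike the other functions in this section, softmax acts on the whole vector of scores rather than element by element.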

Mathematical Properties

Activation functions possess several important mathematical properties that influence their performance and suitability for different tasks:

Differentiability

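Most activation functions are differentiable, or differentiable almost everywhere (ReLU, for example, has no derivative at \(x = 0\), where implementations use a fixed value, typically 0). This allows gradients to be computed by backpropagation and used by gradient-based optimizers such as stochastic gradient descent, which is crucial for training neural networks.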

Non-linearity

Non-linear activation functions enable neural networks to approximate complex functions and model intricate patterns in the data. Without non-linearity, a neural network would be equivalent to a single linear (affine) transformation no matter how many layers it has, limiting its expressive power.
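The collapse can be written out for two layers whose activation is the identity; the weight matrices \(W_1, W_2\) and bias vectors \(\mathbf{b}_1, \mathbf{b}_2\) are notation introduced here for illustration:

\[ \mathbf{y} = W_2 (W_1 \mathbf{x} + \mathbf{b}_1) + \mathbf{b}_2 = (W_2 W_1)\,\mathbf{x} + (W_2 \mathbf{b}_1 + \mathbf{b}_2) = W \mathbf{x} + \mathbf{b} \]

with \(W = W_2 W_1\) and \(\mathbf{b} = W_2 \mathbf{b}_1 + \mathbf{b}_2\). Applying the same step repeatedly shows that any number of such layers reduces to a single affine map.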

Computational Efficiency

Activation functions should be computationally efficient to evaluate, as they are applied to every neuron in the network. Functions like ReLU are preferred for their simplicity and speed.

Saturation Regions

Some activation functions, like sigmoid and tanh, have saturation regions where the gradient approaches zero. This can slow down learning, especially in deep networks. ReLU and its variants mitigate this issue by maintaining a non-zero gradient for positive inputs.

Applications in Neural Networks

Activation functions play a pivotal role in various types of neural networks, including:

Feedforward Neural Networks

In feedforward neural networks, an activation function is applied to the weighted sum of inputs (the pre-activation) computed by each neuron in the hidden layers. The choice of activation function can significantly impact the network's ability to learn and generalize from data.
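A toy forward pass makes the placement of the activations concrete. The sketch below assumes a network with 2 inputs, 3 ReLU hidden units, and a 2-class softmax output; all weights are hypothetical, and plain lists stand in for a tensor library.

```python
import math

def relu(v):
    return [max(0.0, z) for z in v]

def softmax(v):
    m = max(v)
    exps = [math.exp(z - m) for z in v]
    total = sum(exps)
    return [e / total for e in exps]

def dense(W, b, x):
    """Affine layer: W @ x + b, with W given as a list of rows."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i for row, b_i in zip(W, b)]

def forward(x, W1, b1, W2, b2):
    # Hidden layer: the activation is applied to each neuron's weighted sum.
    h = relu(dense(W1, b1, x))
    # Output layer: softmax turns the output scores into class probabilities.
    return softmax(dense(W2, b2, h))

# Hypothetical weights for a 2-input, 3-hidden-unit, 2-class network.
W1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]]
b1 = [0.0, 0.1, -0.5]
W2 = [[1.0, -1.0, 0.5], [-0.5, 1.0, 1.0]]
b2 = [0.0, 0.0]

print(forward([1.0, 2.0], W1, b1, W2, b2))
```

Removing the `relu` call would make `forward` a composition of affine maps, which is exactly the collapse described in the Non-linearity section.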

Convolutional Neural Networks (CNNs)

CNNs use activation functions like ReLU and its variants to introduce non-linearity after convolutional layers. This helps the network learn hierarchical features from images, making CNNs effective for tasks like image classification and object detection.
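A one-dimensional sketch of "convolution followed by ReLU". Like deep-learning libraries, it actually computes a cross-correlation, here with no padding; the signal and the two-tap kernel (a hypothetical rising-edge detector) are made up for illustration.

```python
def conv1d_valid(signal, kernel, bias=0.0):
    """1-D cross-correlation with no padding (what deep-learning libraries call convolution)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k)) + bias
            for i in range(len(signal) - k + 1)]

def relu(v):
    return [max(0.0, z) for z in v]

signal = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
kernel = [-1.0, 1.0]   # hypothetical "rising edge" detector
raw = conv1d_valid(signal, kernel)
feature_map = relu(raw)
print(raw)
print(feature_map)
```

The raw response is +1 at the rising edge and -1 at the falling edge; after ReLU only the rising edge survives, so the non-linearity turns a signed filter response into a detector for one specific pattern.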

Recurrent Neural Networks (RNNs)

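RNNs, used for sequential data, often employ activation functions like tanh and sigmoid. However, because the gradient is multiplied by the activation's derivative (and by the recurrent weights) at every time step, these functions can lead to vanishing gradients over long sequences. This prompted more advanced architectures like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU), which still use sigmoid and tanh internally but incorporate gating mechanisms that let gradients flow across many time steps, mitigating this issue.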
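The effect can be measured in a scalar vanilla RNN, \(h_t = \tanh(w_x x_t + w_h h_{t-1} + b)\). By the chain rule, \(\partial h_T / \partial h_0 = \prod_t w_h (1 - h_t^2)\). The sketch below computes this product for a few sequence lengths; the parameters and the constant input are hypothetical.

```python
import math

def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b). Returns h_0..h_T."""
    hs = [h0]
    for x in xs:
        hs.append(math.tanh(w_x * x + w_h * hs[-1] + b))
    return hs

def grad_last_wrt_first(hs, w_h):
    """dh_T/dh_0 via the chain rule: product over t of w_h * (1 - h_t^2)."""
    g = 1.0
    for h in hs[1:]:
        g *= w_h * (1.0 - h * h)
    return g

for steps in (5, 20, 50):
    hs = rnn_forward([1.0] * steps, w_x=1.0, w_h=0.9, b=0.0)
    print(steps, grad_last_wrt_first(hs, w_h=0.9))
```

Each factor here is below 0.9 in magnitude, so the gradient shrinks at least geometrically with sequence length, and in practice much faster once the hidden state saturates.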

Generative Adversarial Networks (GANs)

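GANs consist of a generator and a discriminator network, both of which rely on activation functions to learn complex data distributions. The choice of activation function can affect the stability and performance of GAN training; for example, the DCGAN architecture uses ReLU in the generator (with tanh at its output layer) and Leaky ReLU in the discriminator.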

Challenges and Considerations

While activation functions are essential for neural network performance, they also present several challenges and considerations:

Vanishing and Exploding Gradients

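Activation functions like sigmoid and tanh can lead to vanishing gradients: during backpropagation the gradient is multiplied by the activation's derivative at every layer, and because these derivatives are small in the saturation regions, the product shrinks rapidly with depth and learning slows down. Conversely, gradients can explode, becoming excessively large, when they are repeatedly multiplied by large weights; unbounded activations such as ReLU do nothing to damp this growth. Techniques like gradient clipping, careful weight initialization, normalization layers, and architectures with residual connections or gating help address these issues.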
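Both effects can be illustrated in a few lines. The first function below is a deliberately simplified model, assuming a chain of sigmoids with all weights fixed to 1, so the gradient is just the product of sigmoid derivatives. The second sketches gradient clipping by global L2 norm, one common form of clipping; the function name is hypothetical.

```python
import math

def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_chain_grad(x: float, depth: int) -> float:
    """d/dx of sigmoid applied `depth` times in a row (weights assumed to be 1).
    Each factor is at most 0.25, so the result is at most 0.25 ** depth."""
    g = 1.0
    for _ in range(depth):
        s = sigmoid(x)
        g *= s * (1.0 - s)   # derivative of this layer at its input
        x = s                # output feeds the next layer
    return g

def clip_by_global_norm(grads: list[float], max_norm: float) -> list[float]:
    """Rescale the whole gradient vector if its L2 norm exceeds max_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= max_norm:
        return list(grads)
    scale = max_norm / norm
    return [g * scale for g in grads]

for depth in (1, 5, 10):
    print(depth, sigmoid_chain_grad(0.0, depth))
print(clip_by_global_norm([3.0, 4.0], max_norm=1.0))
```

Clipping by the global norm preserves the direction of the gradient and only limits the step size, which is why it addresses exploding gradients but does nothing for vanishing ones.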

Dying Neurons

ReLU can suffer from the dying neuron problem: if a neuron's pre-activation is negative for every input, it always outputs zero and receives zero gradient, so its weights stop updating. Leaky ReLU and PReLU are designed to mitigate this issue by allowing a small gradient for negative inputs.
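A single-neuron sketch of the problem. It assumes a neuron \(y = f(w x + b)\) with a hypothetical large negative bias, so the pre-activation is negative for every input in a small batch, and a constant upstream gradient \(\partial L / \partial y = 1\).

```python
def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0

def leaky_relu_grad(z: float, alpha: float = 0.01) -> float:
    return 1.0 if z >= 0 else alpha

def weight_grads(xs, w, b, act_grad, upstream=1.0):
    """dL/dw and dL/db for one neuron y = act(w*x + b), summed over a batch.
    `upstream` stands in for dL/dy and is assumed equal for every example."""
    dw = db = 0.0
    for x in xs:
        z = w * x + b
        local = upstream * act_grad(z)
        dw += local * x
        db += local
    return dw, db

xs = [0.5, 1.0, 2.0, 3.0]   # hypothetical positive inputs
w, b = 1.0, -10.0           # large negative bias: w*x + b < 0 for every x above
print("ReLU:      ", weight_grads(xs, w, b, relu_grad))
print("Leaky ReLU:", weight_grads(xs, w, b, leaky_relu_grad))
```

With ReLU both gradients are exactly zero, so no optimizer step can move the neuron out of this state; with Leaky ReLU they are small but non-zero.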

Choosing the Right Activation Function

The choice of activation function depends on the specific task and architecture. Experimentation and empirical evaluation are often necessary to determine the most suitable function for a given problem.

Future Directions

Research on activation functions continues to evolve, with new functions being proposed to address the limitations of existing ones. Some promising directions include:

Swish Activation Function

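The Swish activation function is defined as: \[ f(x) = x \cdot \text{sigmoid}(x) \] This is the \(\beta = 1\) case of the more general form \(x \cdot \text{sigmoid}(\beta x)\), where \(\beta\) can be a constant or a learnable parameter; with \(\beta = 1\) the function is also known as SiLU. Swish has shown promising results in various tasks, outperforming ReLU in some cases.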
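A minimal sketch of Swish with an optional \(\beta\) (defaulting to 1, the form given above). Unlike ReLU, it dips slightly below zero for negative inputs and is not monotonic.

```python
import math

def sigmoid(x: float) -> float:
    """Logistic sigmoid, branching on sign so exp() never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def swish(x: float, beta: float = 1.0) -> float:
    """Swish: x * sigmoid(beta * x). With beta = 1 this is SiLU."""
    return x * sigmoid(beta * x)

for x in (-5.0, -1.28, 0.0, 1.0, 20.0):
    print(f"x={x:+.2f}  swish={swish(x):+.4f}")
```

For large positive inputs Swish approaches the identity, like ReLU; for negative inputs it falls to a small minimum and then returns toward zero.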

Mish Activation Function

The Mish activation function is given by: \[ f(x) = x \cdot \tanh(\text{softplus}(x)) \] where \(\text{softplus}(x) = \ln(1 + e^x)\). Mish has been found to improve performance in certain deep learning models.
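A sketch of Mish built on a numerically stable softplus. It uses the identity \(\ln(1 + e^x) = \max(x, 0) + \ln(1 + e^{-|x|})\), which avoids overflow for large \(x\); `math.log1p` is the standard-library function for \(\ln(1 + y)\).

```python
import math

def softplus(x: float) -> float:
    """Numerically stable softplus: ln(1 + e^x) = max(x, 0) + ln(1 + e^(-|x|))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def mish(x: float) -> float:
    """Mish: x * tanh(softplus(x))."""
    return x * math.tanh(softplus(x))

for x in (-3.0, -1.0, 0.0, 1.0, 30.0):
    print(f"x={x:+.1f}  mish={mish(x):+.4f}")
```

Like Swish, Mish is smooth, close to the identity for large positive inputs, and slightly negative for negative inputs.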

Adaptive Activation Functions

Adaptive activation functions, where the parameters of the function are learned during training, offer a flexible approach to activation. Examples include PReLU and other learnable variants.